Why read this book?

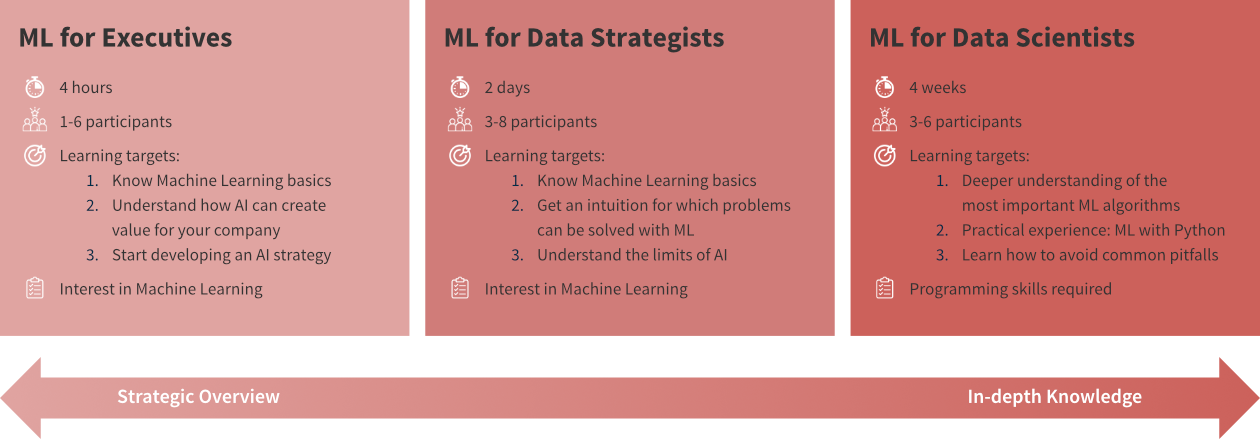

There are a lot of machine learning (ML) resources out there. Many of them are either targeted at students or researchers and rather heavy on the mathematical theory, or they come in the form of tutorials that focus on the concrete implementation and application of some ML algorithm to a specific problem. This book tries to find a middle ground between the theoretical background, which I studied in depth while completing my PhD in machine learning at the TU Berlin, Germany, and the practical application of these algorithms to solve different problems, as I have been doing in the last few years as an independent data science consultant for various firms. This book originated from my experience holding dozens of machine learning seminars and workshops in front of audiences with varying levels of technical and mathematical background.

Questions this book answers:

- Which problems can machine learning (ML) solve?

- How does ML solve these problems, i.e., how do the algorithms work (in theory)?

- How do you actually get this to work in practice and avoid common pitfalls?

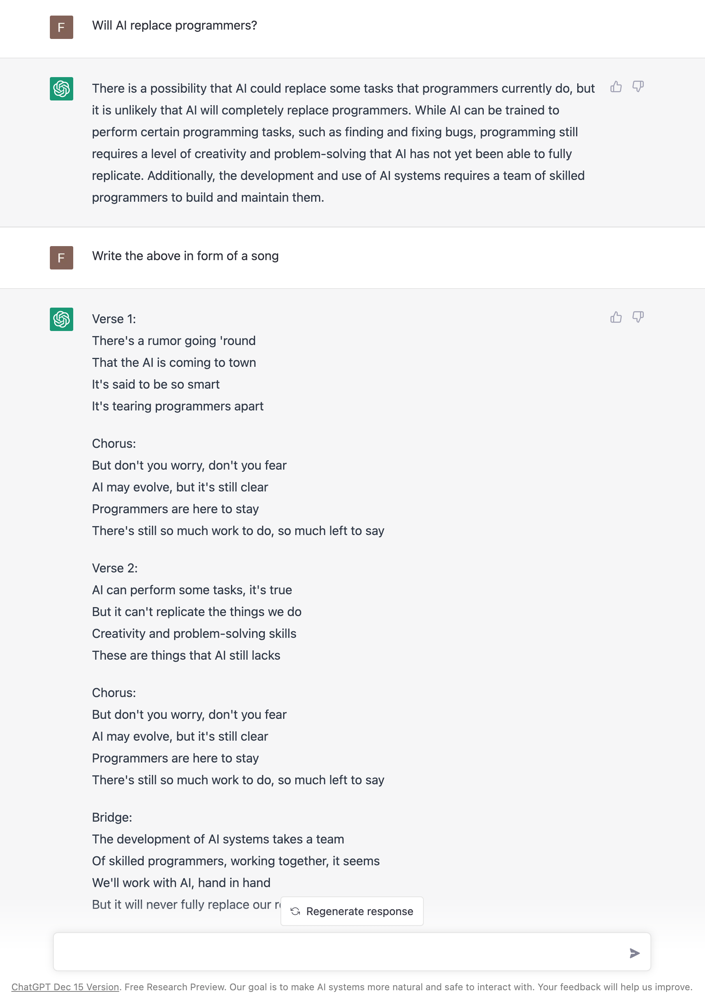

This book does not explain the latest fancy neural network model that achieves state-of-the-art performance on some specific task. Instead it provides a general intuition for the ideas behind different machine learning algorithms to establish a solid framework that helps you better understand and integrate into a bigger picture what you later read about these specific approaches.

This book and the associated courses exist in two versions:

The condensed version is written for all audiences, i.e., readers generally interested in ML, who want to understand what is behind the hype and where ML can — or should not — be used. The full version is mainly written for ML practitioners and assumes the reader is familiar with elementary concepts of linear algebra (see also this overview on the mathematical notation used in the book).

While the book focuses on the general principles behind the different models, there are also references included to specific Python libraries (mostly scikit-learn) where the respective algorithms are implemented and tips for how to use them. To get an even deeper understanding of how to apply the different algorithms, I recommend that you try to solve some exercises covering different ML use cases.

This is still a draft version! Please write me an email or fill out the feedback survey, if you have any suggestions for how this book could be improved!

Enjoy! :-)

- Acknowledgments

I would like to thank: Antje Relitz for her feedback & contributions to the original workshop materials, Robin Horn for his feedback & help with the German translation of the book, and Karin Zink for her help with some of the graphics (incl. the book cover).

- How to cite

    @misc{horn2021mlpractitioner,
      author = {Horn, Franziska},
      title = {A Practitioner's Guide to Machine Learning},
      year = {2021},
      url = {https://franziskahorn.de/mlbook/},
    }

Introduction

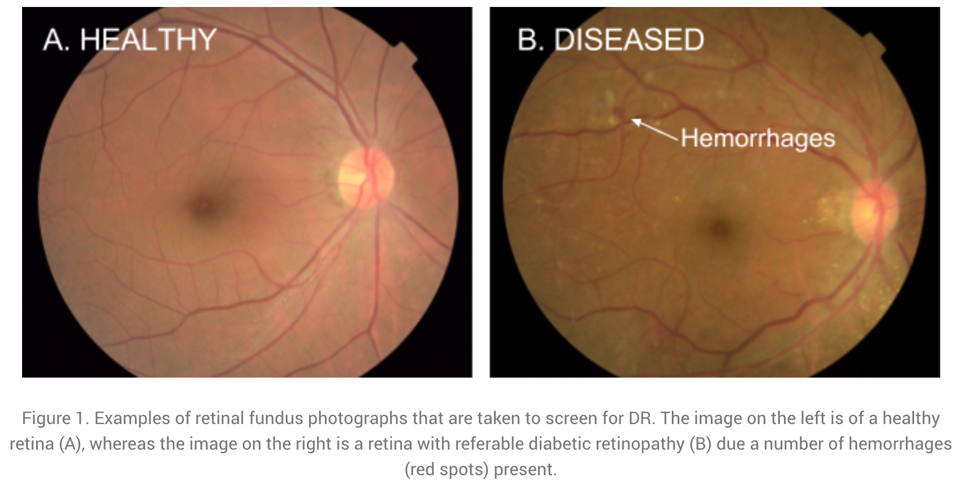

This chapter provides some motivating examples illustrating the rise of machine learning (ML).

ML is everywhere!

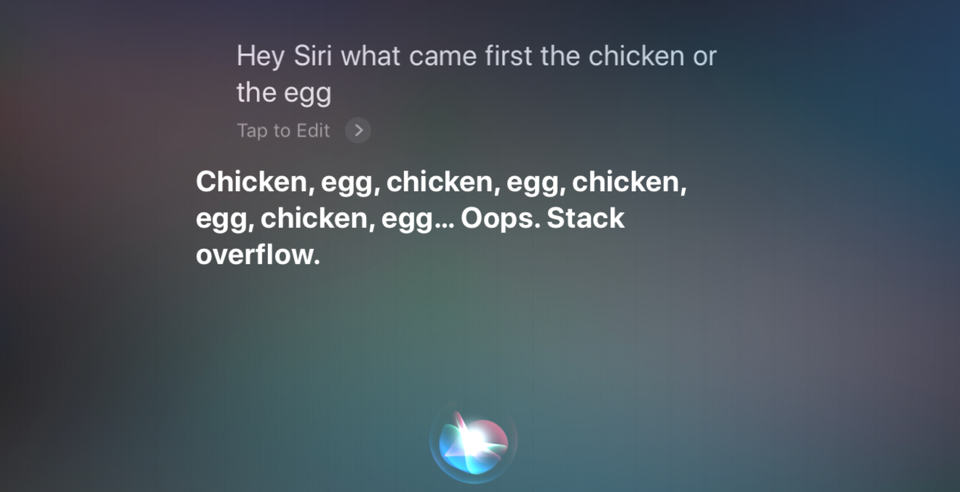

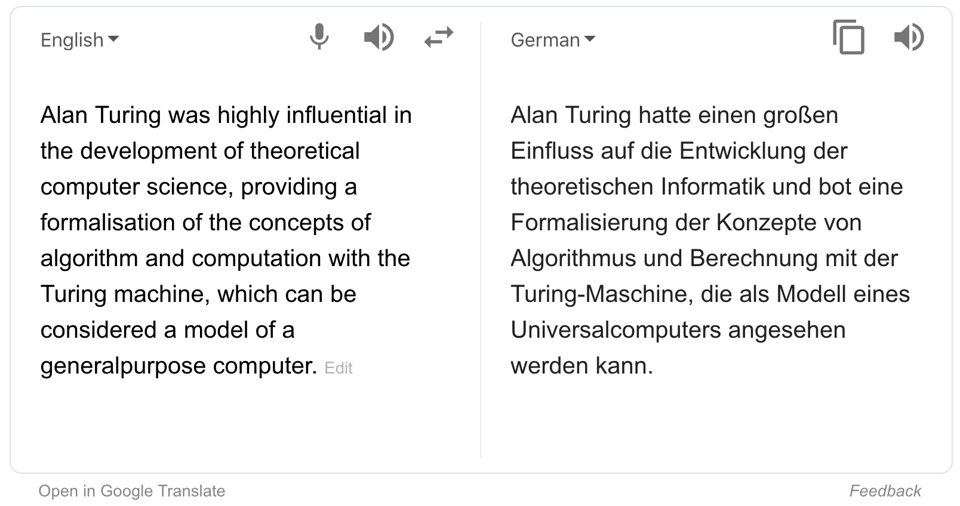

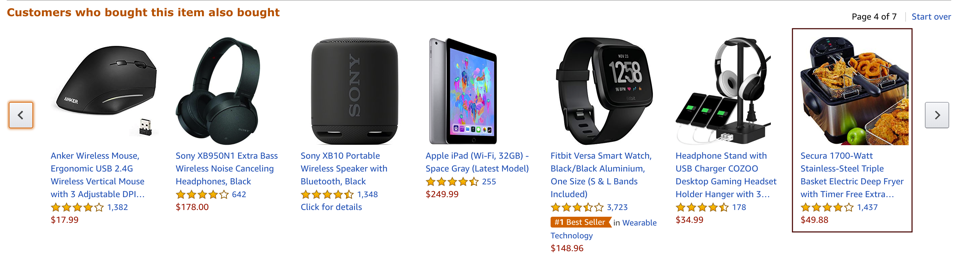

Machine learning is already used all around us to make our lives more convenient:

ML history: Why now?

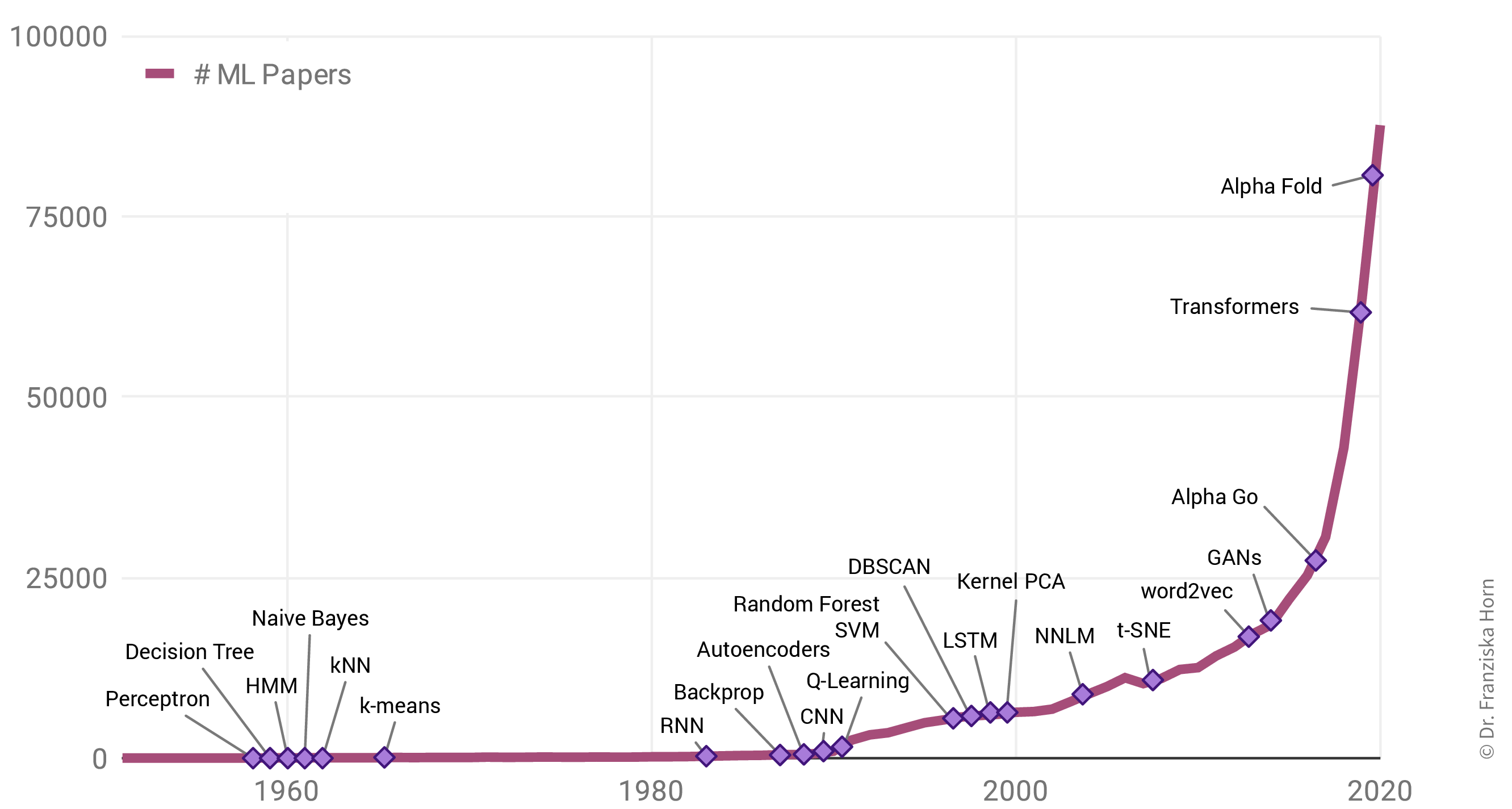

Why is there such a rise in ML applications? Not only has ML become omnipresent in our everyday lives, but the number of research papers published each year has also increased exponentially:

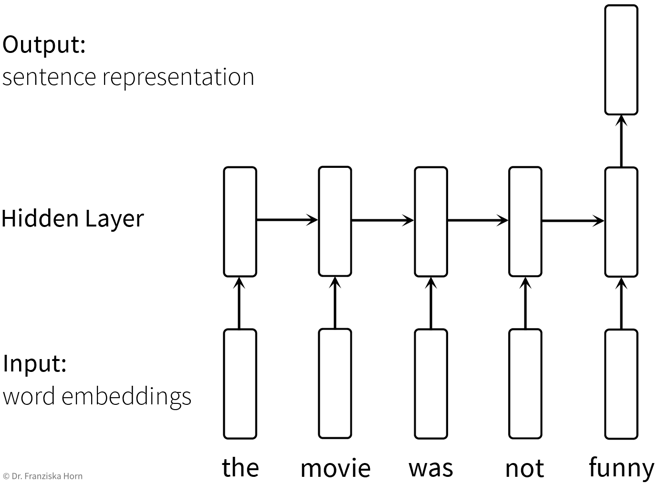

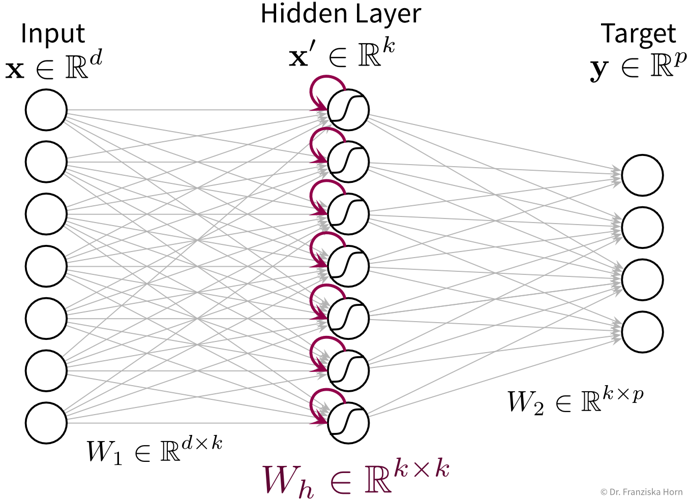

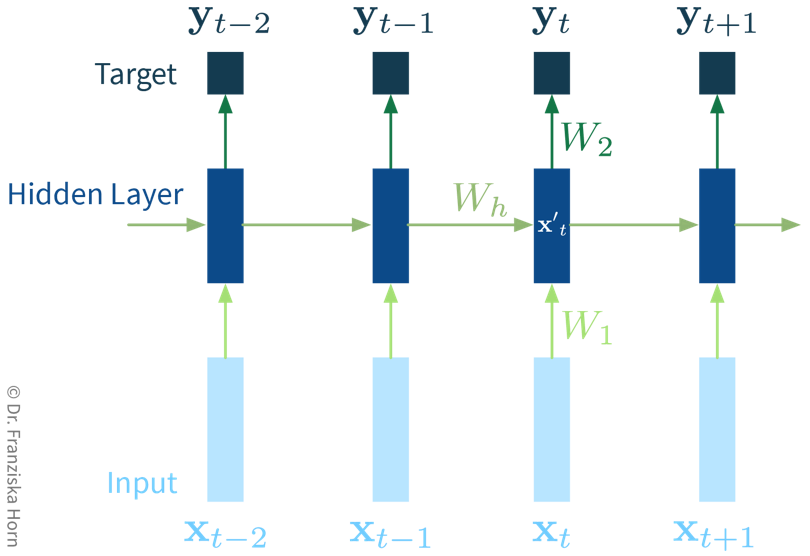

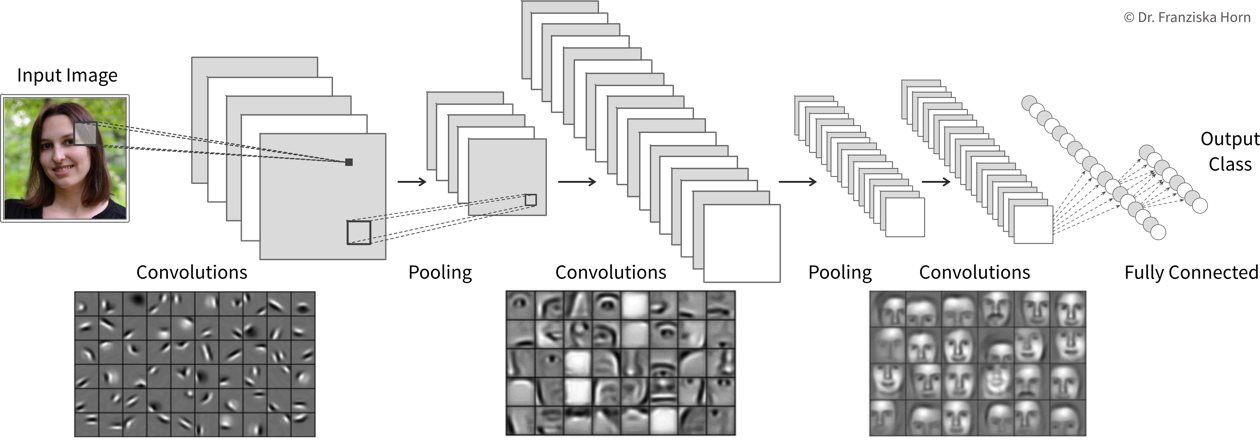

Interestingly, this is not due to an abundance of groundbreaking theoretical accomplishments in the last few years (indicated as purple diamonds in the plot), but rather many of the algorithms used today were actually developed as far back as the late 50s / early 60s. For example, the perceptron is a precursor of neural networks, which are behind all the examples shown in the last section. Indeed, the most important neural network architectures, recurrent neural networks (RNN) and convolutional neural networks (CNN), which provide the foundation for state-of-the-art language and image processing respectively, were developed in the early 80s and 90s. But back then we lacked the computational resources to use them on anything more than small toy datasets.

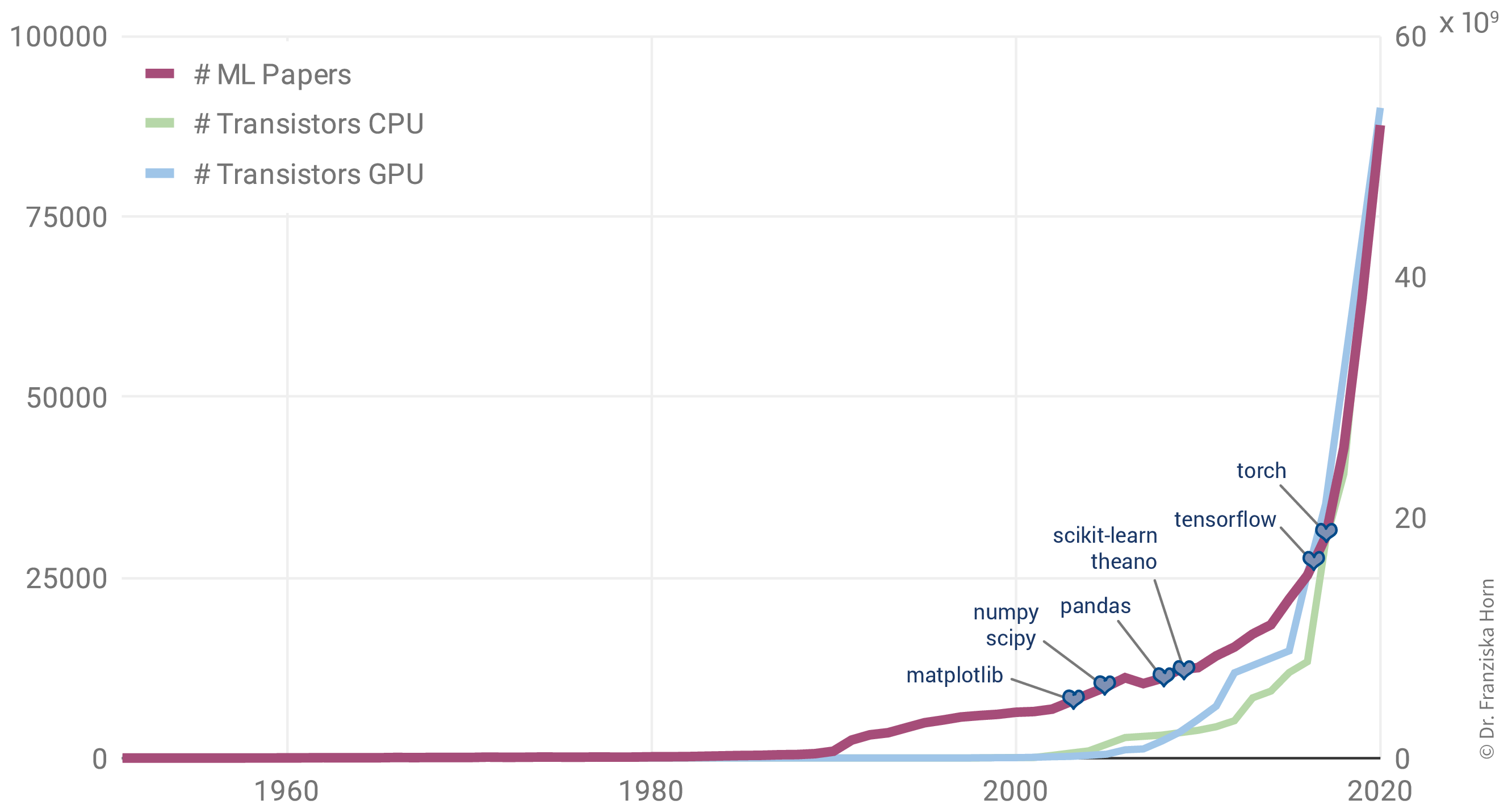

This is why the rise in ML publications correlates more closely with the number of transistors on CPUs (i.e., the regular processors in normal computers) and GPUs (graphics cards, which parallelize the kinds of computations needed to train neural network models efficiently):

Additionally, the release of many open source libraries, such as scikit-learn (for traditional ML models) and theano, tensorflow, and (py)torch (for the implementation of neural networks), has further facilitated the use of ML algorithms in many different fields.

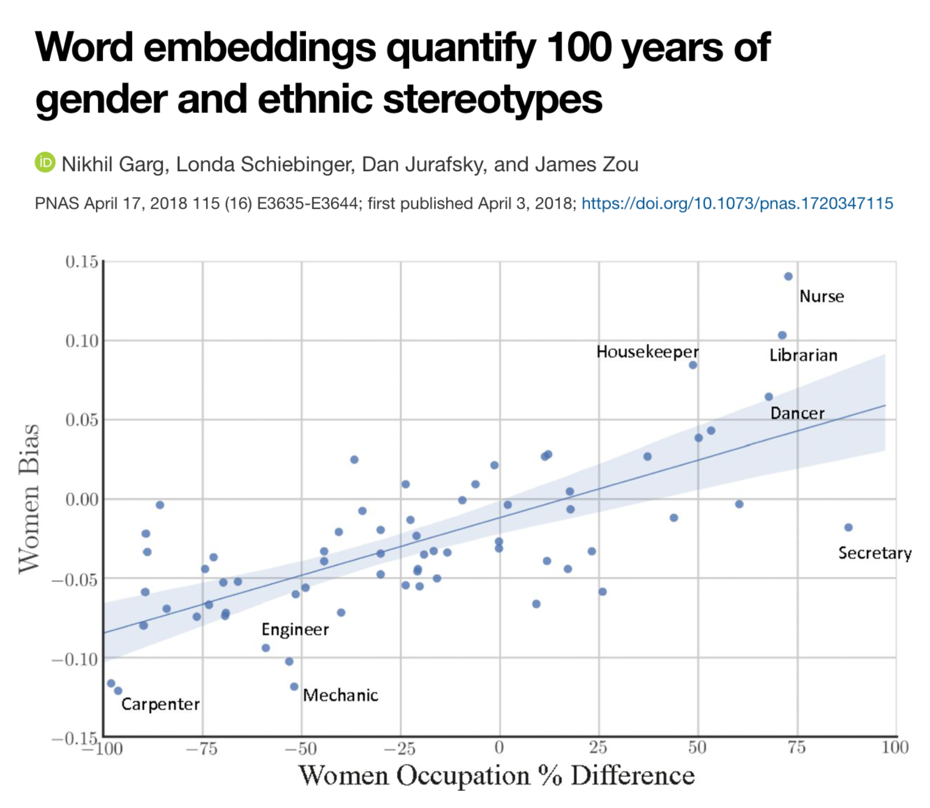

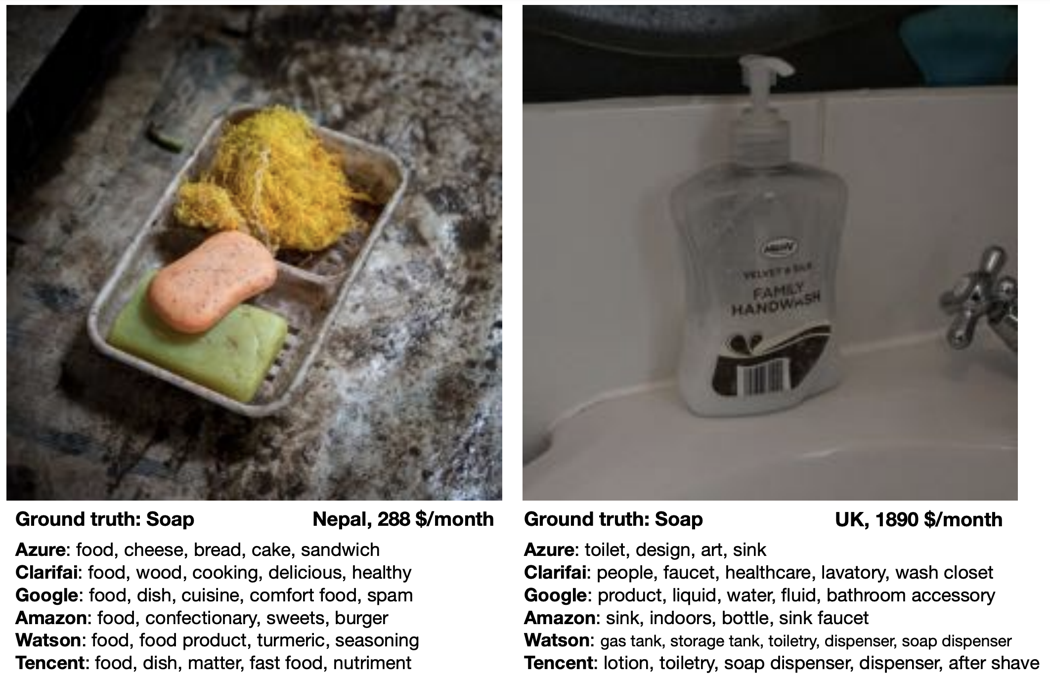

| While these libraries democratize the use of ML, unfortunately, this also brings with it the downside that ML is now often applied without a sound understanding of the theoretical underpinnings of these algorithms or their assumptions about the data. This can result in models that don’t show the expected performance and subsequently some (misplaced) disappointment in ML. In the worst case, it can lead to models that discriminate against certain parts of the population, e.g., credit scoring algorithms used by banks that systematically give women loans at higher interest rates than men due to biases encoded in the historical data used to train the models. We’ll discuss these kinds of issues in the chapter on avoiding common pitfalls. |

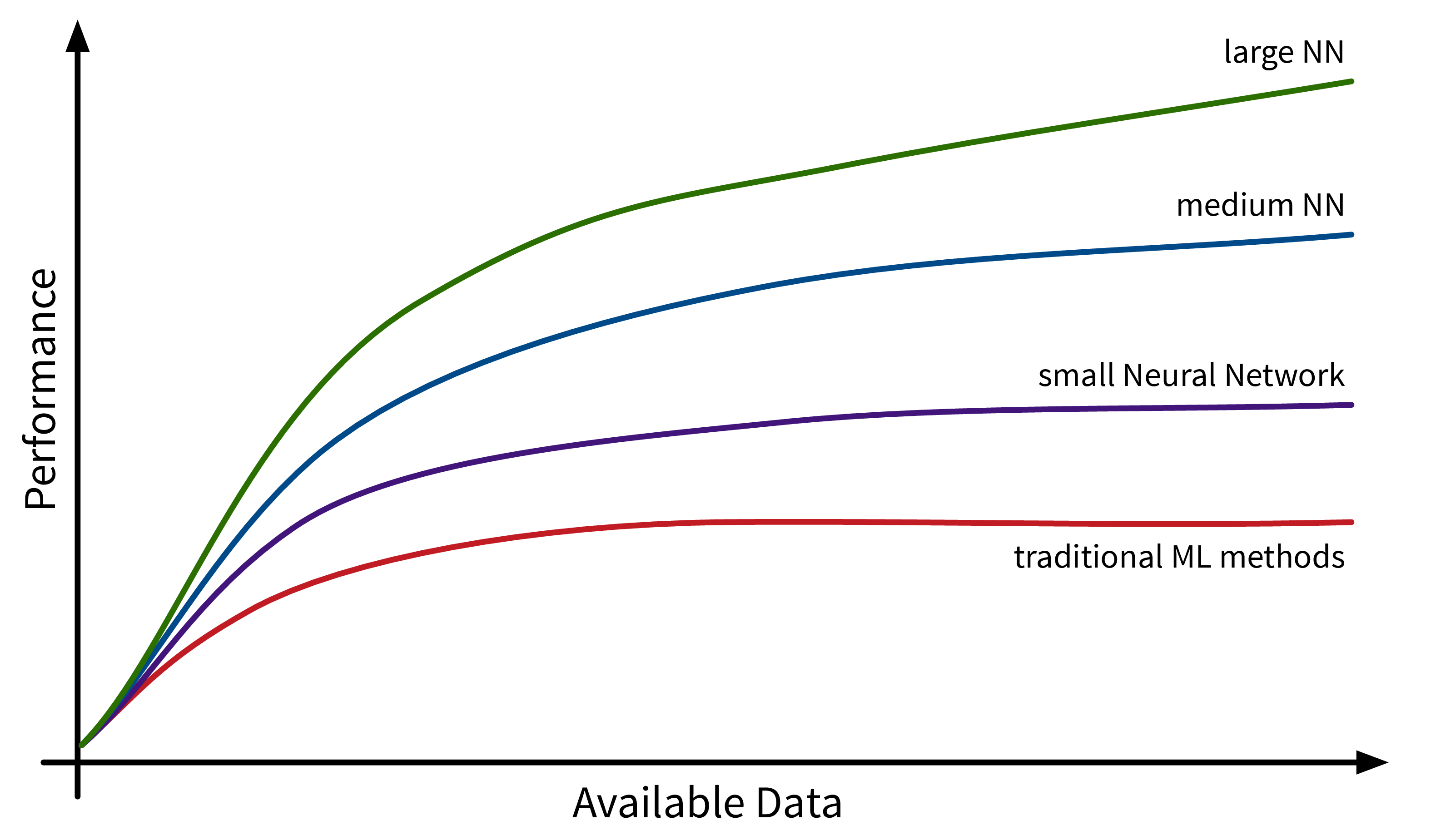

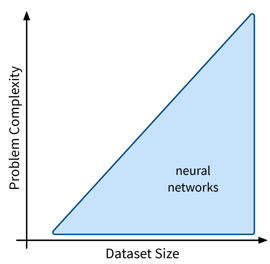

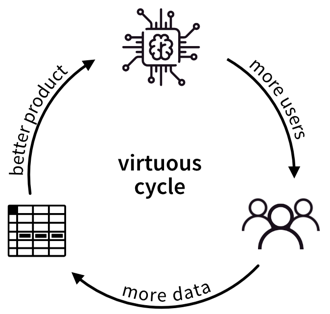

Another factor driving the spread of ML is the availability of (digital) data. Companies like Google, Amazon, and Meta have had a head start here, as their business model was built around data from the start, but other companies are starting to catch up. While traditional ML models do not benefit much from all this available data, large neural network models with many degrees of freedom can now show their full potential by learning from all the texts and images posted every day on the Internet:

The Basics

This chapter provides a general introduction to what machine learning (ML) actually is and where it can (or should not) be used.

Data is the new oil!?

Let’s take a step back. Because it all begins with data.

You’ve probably heard this claim before: “Data is the new oil!”. This suggests that data is valuable. But is it?

Oil is considered valuable because we have important use cases for it: powering our cars, heating our homes, and producing plastics or fertilizers.

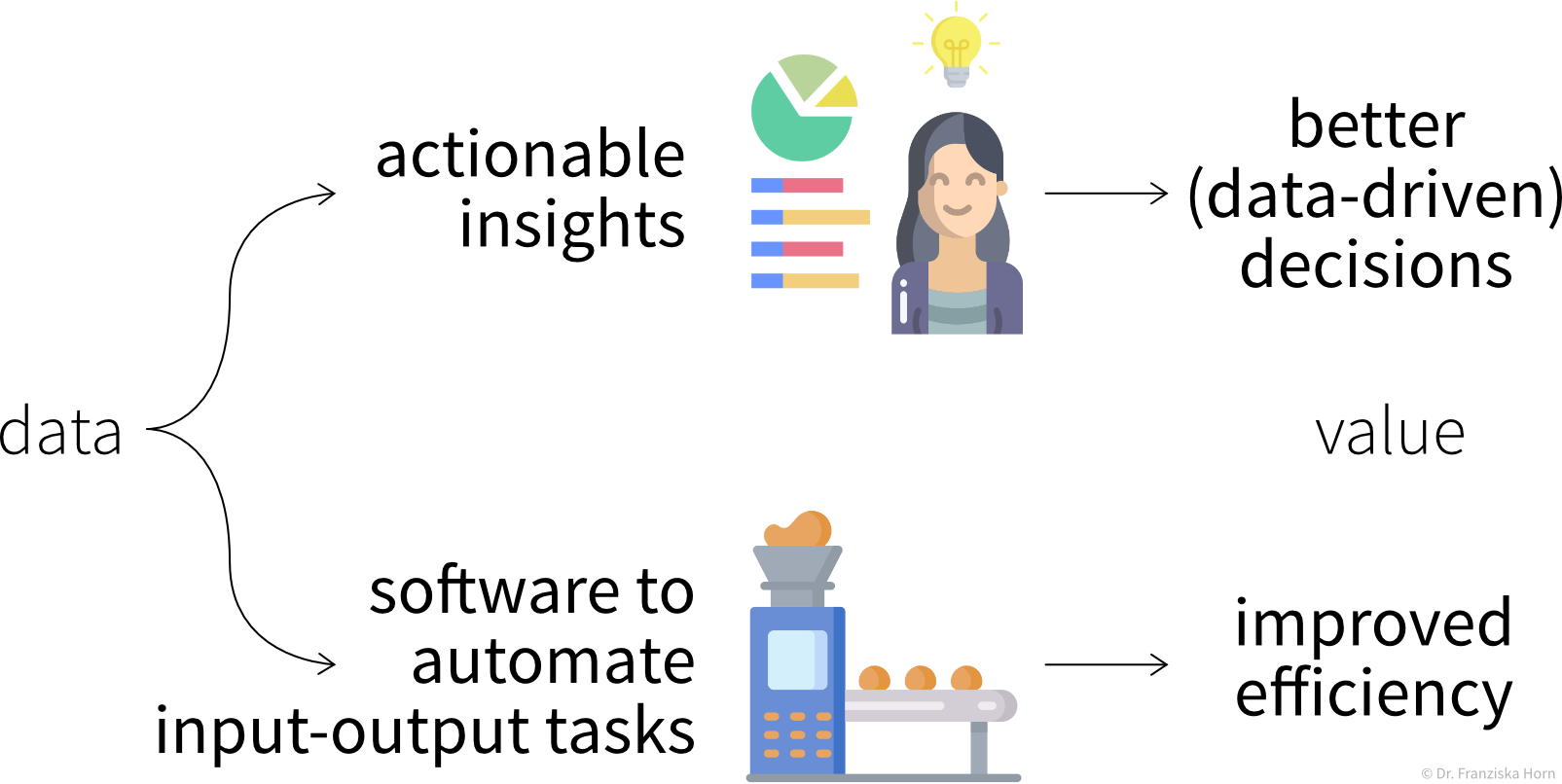

Similarly, our data is only as valuable as what we make of it. So what can we use data for?

The main use cases belong to one of two categories:

Insights

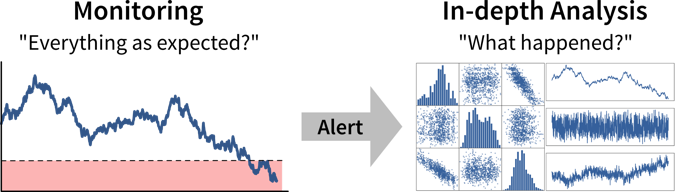

We can generate insights either through continuous monitoring (“Are we on track?”) or a deeper analysis (“What’s wrong?”).

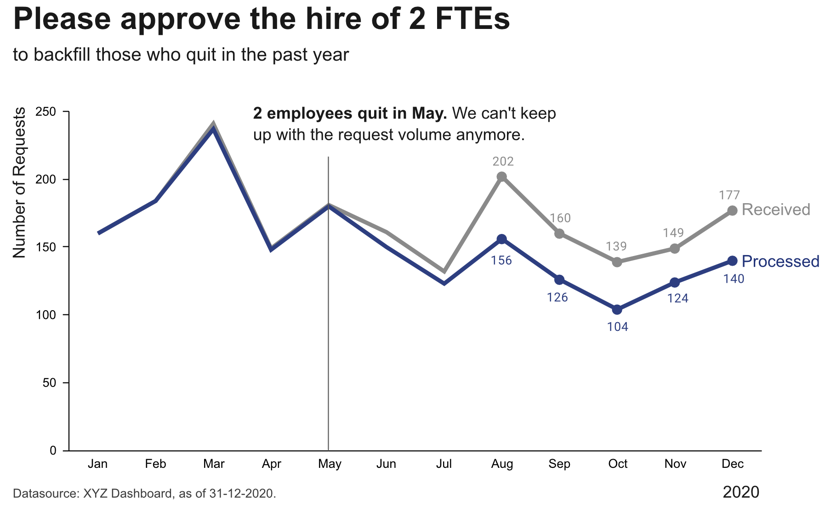

By visualizing important variables or Key Performance Indicators (KPIs) in reports or dashboards, we increase transparency of the status quo and quantify our progress towards some goal. When a KPI is far from its target value, we can dig deeper into the data with an exploratory data analysis to identify the root cause of the problem and answer questions such as

- Why are we not reaching our goal?

- What should we do next?

However, as we’ll discuss in more detail in the section on data analysis, arriving at satisfactory answers is often more art than science 😉.

Automation

As described in the following sections, machine learning models can be used to automate ‘input → output’ tasks otherwise requiring a human (expert). These tasks are usually easy for an (appropriately trained) human, for example:

- Translating texts from one language into another

- Sorting out products with scratches when they pass a checkpoint on the assembly line

- Recommending movies to a friend

For this to work, the ML models need to be trained on a lot of historical data (e.g., texts in both languages, images of products with and without scratches, information about different users and which movies they watched).

The resulting software can then either be used to automate the task completely, or we can keep a human in the loop who can intervene and correct the suggestions made by the model.

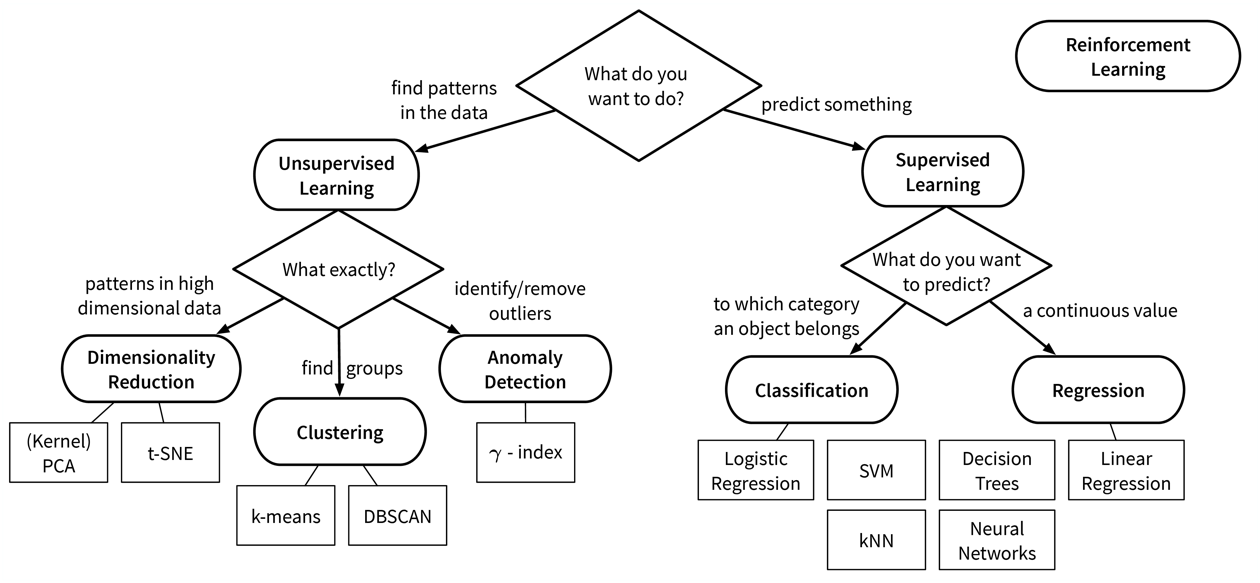

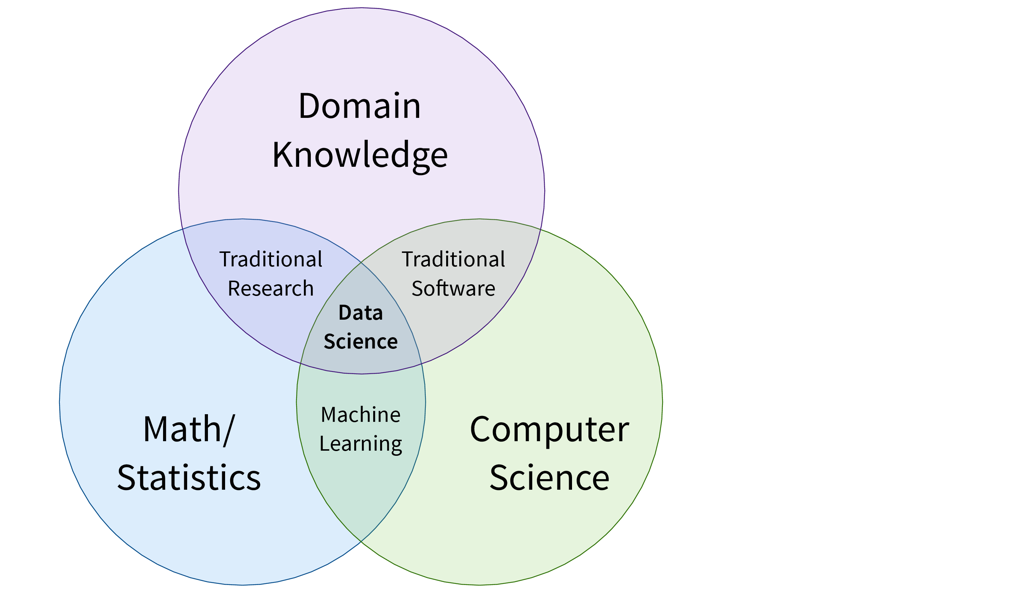

What is ML?

OK, now what exactly is this machine learning that is already transforming all of our lives?

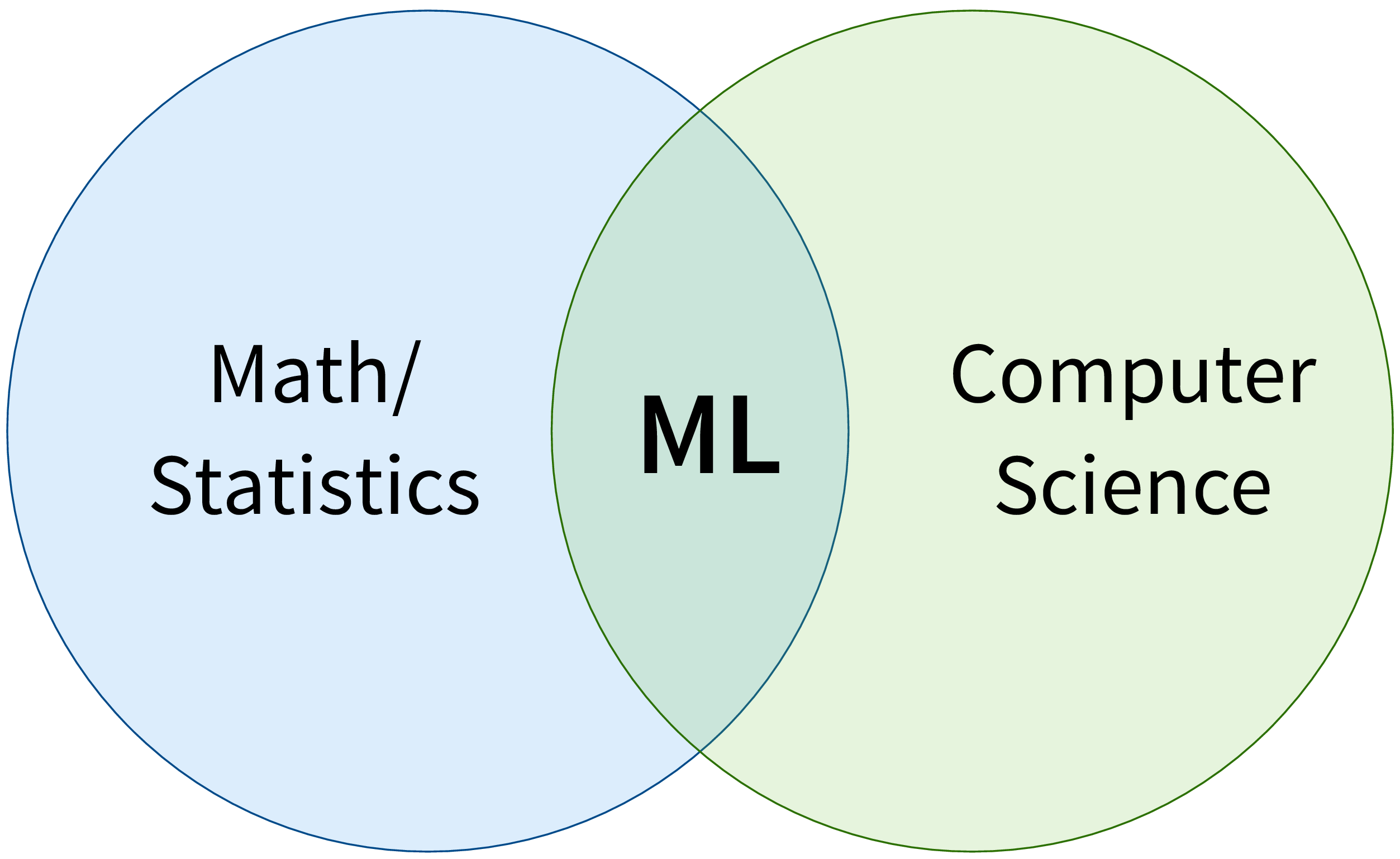

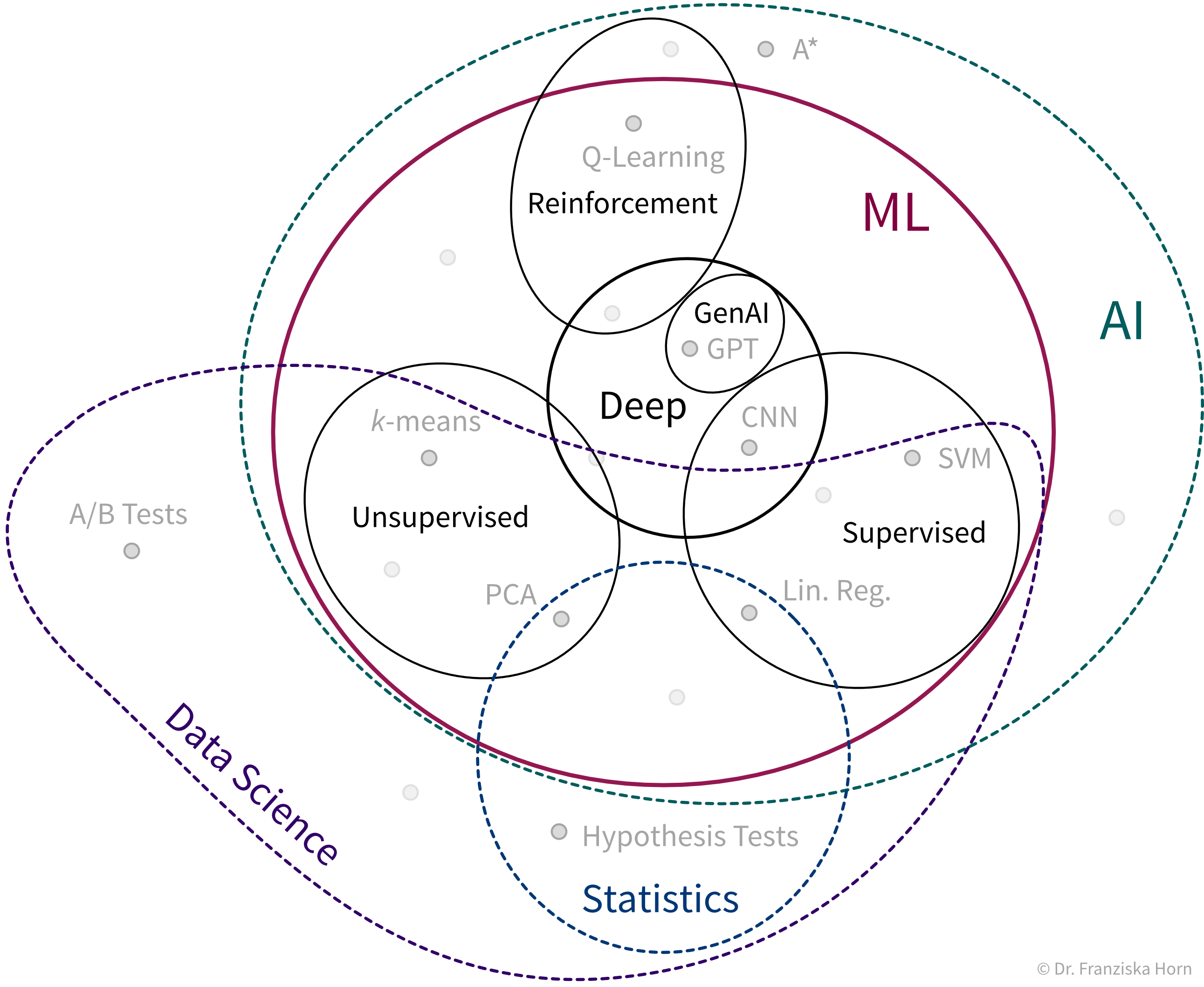

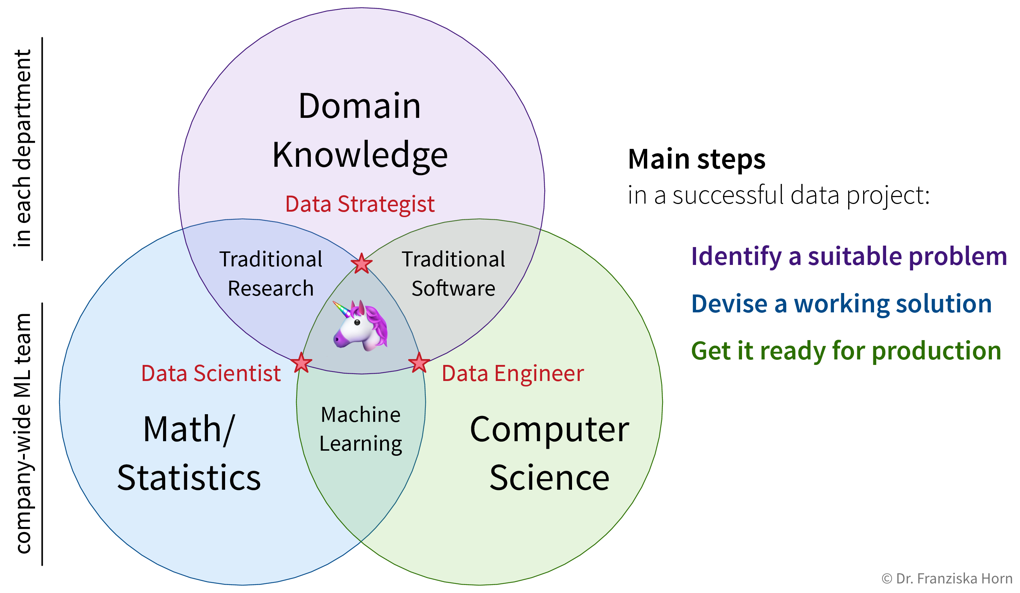

First of all, ML is an area of research in the field of theoretical computer science, i.e., at the intersection of mathematics and computer science:

More specifically, machine learning is an umbrella term for algorithms that recognize patterns and learn rules from data.

| Simply speaking, an algorithm can be thought of as a strategy or recipe for solving a certain kind of problem. For example, there exist effective algorithms to find the shortest path between two cities (e.g., used in Google Maps to give directions) or to solve scheduling problems, such as: “Which task should be done first and which task after that to finish all tasks before their respective deadlines and satisfy dependencies between the tasks?” Machine learning deals with the subset of algorithms that detect and make use of statistical regularities in a dataset to obtain specific results. |

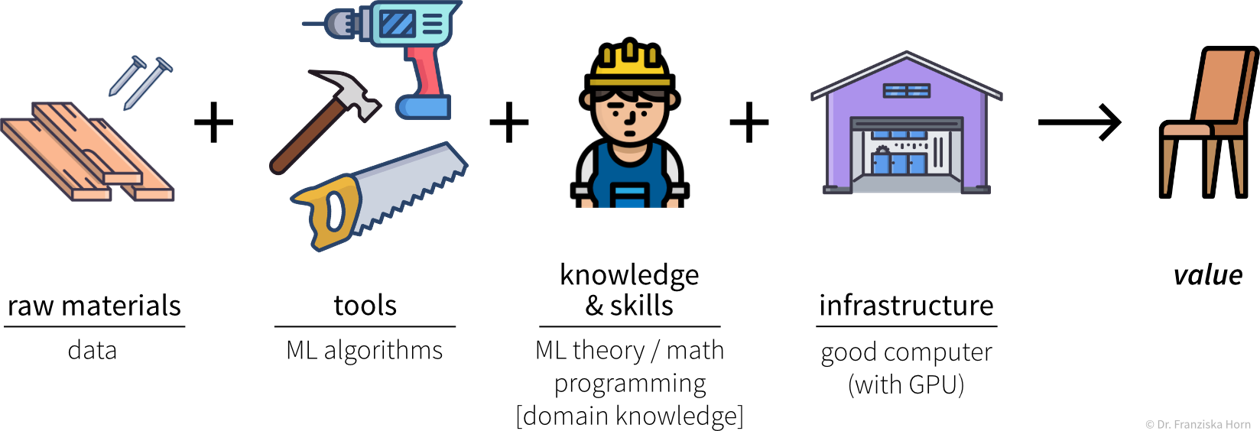

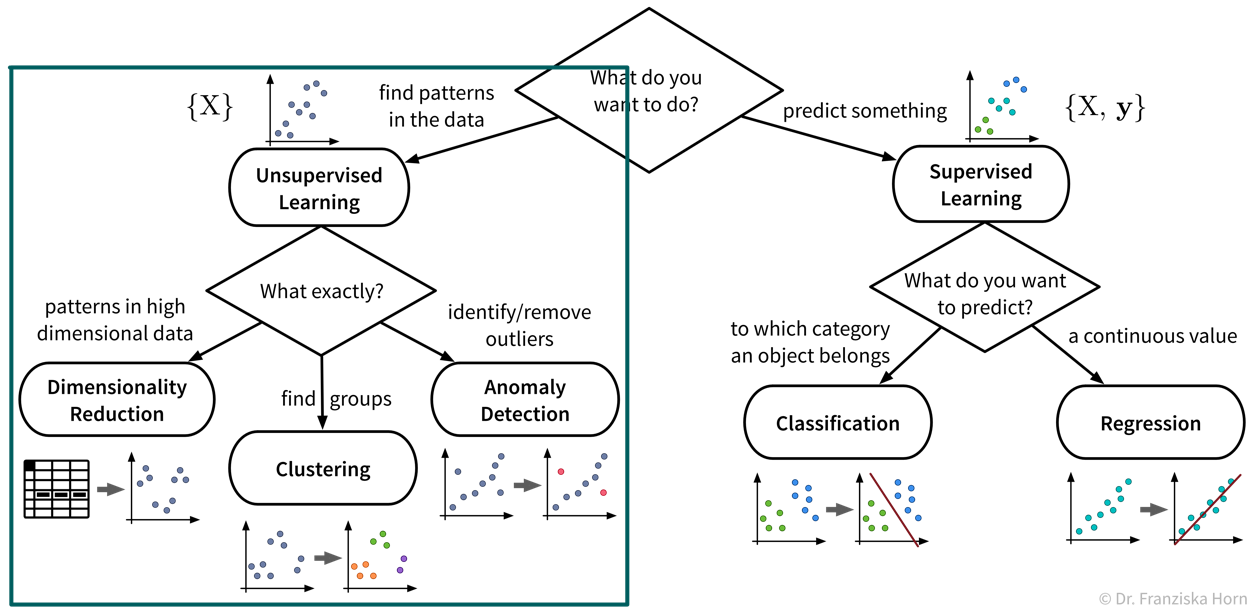

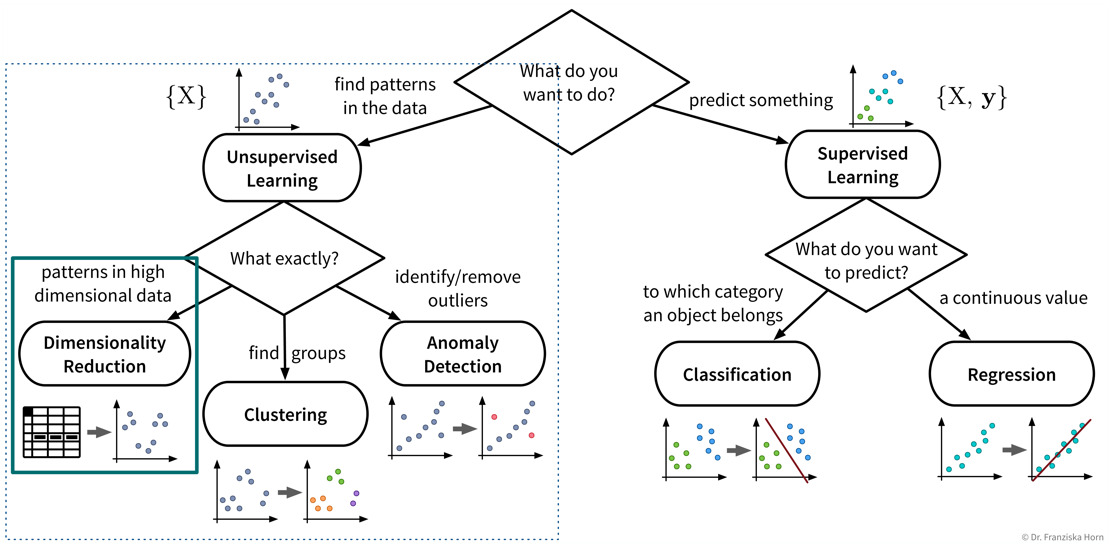

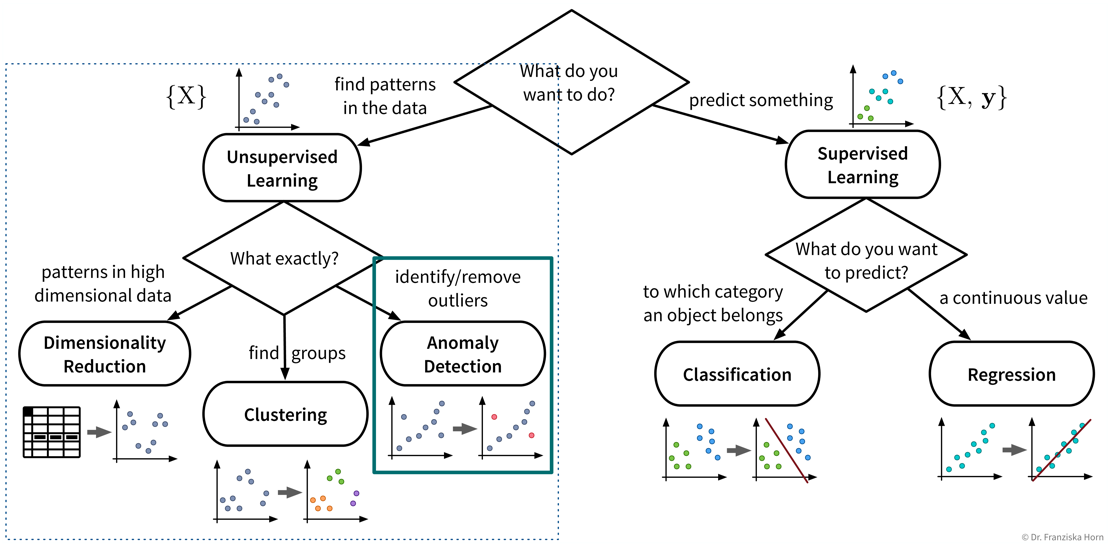

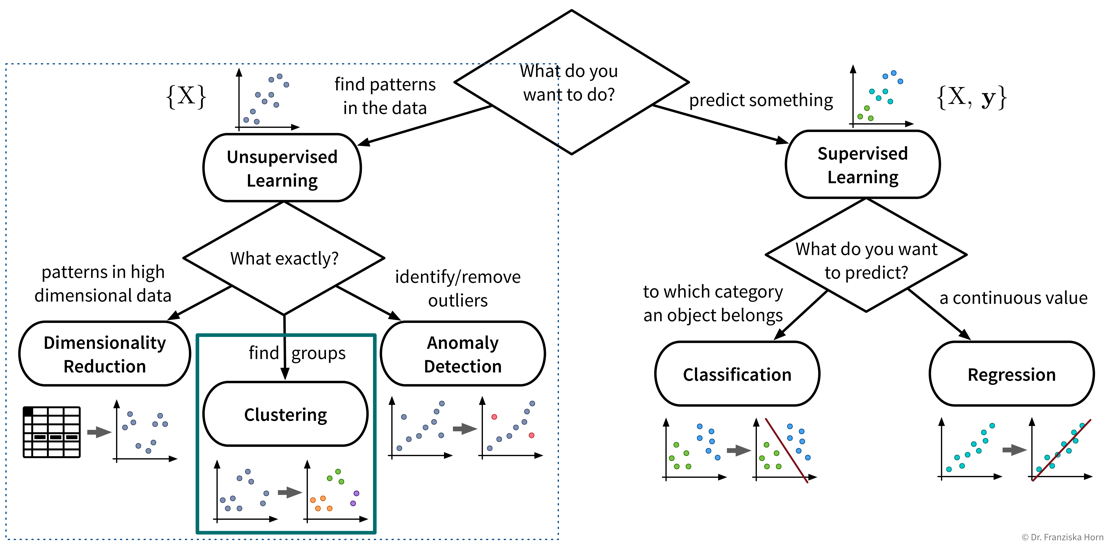

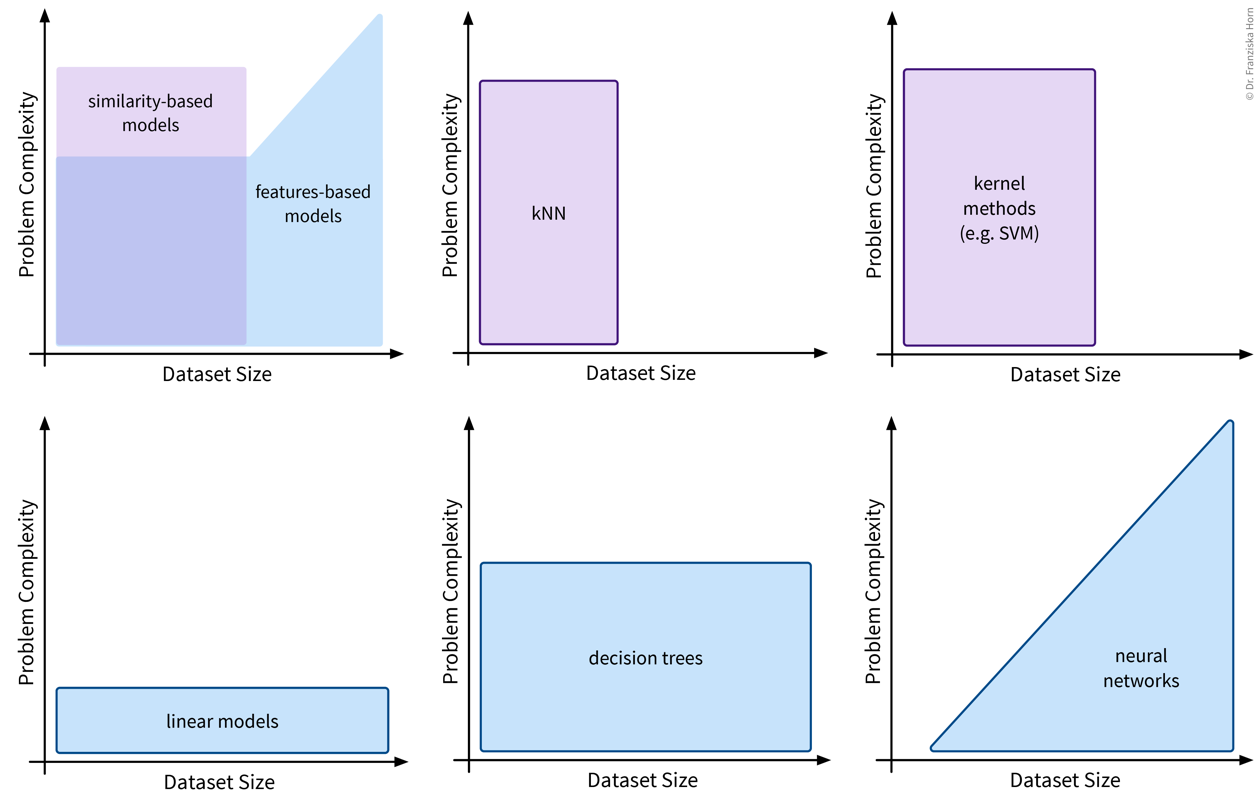

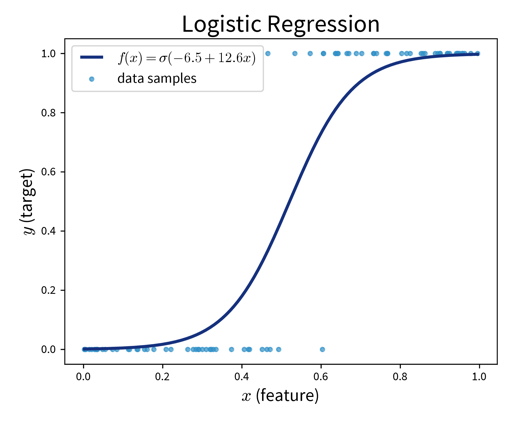

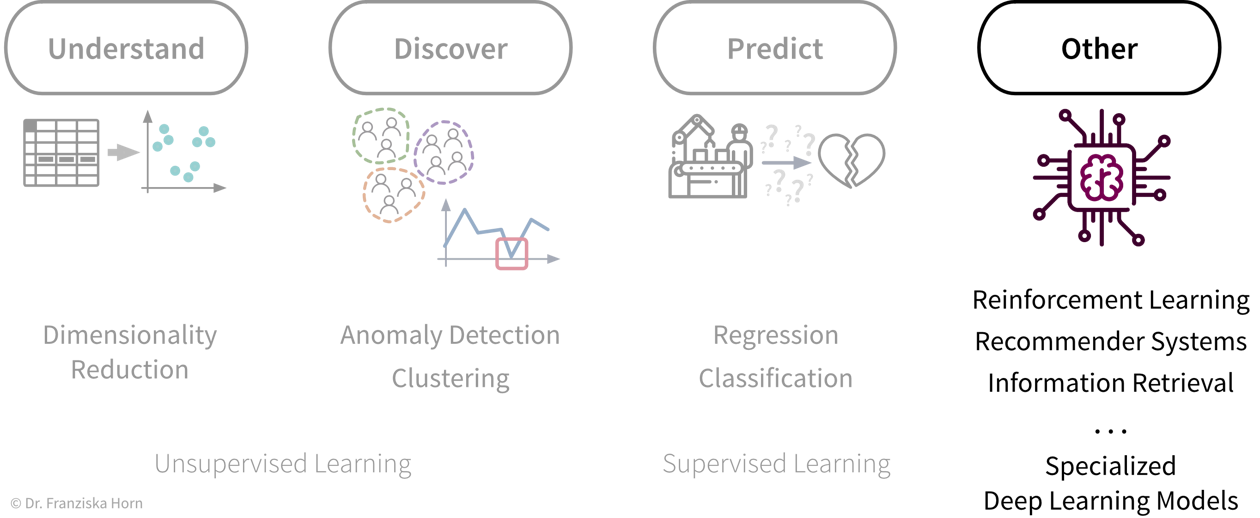

We can think of the different ML algorithms as our ML toolbox:

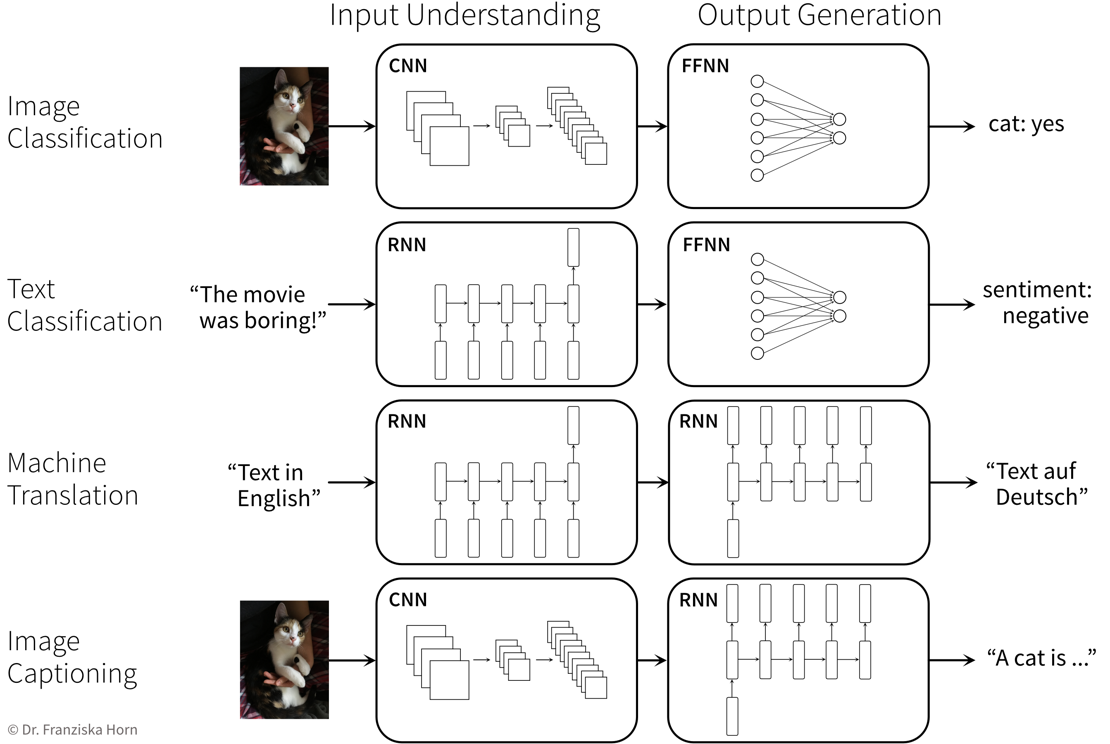

- ML algorithms solve “input → output” problems

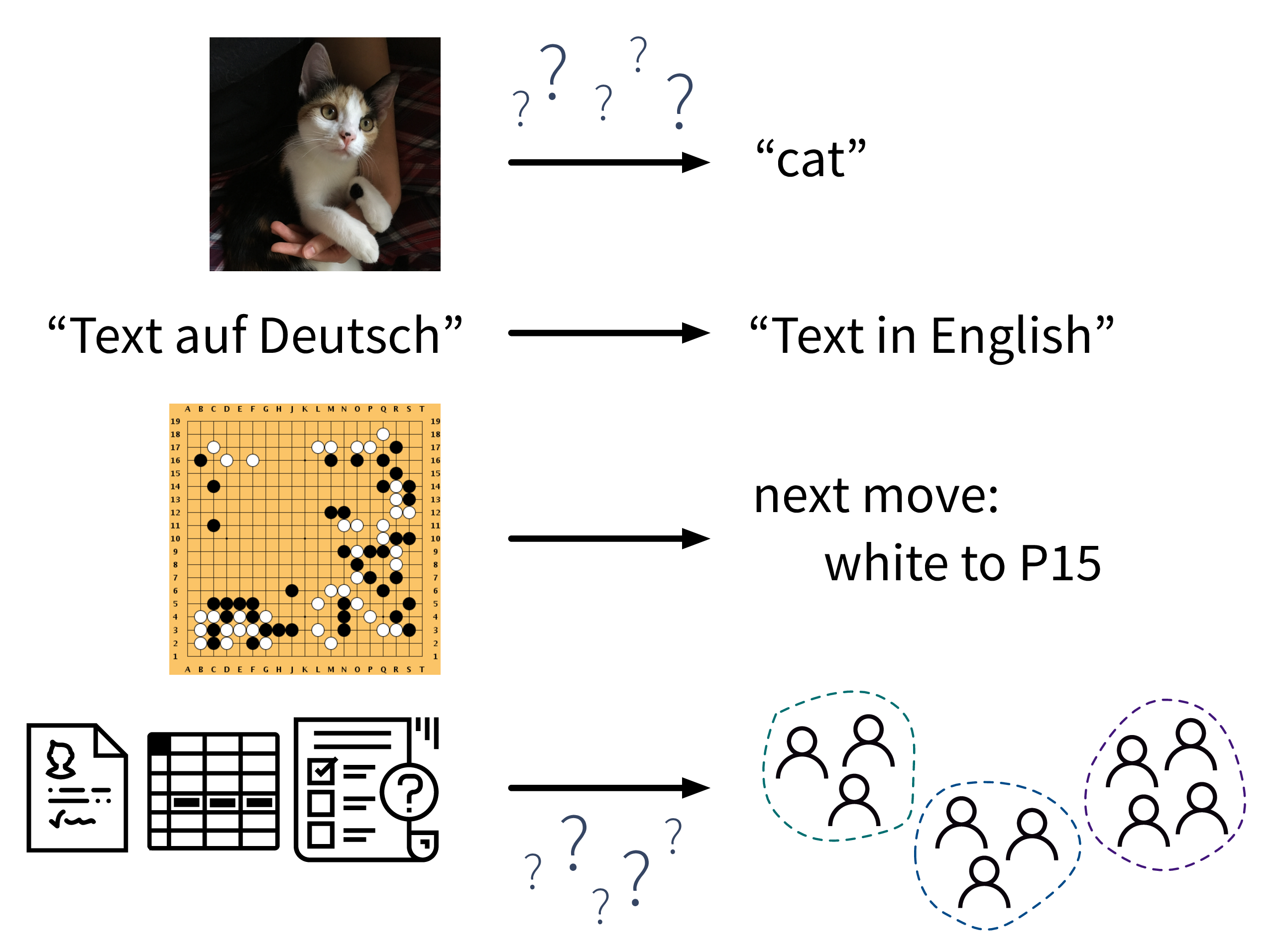

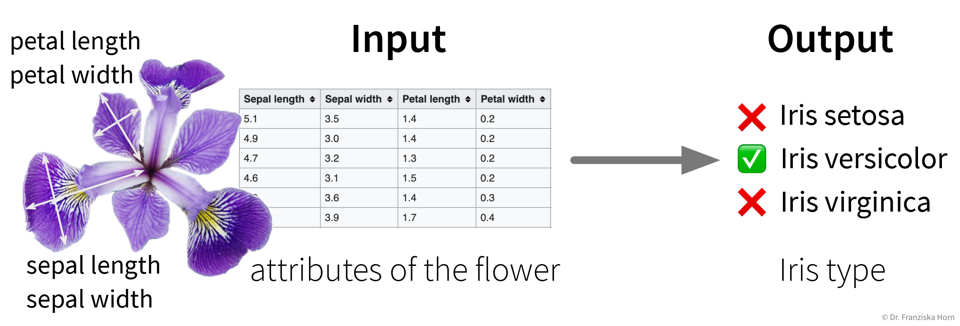

What all of these ML algorithms have in common is that they solve “input → output” problems like these:

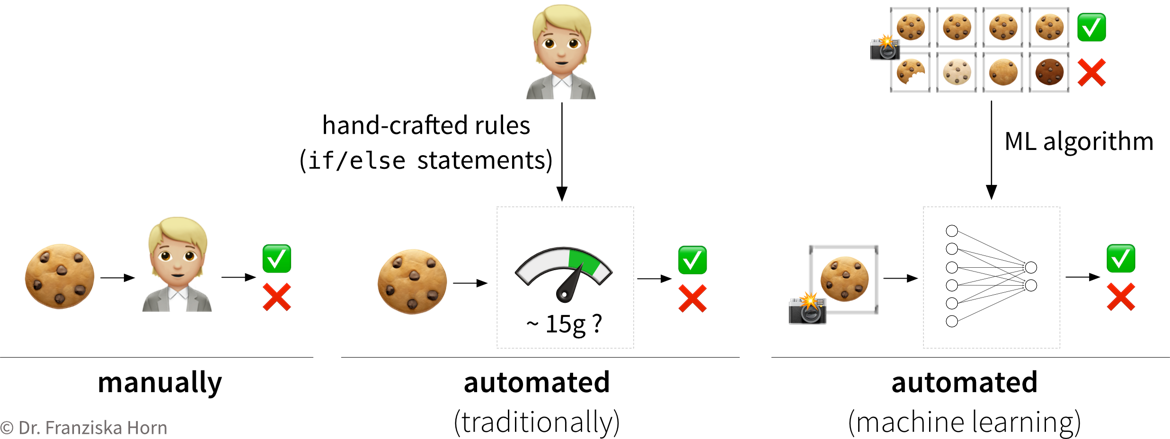

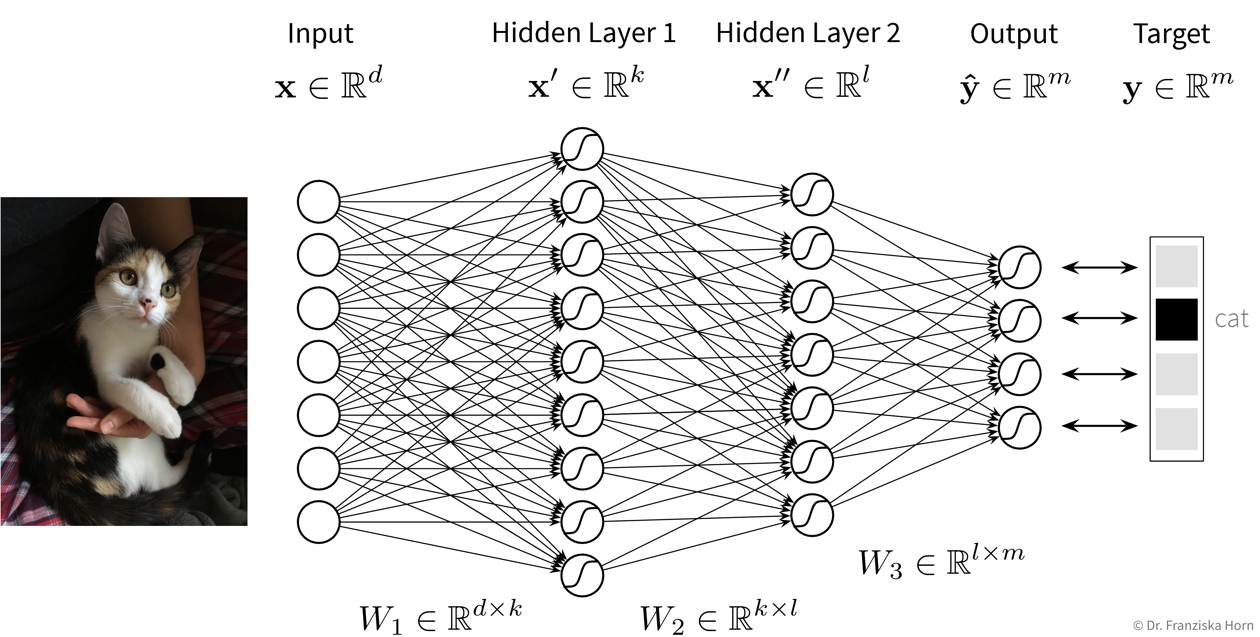

In the above examples, while a human (expert) could easily produce the correct output given the input (e.g., even a small child can recognize the cat in the first image), humans have a hard time describing how they arrived at the correct answer (e.g., how did you know that this is a cat (and not a small dog)? because of the pointy ears? the whiskers?). ML algorithms can learn such rules from the given data samples.

- Steps to identify a potential ML project

-

-

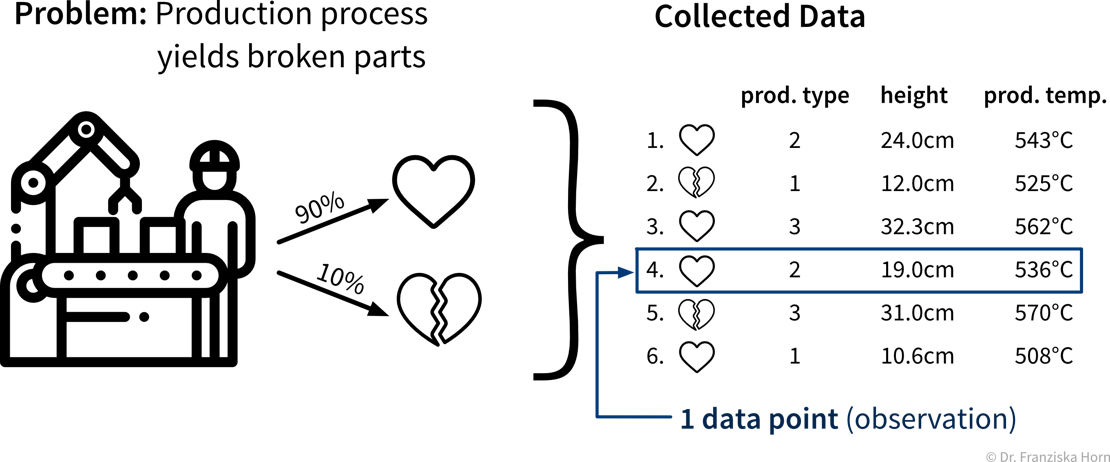

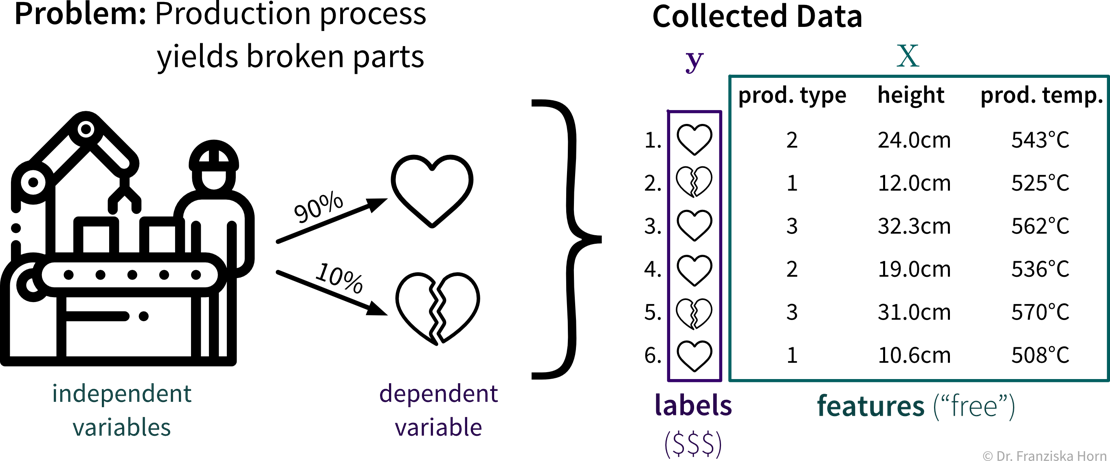

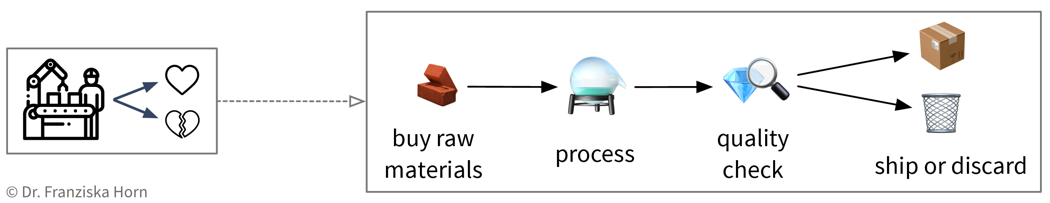

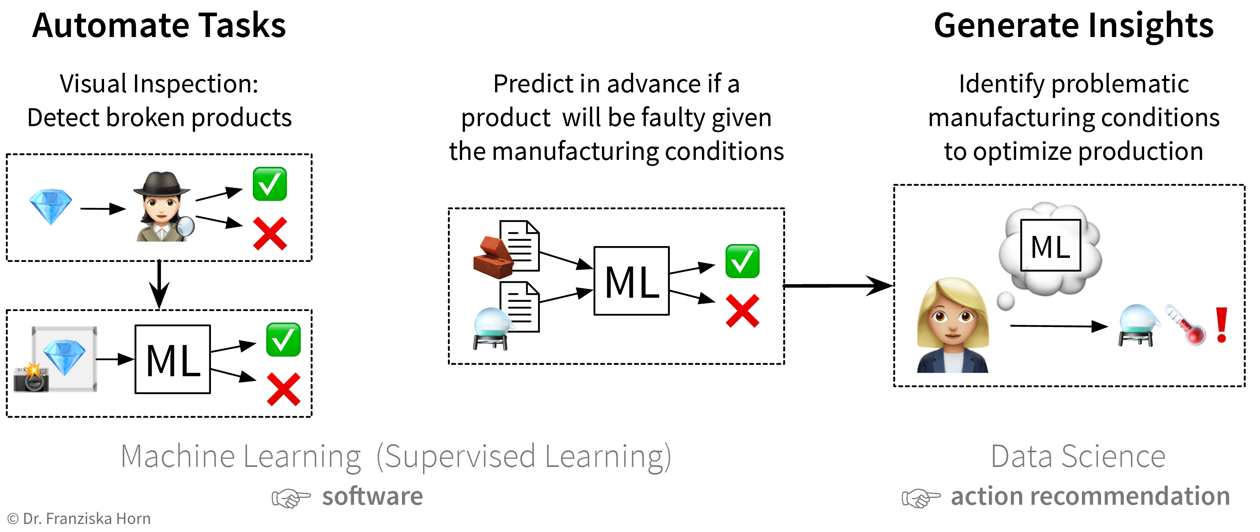

Create a process map: which steps are executed in the business process (flow of materials & information) and what data is collected where. For example, in a production process where some of the produced parts are defective:

-

Identify parts of the process that could either be automated with ML (e.g., straightforward, repetitive tasks otherwise done by humans) or in other ways improved by analyzing data (e.g., to understand root causes of a problem, to improve planning with what-if simulations, or to optimize the use of resources):

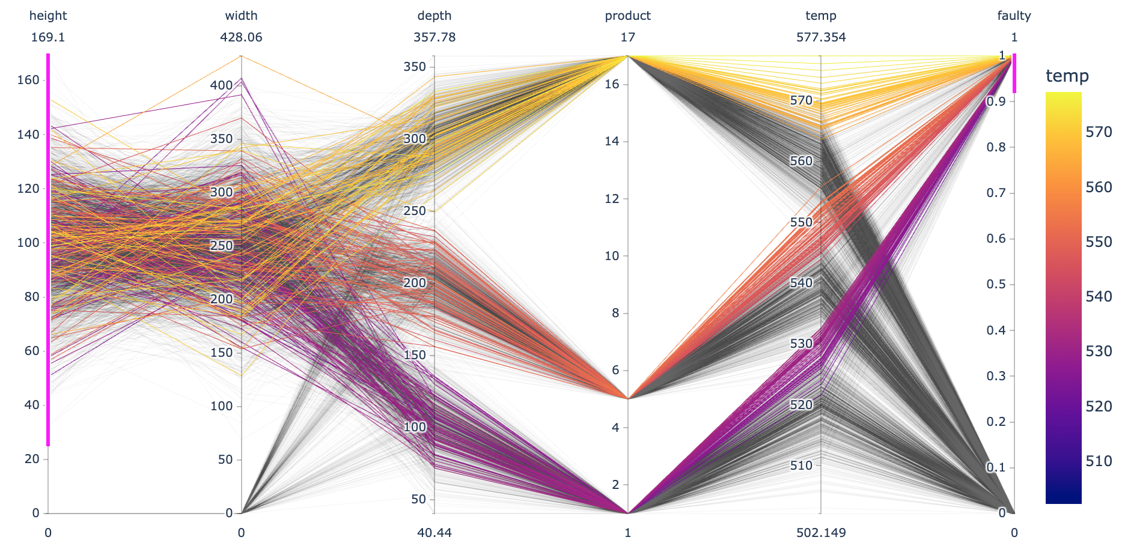

The first idea is to automate the quality check that was so far done by a human: since the human can easily recognize the defects in the pictures taken of the products, an ML model should be able to do this, too. The next idea is to try to predict in advance whether a product will be faulty or not based on the composition of raw materials and the proposed process conditions: success here is unclear, since the human experts are not sure whether all of the information necessary to determine if the product will be fine is contained in this data — but nevertheless it’s worth a try since this could save lots of resources. While the final ML model that solves the input-output problem can be deployed as software in the ongoing process, when a data scientist analyzes the results and interprets the model, she can additionally generate insights that can be translated into action recommendations.

The first idea is to automate the quality check that was so far done by a human: since the human can easily recognize the defects in the pictures taken of the products, an ML model should be able to do this, too. The next idea is to try to predict in advance whether a product will be faulty or not based on the composition of raw materials and the proposed process conditions: success here is unclear, since the human experts are not sure whether all of the information necessary to determine if the product will be fine is contained in this data — but nevertheless it’s worth a try since this could save lots of resources. While the final ML model that solves the input-output problem can be deployed as software in the ongoing process, when a data scientist analyzes the results and interprets the model, she can additionally generate insights that can be translated into action recommendations. -

Prioritize: which project will have a high impact, but at the same time also a good chance of success, i.e., should yield a high return on investment (ROI)? For example, using ML to automate a simple task is a comparatively low risk investment, but might cause some assembly-line workers to loose their jobs. In contrast, identifying the root causes of why a production process results in 10% scrap could save millions, but it is not clear from the start that such an analysis will yield useful results, since the collected data on the process conditions might not contain all the needed information.

-

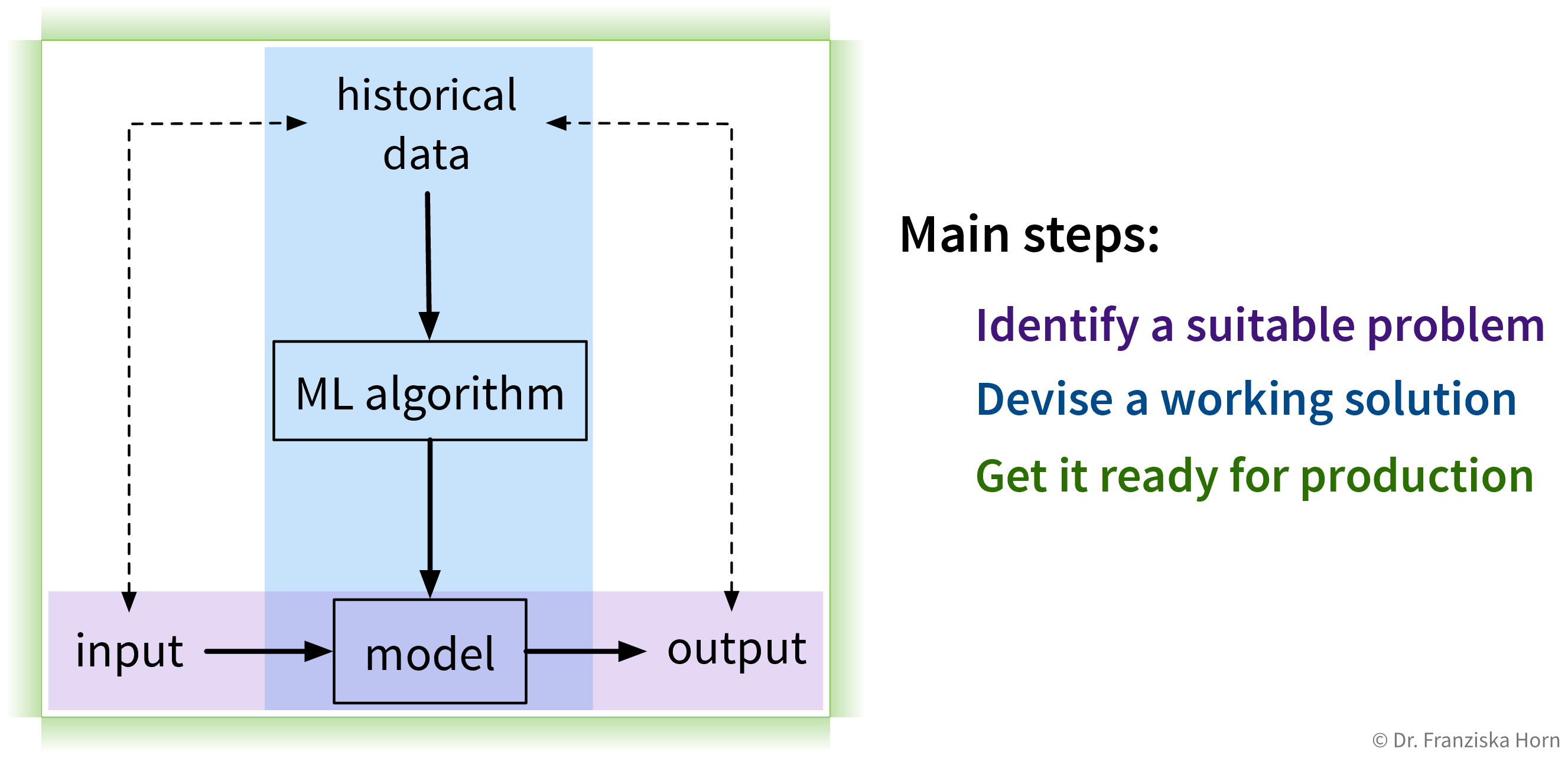

How do machines “learn”?

How do ML algorithms solve these “input → output” problems, i.e., how do they recognize patterns and learn rules from data?

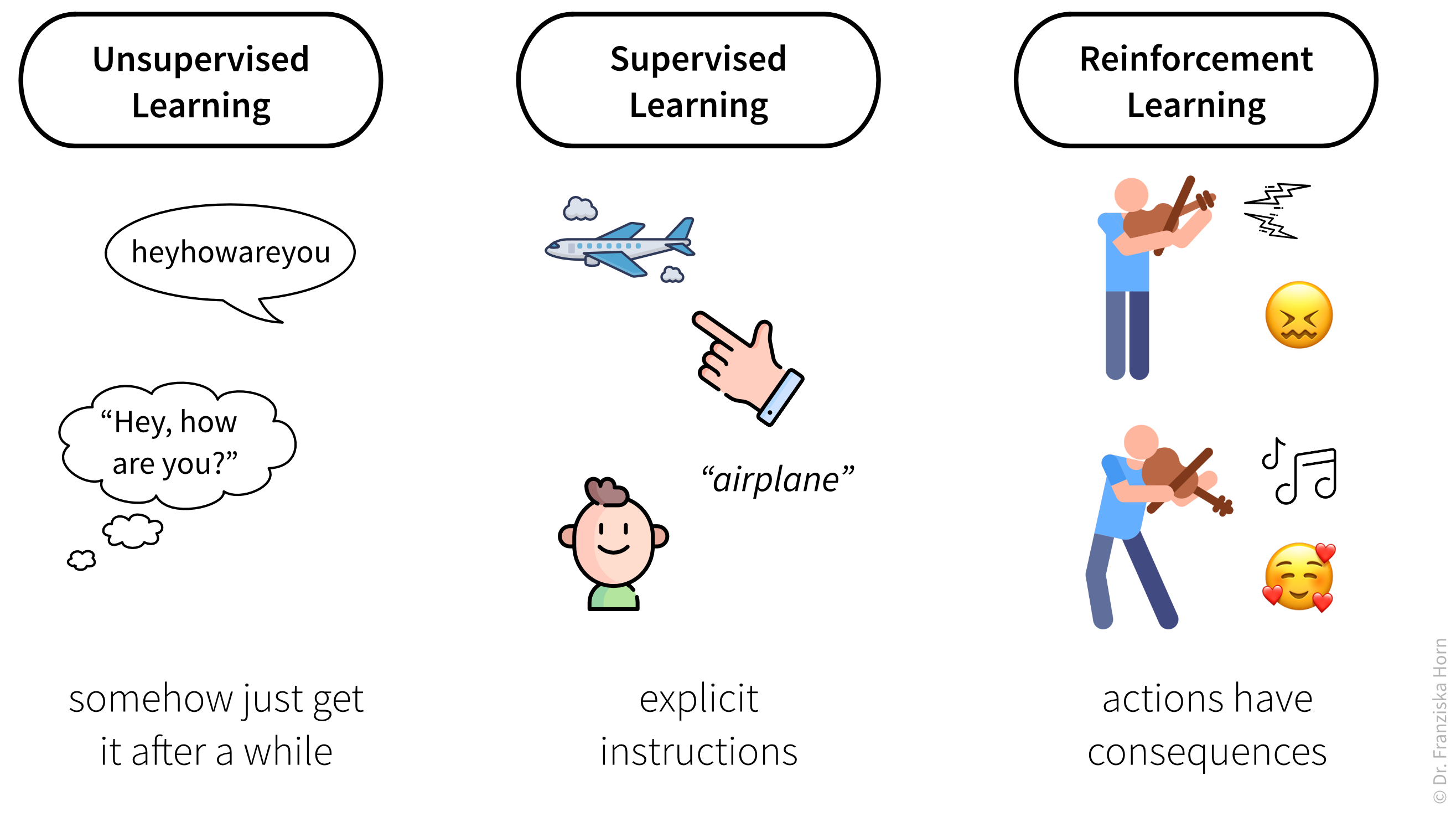

The set of ML algorithms can be subdivided according to their learning strategy. This is inspired by how humans learn:

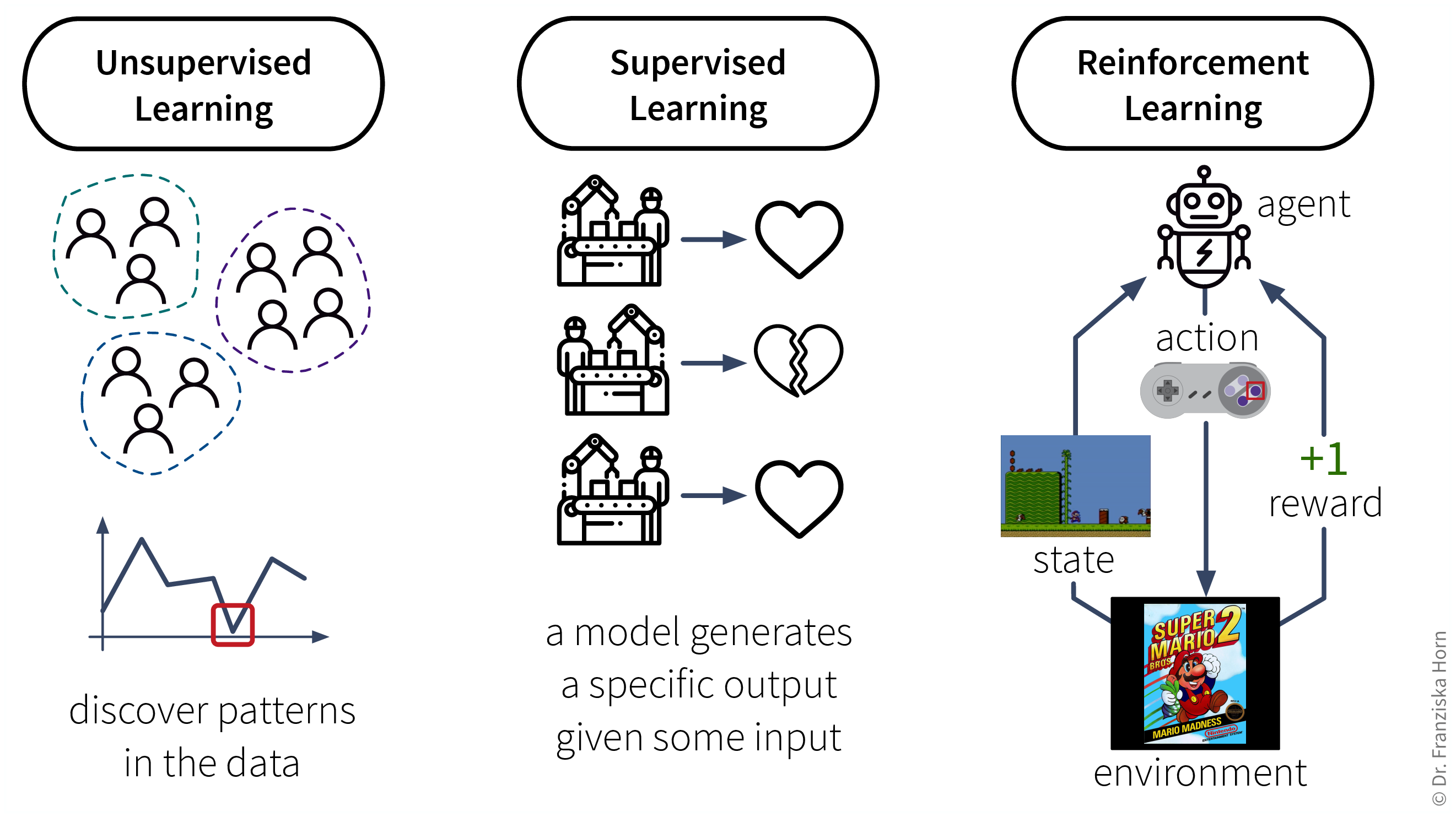

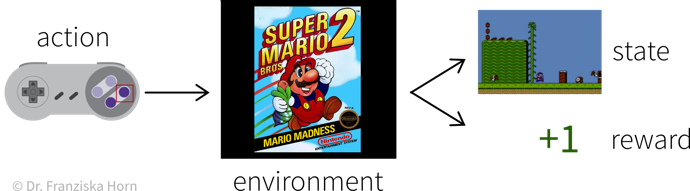

Analogously, machines can also learn by following these three strategies:

- Data requirements for learning according to these strategies:

-

-

Unsupervised Learning: a dataset with examples

-

Supervised Learning: a dataset with labeled examples

-

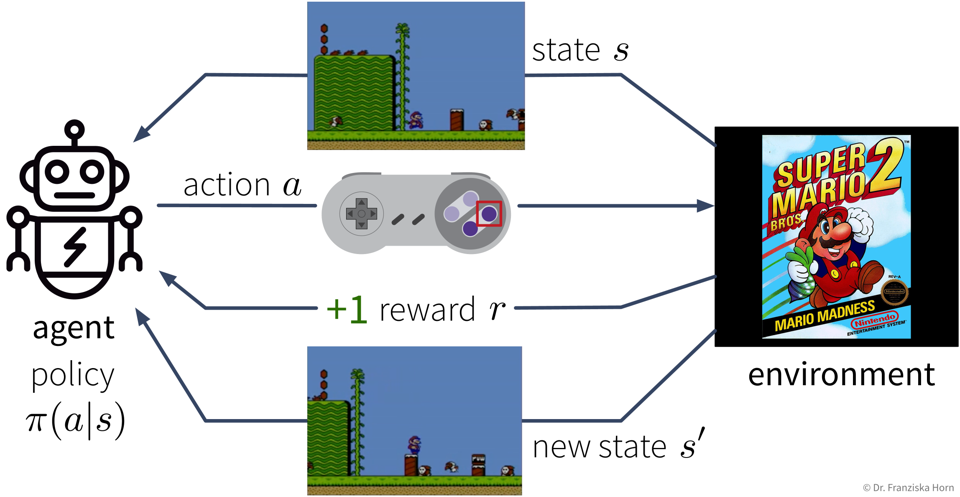

Reinforcement Learning: a (simulation) environment that generates data (i.e., reward + new state) in response to the agent’s actions

With its reliance on a data-generating environment, reinforcement learning is a bit of a special case. Furthermore, as of now it’s still really hard to get reinforcement learning algorithms to work correctly, which means they’re currently mostly used in research and not so much for practical applications.

-

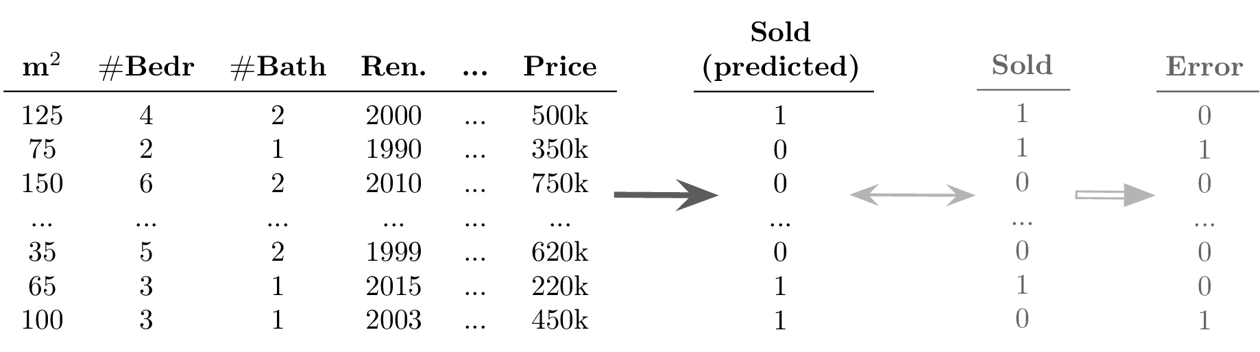

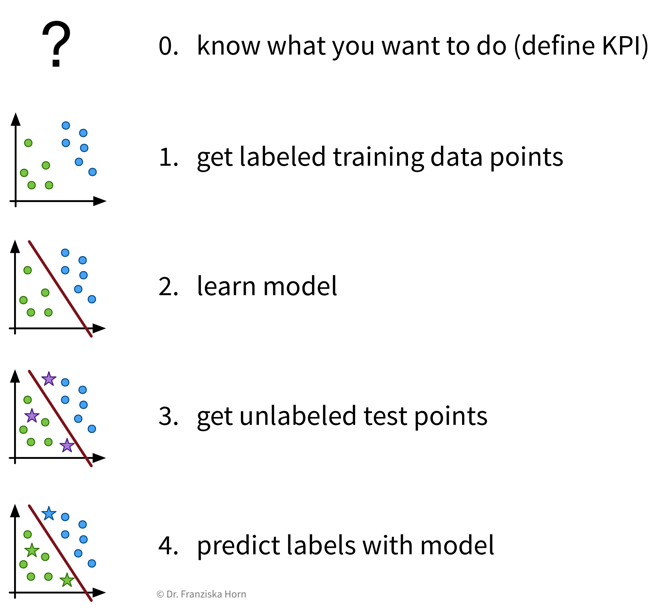

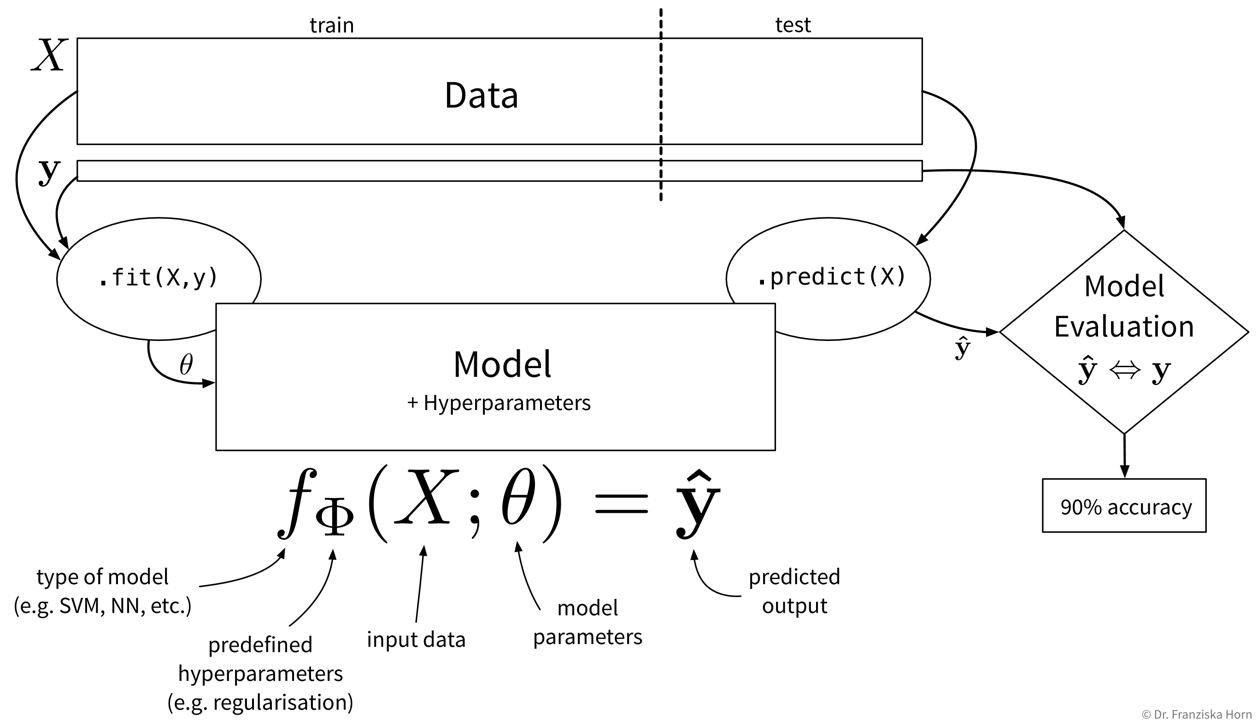

Supervised Learning

Supervised learning is the most common type of machine learning used in today’s applications.

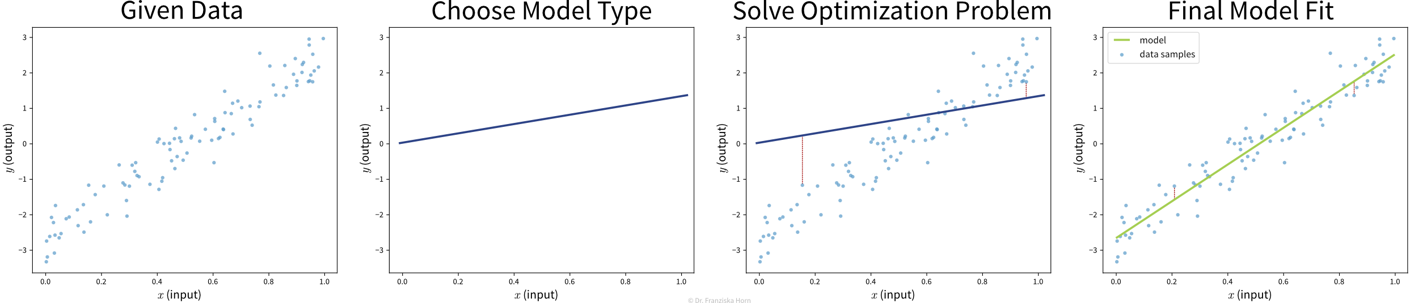

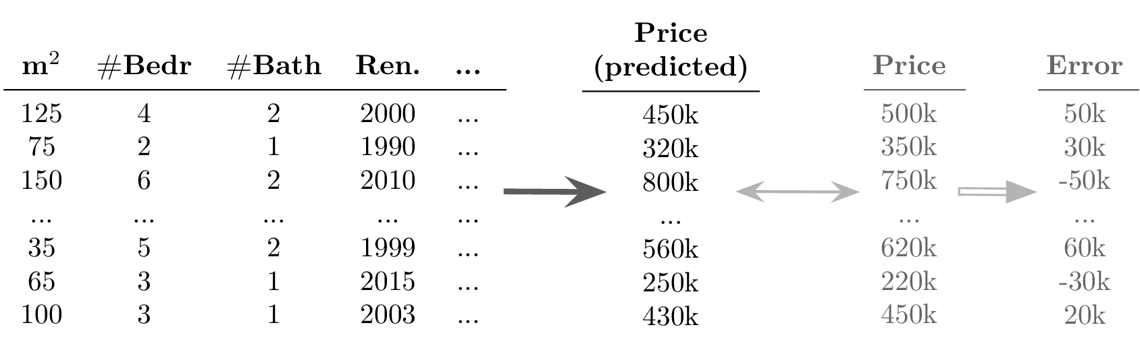

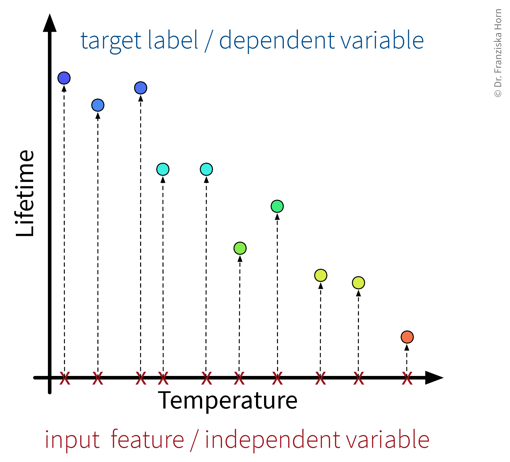

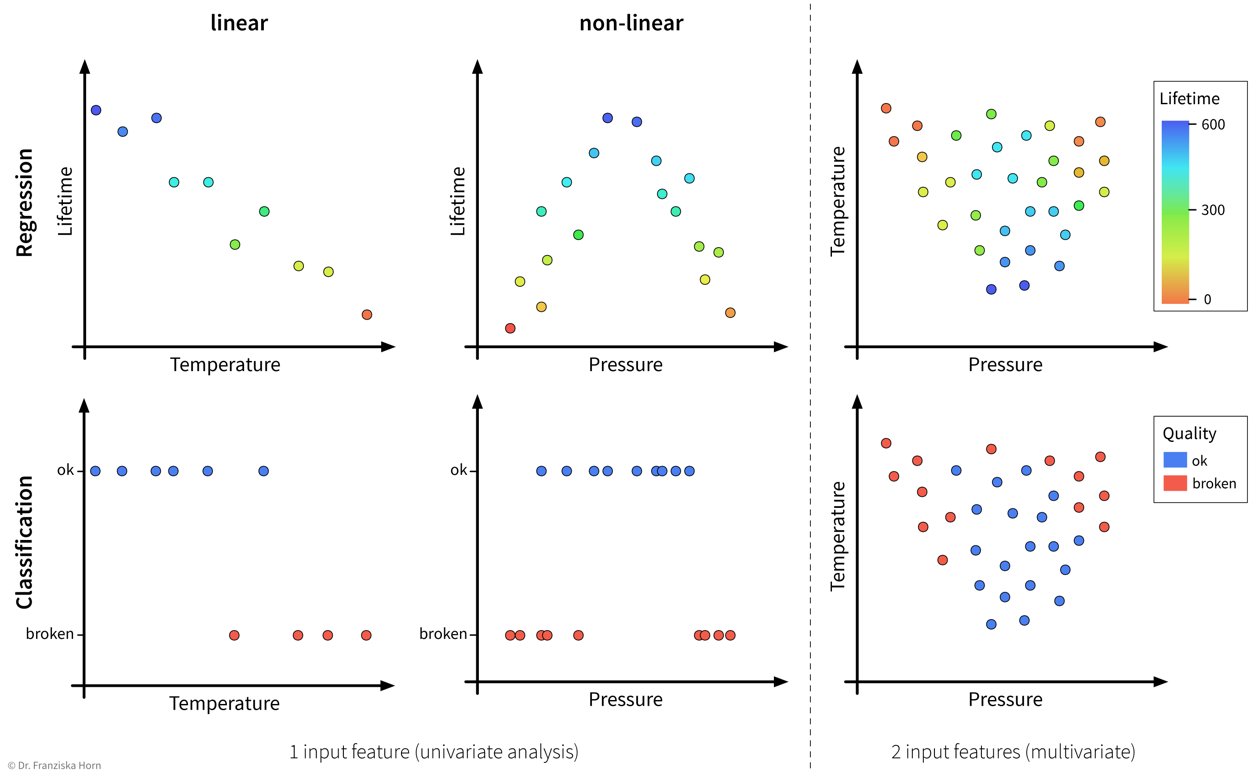

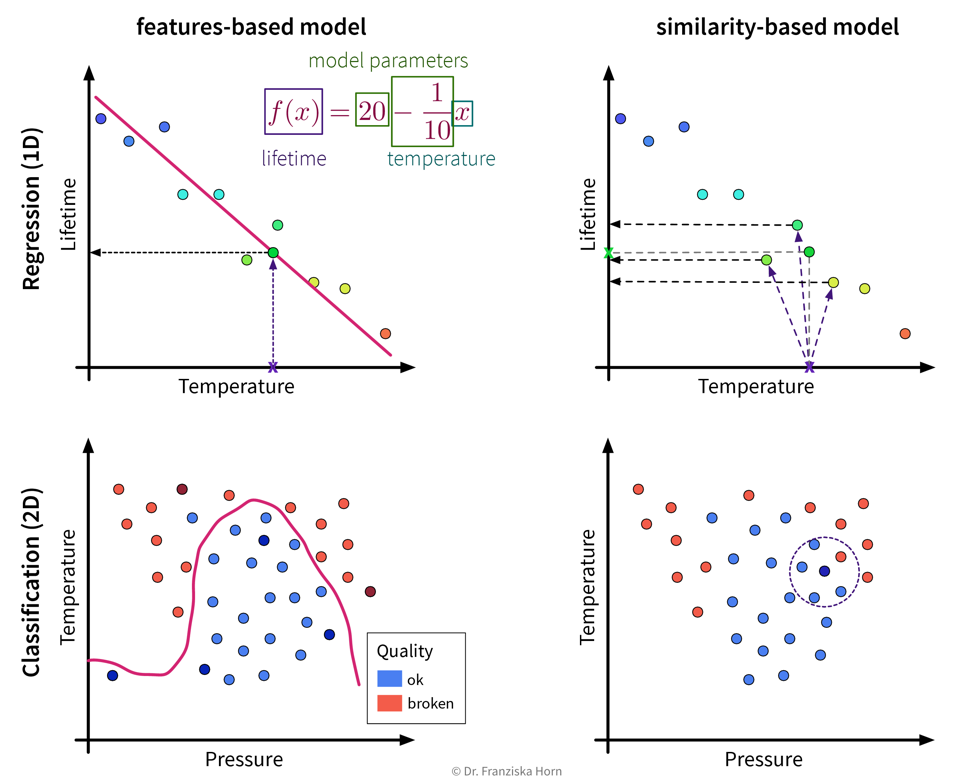

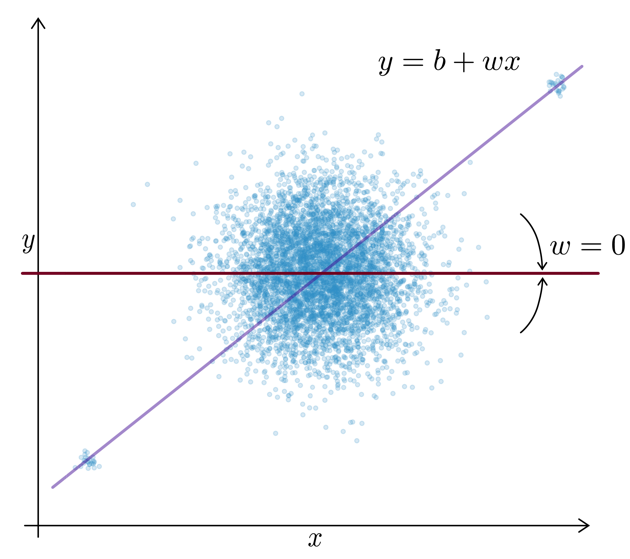

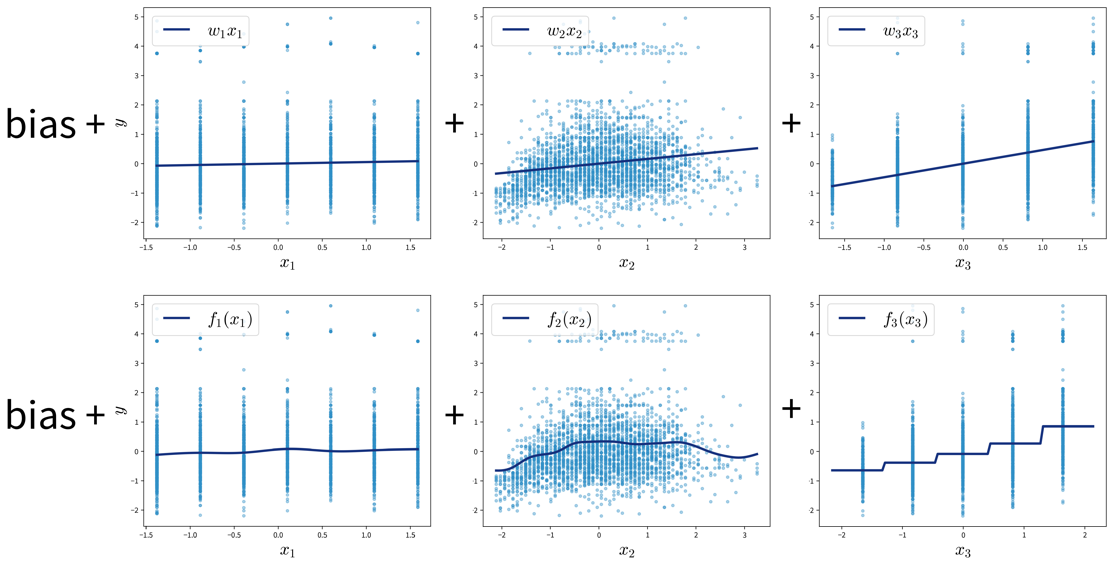

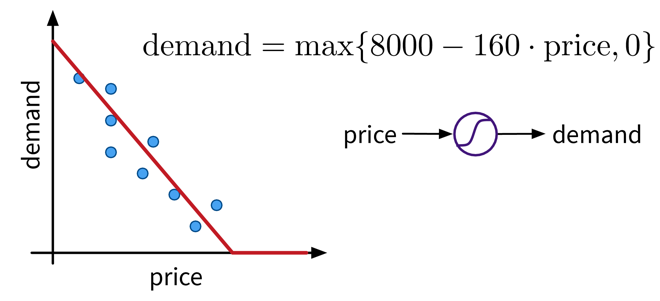

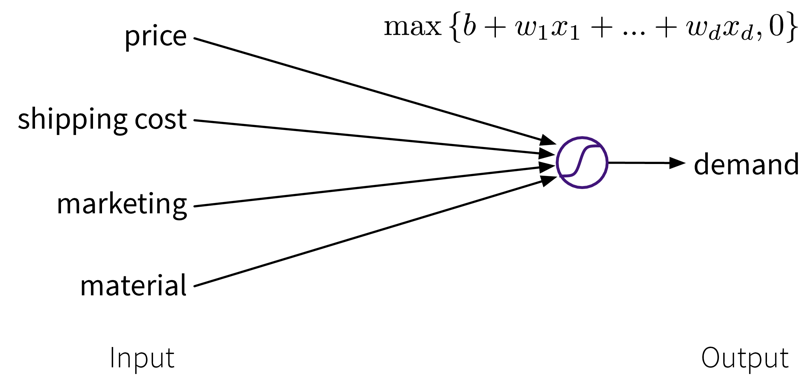

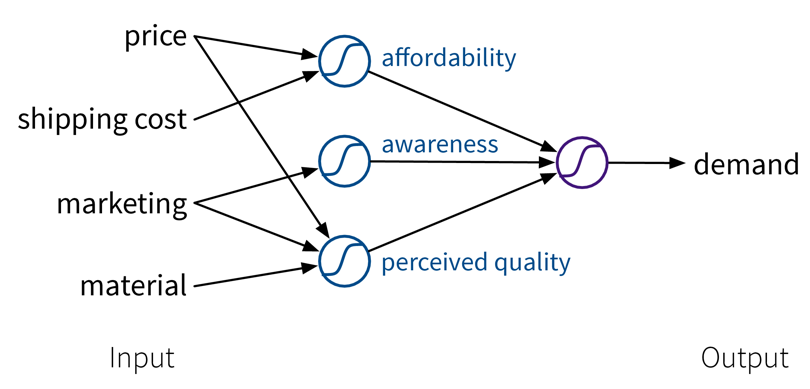

The goal here is to learn a model (= a mathematical function) \(f(x)\) that describes the relationship between some input(s) \(x\) (e.g., different process conditions like temperature, type of material, etc.) and output \(y\) (e.g., resulting product quality).

This model can then be used to make predictions for new data points, i.e., compute \(f(x') = y'\) for some new \(x'\) (e.g., predict for a new set of process conditions whether the produced product will be of high quality or if the process should be stopped to not waste resources).

- Supervised Learning in a nutshell:

Before we start, we need to be very clear on what we want, i.e., what should be predicted, how will predicting this variable help us achieve our overall goals and create value, and how do we measure success, i.e., what is the Key Performance Indicator (KPI) of our process. Then, we need to collect data — and since we’re using supervised learning, this needs to be labeled data, with the labels corresponding to the target variable that we want to predict. Next, we “learn” (or “train” or “fit”) a model on this data and finally use it to generate predictions for new data points.
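The collect, fit, and predict steps can be sketched in a few lines. This is only a minimal illustration, assuming scikit-learn and NumPy are installed: the toy data (process temperature as the input, a quality score as the label) is made up, and a linear regression model simply stands in for whatever model type suits the real problem.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Labeled historical data (made up for illustration): one input feature
# (process temperature) and the target variable we want to predict
# (a product quality score).
X_train = np.array([[150.0], [160.0], [170.0], [180.0], [190.0]])
y_train = np.array([70.0, 74.0, 78.0, 82.0, 86.0])

# "Learn" / "train" / "fit" the model on the labeled data.
model = LinearRegression()
model.fit(X_train, y_train)

# Use the fitted model to generate a prediction for a new data point.
X_new = np.array([[175.0]])
y_pred = model.predict(X_new)
print(f"predicted quality at 175 degrees: {y_pred[0]:.1f}")
```

Because the toy labels follow an exactly linear relationship, the prediction lands on that line; with real data, the prediction error would have to be measured against the KPI defined up front.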


| Video Recommendation: If you’re not familiar with linear regression, the most basic supervised learning algorithm, please watch the explanation from Google decision scientist Cassie Kozyrkov on how linear regression works: [Part 1] [Part 2] [Part 3] |

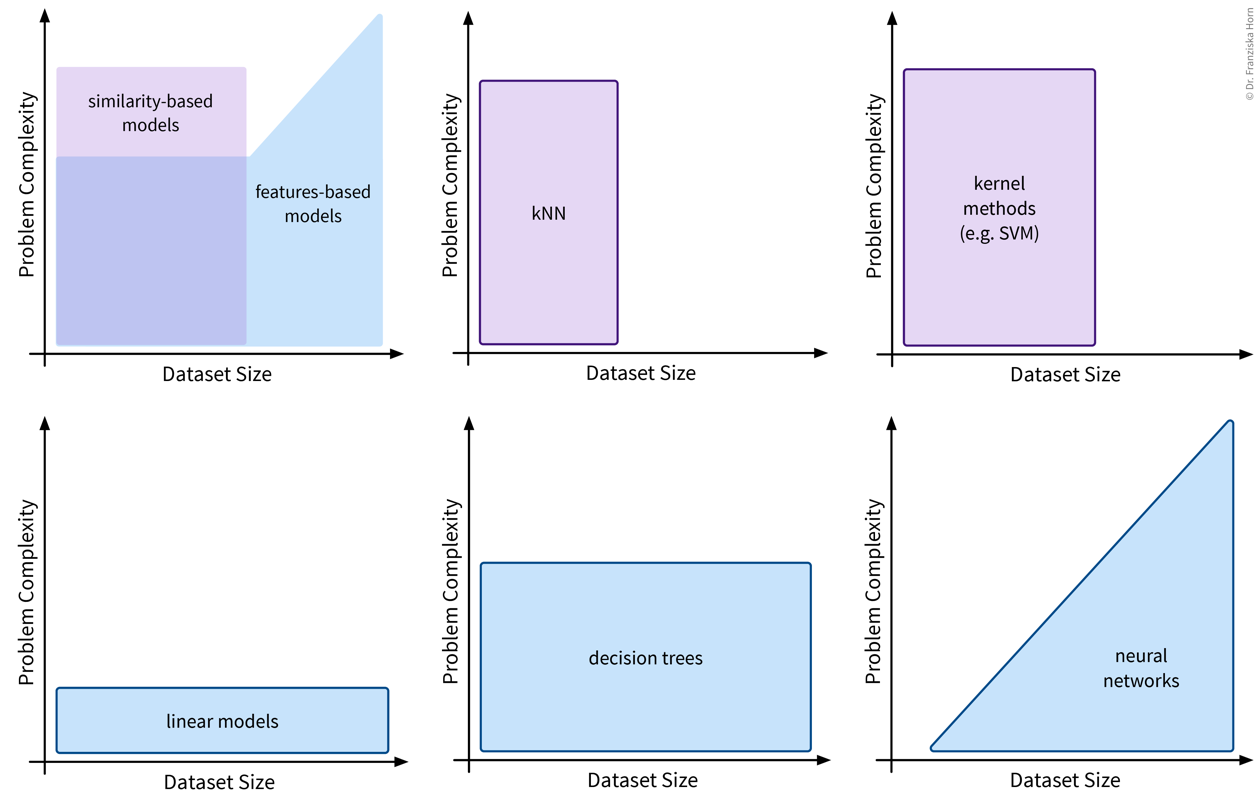

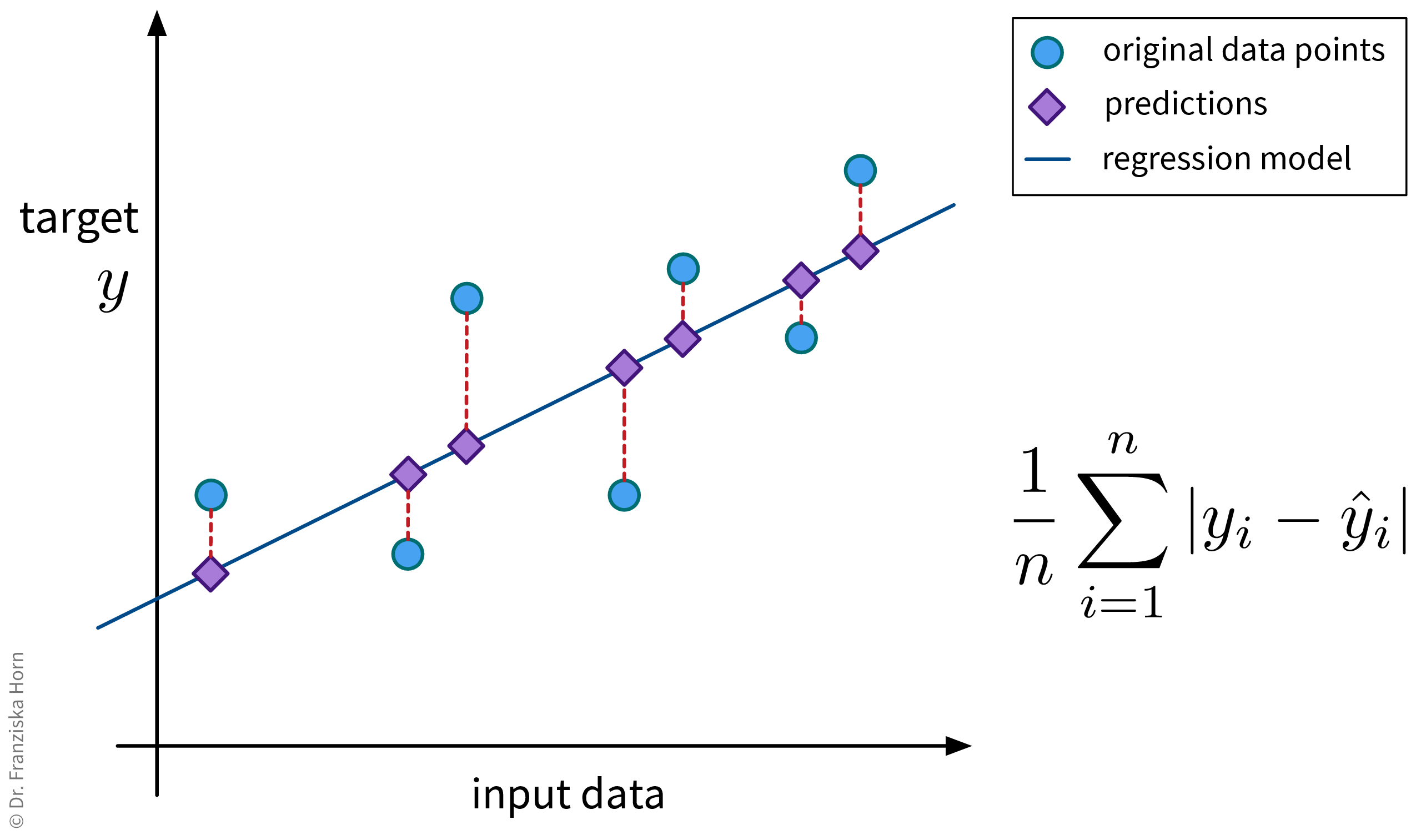

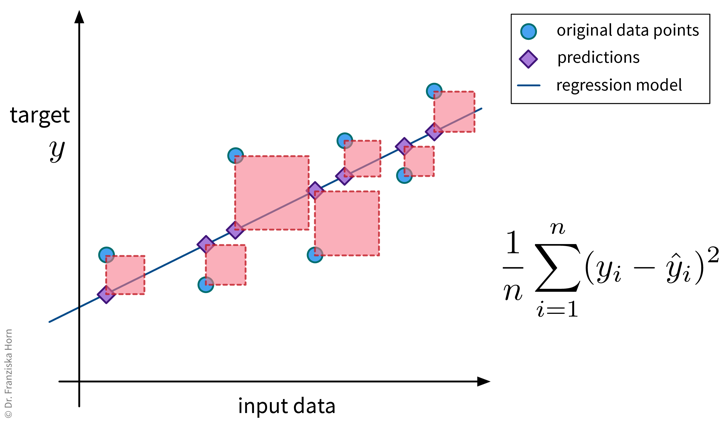

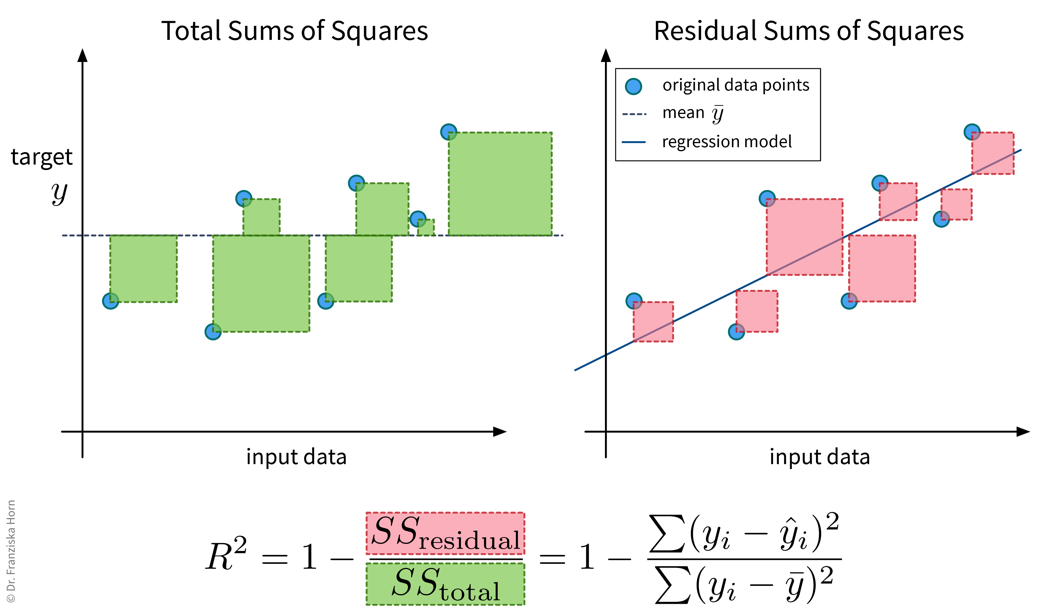

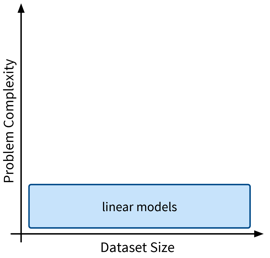

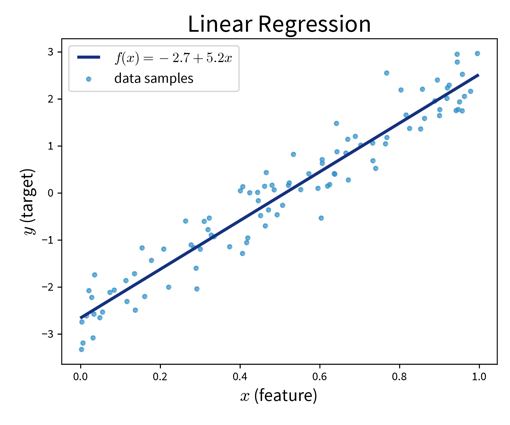

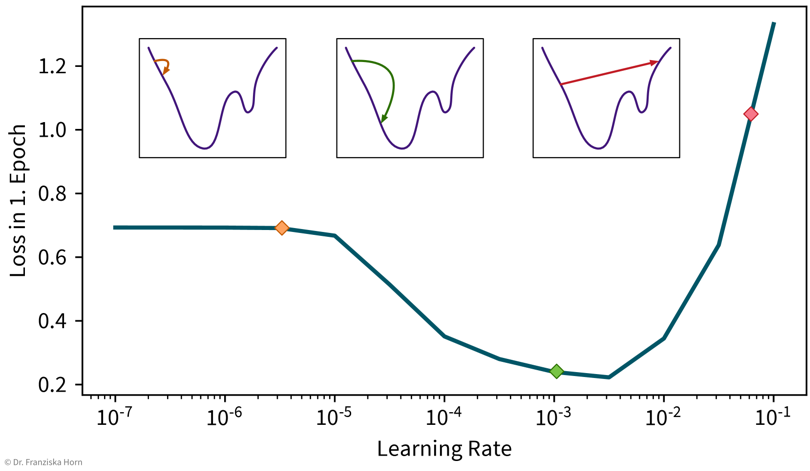

The available supervised learning algorithms differ in the type of \(x \to y\) relationship they can describe (e.g., linear or nonlinear) and what kind of objective they minimize (also called loss function; an error computed on the training data, quantifying the mismatch between true and predicted labels). The task of a data scientist is to select a type of model that can optimally fit the given data. The rest is then taken care of by an optimization method, which finds the parameters of the model that minimize the model’s objective, i.e., such that the model’s prediction error on the given data is as small as possible.
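To illustrate how an optimization method finds the parameters that minimize an objective, here is a small sketch in plain Python. It assumes a linear model \(f(x) = w \cdot x + b\), the mean squared error as the loss function, and gradient descent as the optimizer. These are illustrative choices, not the only options, and the learning rate and number of steps are hand-picked for this toy data.

```python
def mse_loss(w, b, xs, ys):
    """Mean squared error between true labels and the model's predictions."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_linear_model(xs, ys, learning_rate=0.05, n_steps=2000):
    """Find w and b that minimize the MSE using plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(n_steps):
        # Gradients of the MSE with respect to the two parameters.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Take a small step in the direction that reduces the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Toy training data generated from y = 2x + 1 (made up for illustration).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fit_linear_model(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}, loss = {mse_loss(w, b, xs, ys):.6f}")
```

The data scientist's choice here was the model type (a straight line) and the loss; the loop then does the rest by adjusting the parameters until the error on the training data is as small as possible.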

| In most of the book, the terms “ML algorithm” and “ML model” will be used interchangeably. To be more precise, however, in general the algorithm processes the data and learns some parameter values. These parameter settings define the final model. For example, a linear regression model is defined by its coefficients (i.e., the model’s parameters), which are found by executing the steps outlined in the linear regression algorithm, which includes solving an optimization problem. |

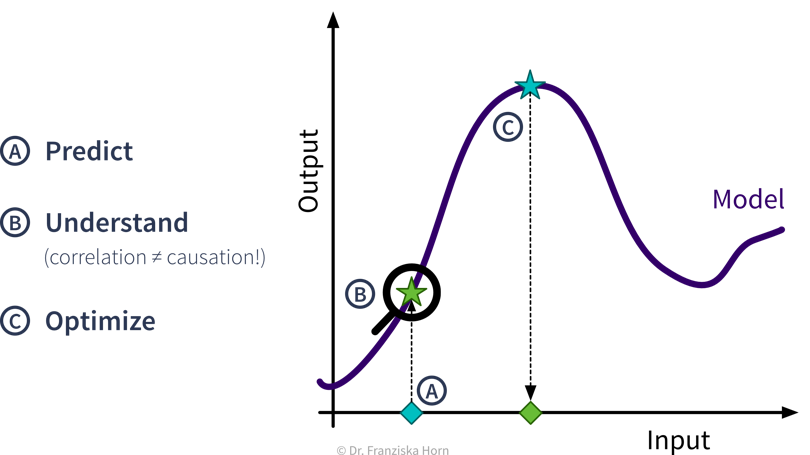

- Predictive Analytics

-

By feeding historical data to a supervised learning algorithm, we can generate a predictive model that makes predictions about future scenarios to aid with planning.

Example: Use sales forecasts to better plan inventory levels. - Interpreting Predictive Models

-

Given a model that makes accurate predictions for new data points, we can interpret this model and explain its predictions to understand root causes in a process.

Example: Given a model that predicts the quality of a product from the process conditions, identify which conditions result in lower quality products. - What-if Analysis & Optimization

-

Given a model that makes accurate predictions for new data points, we can use this model in a “what-if” forecast to explore how a system might react to different conditions to make better decisions (but use with caution!).

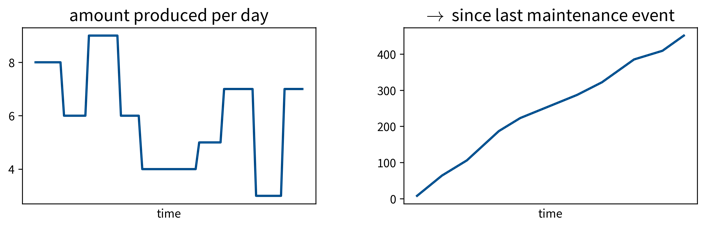

Example: Given a model that predicts the remaining lifetime of a machine component under some process conditions, simulate how quickly this component would deteriorate if we changed the process conditions.Going one step further, this model can also be used inside an optimization loop to automatically evaluate different inputs with the model systematically to find optimal settings.

Example: Given a model that predicts the quality of a product from the process conditions, automatically determine the best production settings for a new type of raw material.
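A what-if forecast and a simple optimization loop could look roughly like the sketch below. The function `predict_quality` is a hypothetical stand-in for a trained model (its formula is invented for this example), and the grid of candidate settings is an assumption too. In practice, candidates should stay within the range of conditions seen during training, since predictions outside that range are exactly where caution is needed.

```python
from itertools import product


def predict_quality(temperature, pressure):
    """Hypothetical stand-in for the prediction of a trained model."""
    return 95.0 - 0.01 * (temperature - 180.0) ** 2 - 2.0 * (pressure - 3.0) ** 2


# What-if forecast: how would the predicted quality change
# if we raised the temperature from 170 to 200 degrees?
baseline = predict_quality(170.0, 3.0)
what_if = predict_quality(200.0, 3.0)
print(f"baseline: {baseline:.1f}, what-if: {what_if:.1f}")

# Optimization loop: systematically evaluate a grid of candidate settings
# with the model and keep the combination with the best predicted quality.
temperatures = range(150, 211, 5)
pressures = [1.0, 2.0, 3.0, 4.0, 5.0]
best_settings = max(
    product(temperatures, pressures),
    key=lambda settings: predict_quality(*settings),
)
print(f"best settings (temperature, pressure): {best_settings}")
```

A grid search is the simplest possible optimizer; for many input variables, a dedicated optimization method would be the more practical choice.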

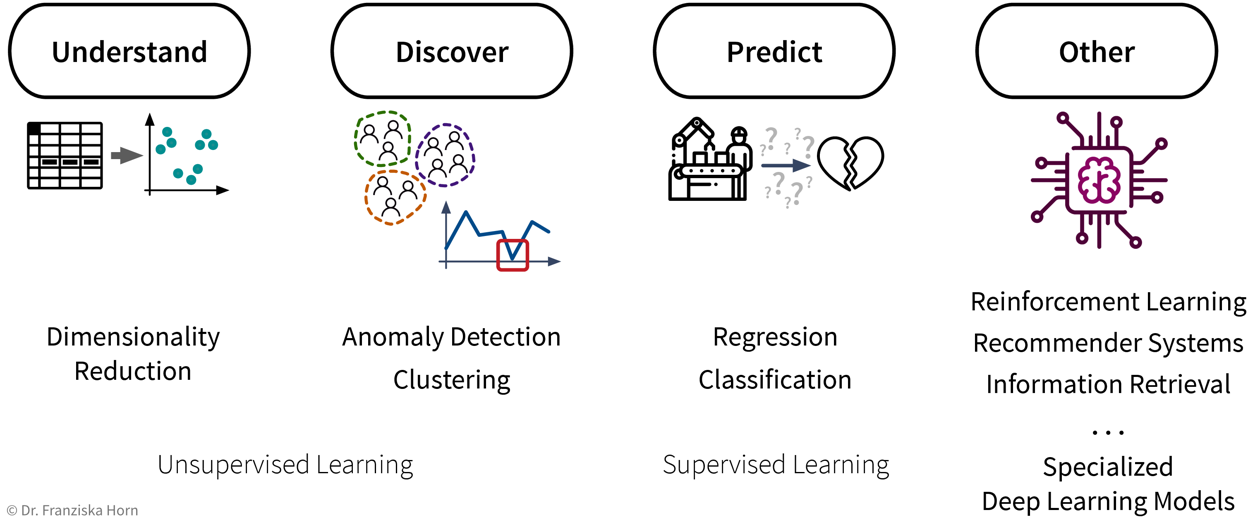

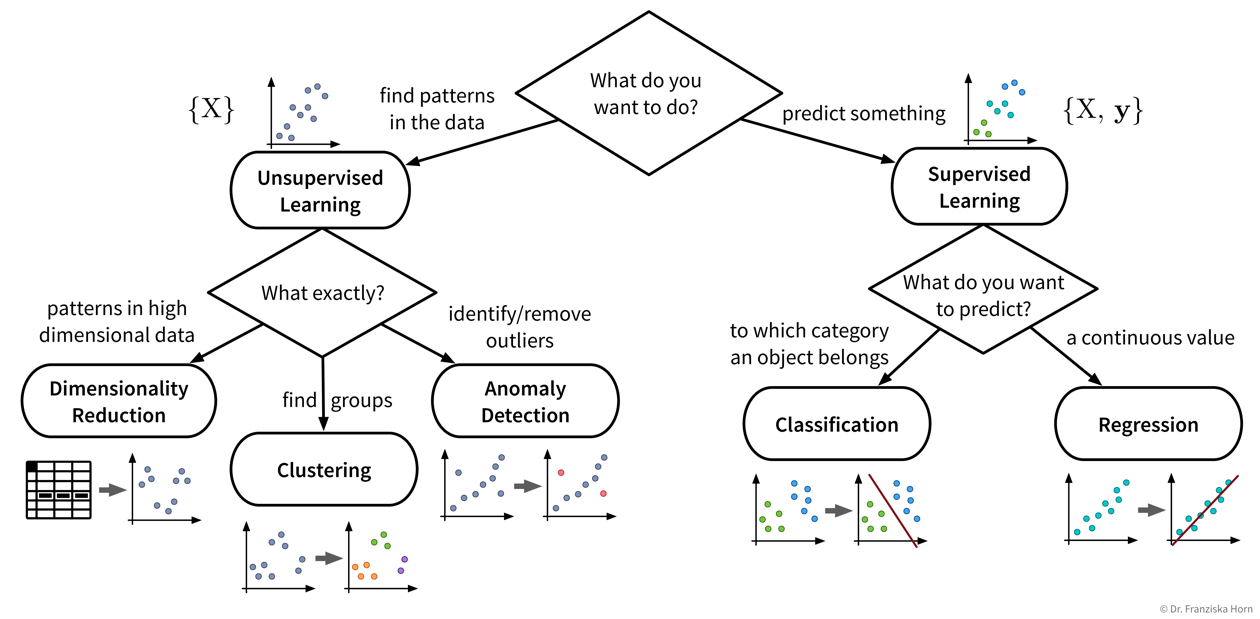

ML use cases

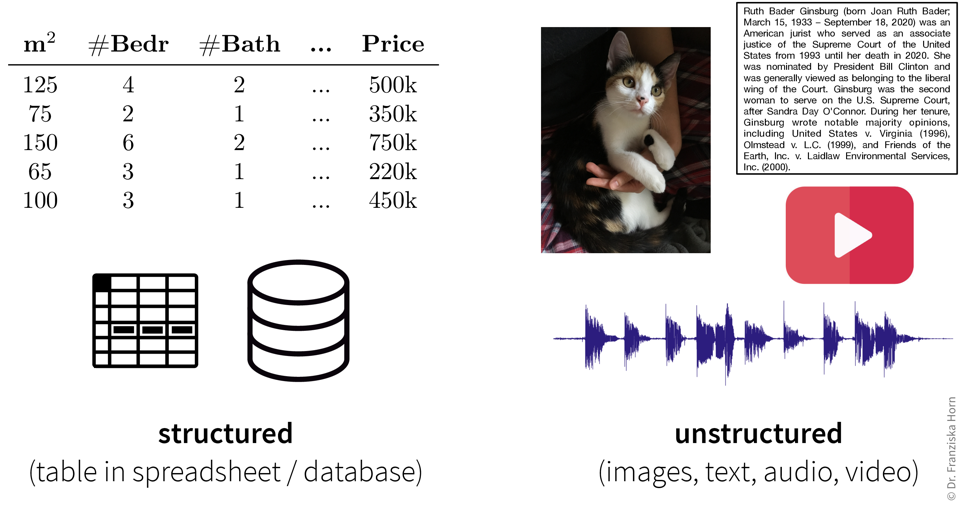

The inputs that the ML algorithms operate on can come in many forms…

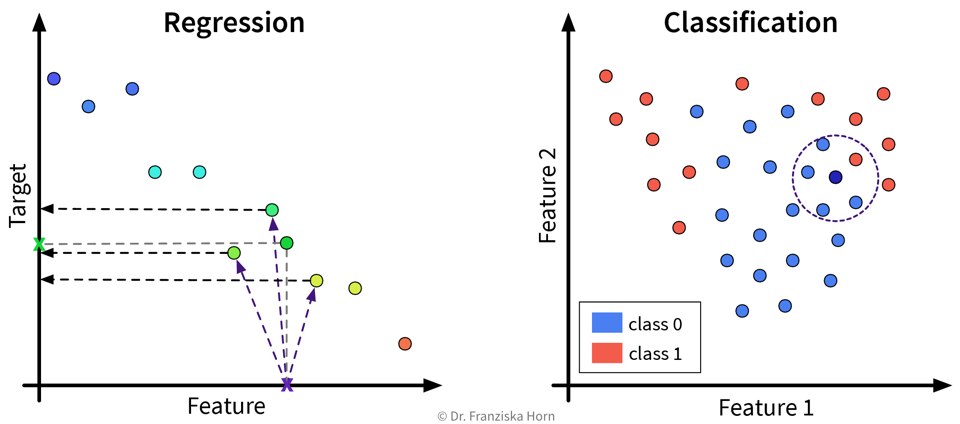

…but our goal, i.e., the desired outputs, determines the type of algorithm we should use for the task:

Some example input → output tasks and what type of ML algorithm solves them:

| Input \(X\) | Output \(Y\) | ML Algorithm Category |
|---|---|---|
| questionnaire answers | customer segmentation | clustering |
| sensor measurements | everything normal? | anomaly detection |
| past usage of a machine | remaining lifetime | regression |
| email | spam (yes/no) | classification (binary) |
| image | which animal? | classification (multi-class) |
| user’s purchases | products to show | recommender systems |
| search query | relevant documents | information retrieval |
| audio | text | speech recognition |
| text in English | text in French | machine translation |

To summarize (see also: overview table as PDF):

- Existing ML solutions & corresponding output (for one data point):

-

-

Dimensionality Reduction: (usually) 2D coordinates (to create a visualization of the dataset)

-

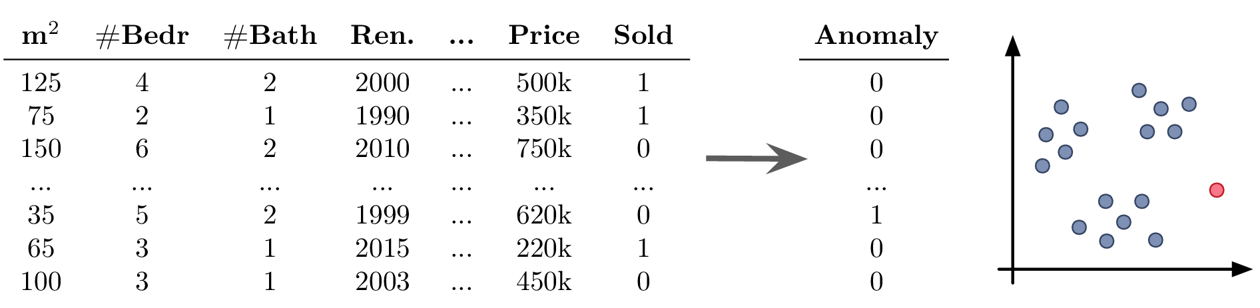

Outlier/Anomaly Detection: anomaly score (usually a value between 0 and 1 indicating how likely it is that this point is an outlier)

-

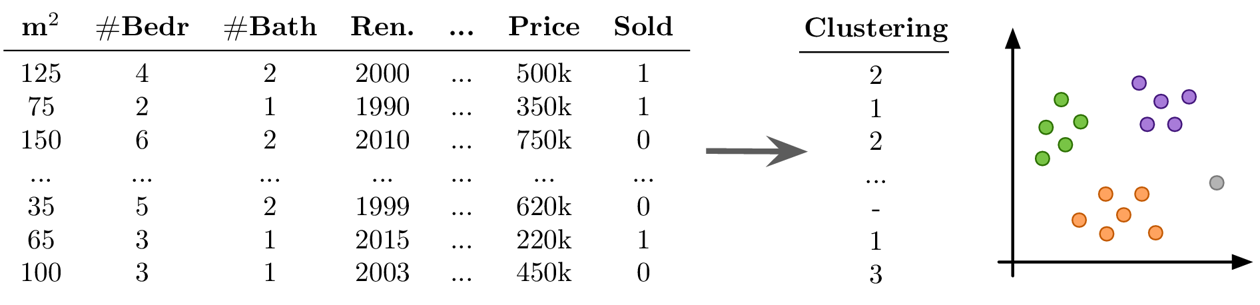

Clustering: cluster index (a number between 0 and k-1 indicating to which of the k clusters a data point belongs (or -1 for outliers))

-

Regression: a continuous value (any kind of numeric quantity that should be predicted)

-

Classification: a discrete value (one of several mutually exclusive categories)

-

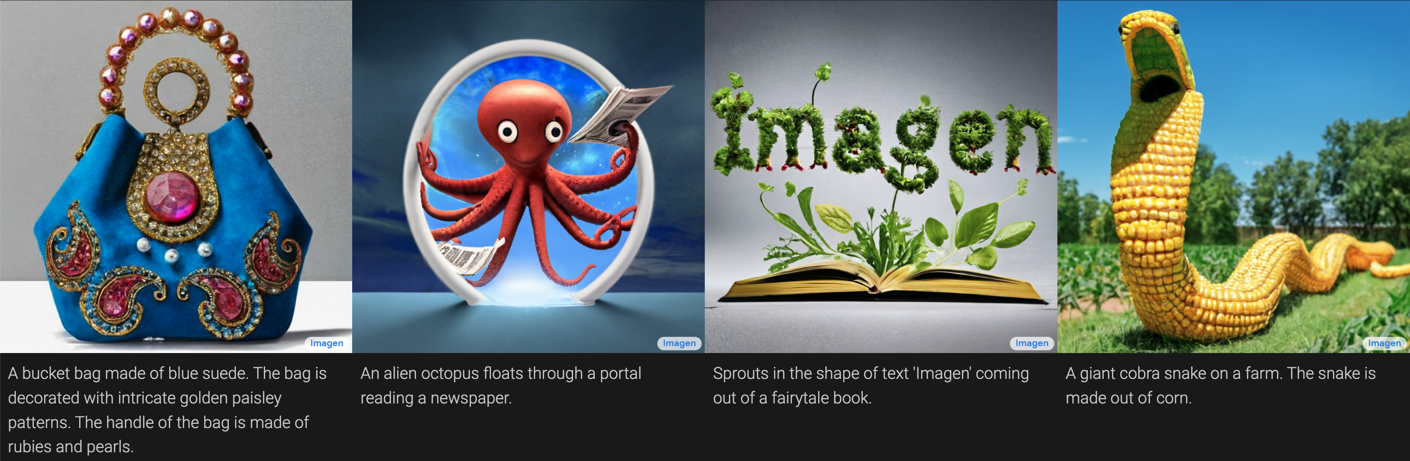

Deep Learning: unstructured output like a text or image (e.g., speech recognition, machine translation, image generation, or neural style transfer)

-

Recommender Systems & Information Retrieval: ranking of a set of items (recommender systems, for example, rank the products that a specific user might be most interested in; information retrieval systems rank other items based on their similarity to a given query item)

-

Reinforcement Learning: a sequence of actions (specific to the state the agent is in)

-
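To make some of these outputs concrete, the following sketch runs one scikit-learn model per category on a made-up toy dataset. The specific models (PCA, k-means, DBSCAN, linear and logistic regression) and all parameter values are just one possible choice per category, picked for illustration, and the sketch assumes scikit-learn and NumPy are installed.

```python
import numpy as np
from sklearn.cluster import DBSCAN, KMeans
from sklearn.decomposition import PCA
from sklearn.linear_model import LinearRegression, LogisticRegression

rng = np.random.default_rng(0)
# Toy dataset: two compact groups of 20 points each (4 features)
# plus one point that is far away from everything else.
group_a = rng.normal(loc=0.0, scale=0.3, size=(20, 4))
group_b = rng.normal(loc=5.0, scale=0.3, size=(20, 4))
X = np.vstack([group_a, group_b, [[50.0, 50.0, 50.0, 50.0]]])
X_groups = X[:40]  # the same data without the far-away point

# Dimensionality reduction: 2D coordinates for every data point.
coords_2d = PCA(n_components=2).fit_transform(X)
print("2D coordinates:", coords_2d.shape)  # (41, 2)

# Clustering: k-means returns an index between 0 and k-1;
# DBSCAN additionally labels points it considers noise with -1.
kmeans_labels = KMeans(n_clusters=2, n_init=10, random_state=0).fit_predict(X_groups)
dbscan_labels = DBSCAN(eps=2.0, min_samples=3).fit_predict(X)
print("k-means labels:", sorted(set(kmeans_labels.tolist())))
print("DBSCAN label of the far-away point:", dbscan_labels[-1])

# Regression: a continuous value. Classification: a discrete category.
y_continuous = 2.0 * X_groups[:, 0] + 1.0
y_class = np.array(["a"] * 20 + ["b"] * 20)
reg = LinearRegression().fit(X_groups, y_continuous)
clf = LogisticRegression().fit(X_groups, y_class)
x_new = np.array([[5.0, 5.0, 5.0, 5.0]])
print("regression output:", reg.predict(x_new)[0])
print("classification output:", clf.predict(x_new)[0])
```

The point of the sketch is only the type of each output: coordinates, an integer index, a number, or a category label.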

Let’s start with a more detailed look at the different unsupervised & supervised learning algorithms and what they are good for:

| Even if our ultimate goal is to predict something (i.e., use supervised learning), it can still be helpful to first use unsupervised learning to get a better understanding of the dataset, for example, by visualizing the data with dimensionality reduction methods to see all samples and their diversity at a glance, by identifying outliers to clean the dataset, or, for classification problems, by first clustering the samples to check whether the given class labels match the naturally occurring groups in the data or if, e.g., two very similar classes could be combined to simplify the problem. |

Dimensionality Reduction

- create a 2D visualization to explore the dataset as a whole, where we can often already visually identify patterns like samples that can be grouped together (clusters) or that don’t belong (outliers)

- noise reduction and/or feature engineering as a data preprocessing step to improve the performance in the following prediction task

- transforming the data with dimensionality reduction methods constructs new features as a (non)linear combination of the original features, which decreases the interpretability of the subsequent analysis results

Anomaly Detection

- clean up the data, e.g., by removing samples with wrongly entered values, as a data preprocessing step to improve the performance in the following prediction task

- create alerts for anomalies, for example:

  - fraud detection: identify fraudulent credit card transactions in e-commerce

  - monitor a machine to see when something out of the ordinary happens or the machine might require maintenance

- you should always have a good reason for throwing away data points: outliers are seldom random, and sometimes they reveal interesting edge cases that should not be ignored
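As one very simple way to compute an anomaly score, the sketch below uses a robust z-score based on the median and the median absolute deviation (MAD). This is a basic statistical technique chosen for illustration, not a full anomaly detection model; the sensor readings are made up, and the threshold of 3.5 is a commonly used convention that should be treated as an assumption.

```python
from statistics import median


def robust_z_scores(values):
    """Score each value by its distance from the median, measured in units of
    the median absolute deviation (MAD). The factor 0.6745 makes the score
    comparable to a standard z-score for normally distributed data."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        raise ValueError("MAD is zero: at least half of the values equal the median")
    return [0.6745 * (v - center) / mad for v in values]


# Made-up temperature readings with one suspicious value.
readings = [20.1, 19.8, 20.3, 20.0, 19.9, 20.2, 85.0, 20.1]
scores = robust_z_scores(readings)

THRESHOLD = 3.5  # assumed cut-off; tune for the application at hand
flagged = [i for i, score in enumerate(scores) if abs(score) > THRESHOLD]
print("indices of flagged readings:", flagged)
```

The flagged reading might be a typo, a sensor fault, or a real event worth investigating, so the score is better used to raise an alert for a human than to delete the point automatically.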

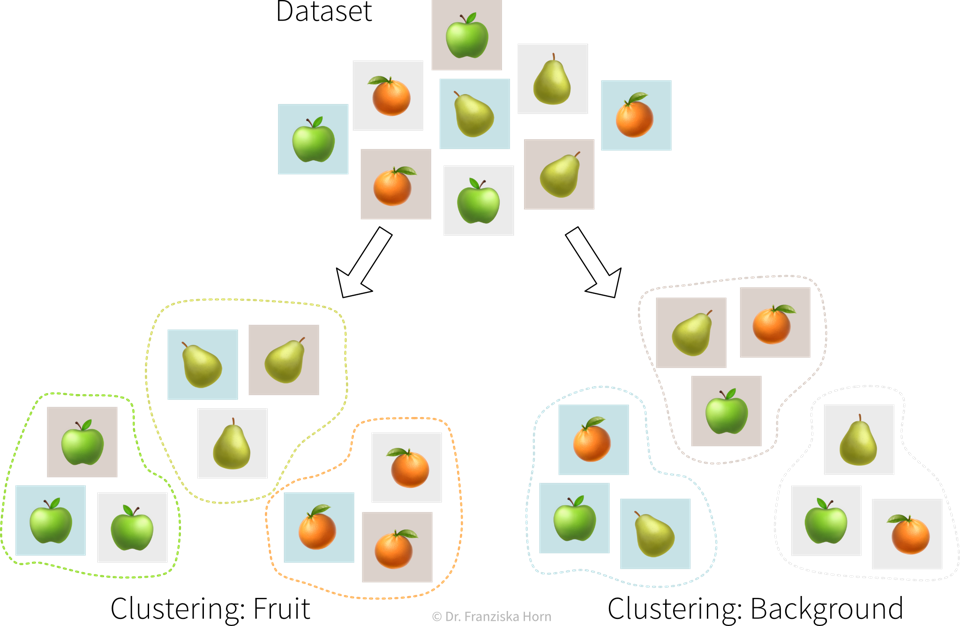

Clustering

- identify groups of related data points, for example:

  - customer segmentation for targeted marketing campaigns

- no ground truth: difficult to choose between different models and parameter settings → the algorithms will always find something, but whether this is useful (i.e., what the identified patterns mean) can only be determined by a human in a post-processing step

- many of the algorithms rely on similarities or distances between data points, and it can be difficult to define an appropriate measure for this or know in advance which features should be compared (e.g., what makes two customers similar?)
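How much the choice of distance measure matters can be seen in a tiny example. The sketch below (plain Python, with made-up customers described by age and yearly revenue) looks up the nearest neighbor of customer "A" twice: once with the raw features, where the revenue dominates the Euclidean distance because of its larger scale, and once after standardizing both features. Standardization is just one possible preprocessing choice, used here as an assumption.

```python
from math import dist
from statistics import mean, pstdev

# Made-up customers: (age in years, yearly revenue in EUR).
customers = {
    "A": (25, 50_000),
    "B": (60, 50_500),
    "C": (26, 52_000),
    "D": (45, 80_000),
    "E": (35, 20_000),
}


def standardize(points):
    """Rescale every feature to zero mean and unit standard deviation."""
    columns = list(zip(*points.values()))
    means = [mean(col) for col in columns]
    stds = [pstdev(col) for col in columns]
    return {
        name: tuple((x - m) / s for x, m, s in zip(values, means, stds))
        for name, values in points.items()
    }


def nearest_neighbor(points, name):
    """Return the other point with the smallest Euclidean distance."""
    others = (other for other in points if other != name)
    return min(others, key=lambda other: dist(points[name], points[other]))


print("raw features:", nearest_neighbor(customers, "A"))
print("standardized:", nearest_neighbor(standardize(customers), "A"))
```

With raw features, the 60-year-old customer "B" counts as most similar to the 25-year-old "A"; after standardization it is the 26-year-old "C". Neither answer is objectively right, which is why a human has to decide what "similar" should mean.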

Regression & Classification

-

Learn a model to describe an input-output relationship and make predictions for new data points, for example:

-

predict in advance whether a product produced under the proposed process conditions will be of high quality or would be a waste of resources

-

churn prediction: identify customers that are about to cancel their contract (or employees that are about to quit) so you can reach out to them and convince them to stay

-

price optimization: determine the optimal price for a product (often used for dynamic pricing, e.g., to adapt prices based on the device a customer uses (e.g., new iPhone vs old Android phone) when accessing a website)

-

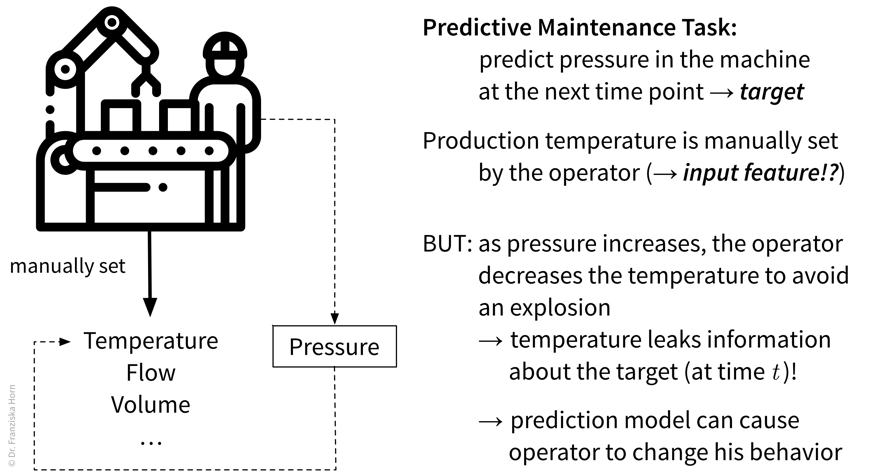

predictive maintenance: predict how long a machine component will last

-

sales forecasts: predict revenue in the coming weeks and how much inventory will be required to satisfy the demand

-

-

success is uncertain: while it is fairly straightforward to apply the models, it is difficult to determine in advance whether there even exists any relation between the measured inputs and targets (→ beware of garbage in, garbage out!)

-

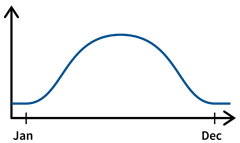

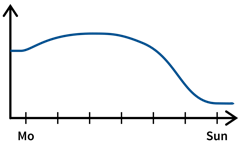

appropriate definition of the output/target/KPI that should be modeled, i.e., what does it actually mean for a process to run well and how might external factors influence this definition (e.g., can we expect the same performance on an exceptionally hot summer day?)

-

missing important input variables, e.g., if there exist other influencing factors that we haven’t considered or couldn’t measure, which means not all of the target variable’s variance can be explained

-

lots of possibly irrelevant input variables that require careful feature selection to avoid spurious correlations, which would result in incorrect ‘what-if’ forecasts since the true causal relationship between the inputs and outputs isn’t captured

-

often very time intensive data preprocessing necessary, e.g., when combining data from different sources and engineering additional features

Deep Learning

-

automate tedious, repetitive tasks otherwise done by humans, for example (see also ML is everywhere!):

-

text classification (e.g., identify spam / hate speech / fake news; forward customer support request to the appropriate department)

-

sentiment analysis (subtask of text classification: identify if text is positive or negative, e.g., to monitor product reviews or what social media users are saying about your company)

-

speech recognition (e.g., transcribe dictated notes or add subtitles to videos)

-

machine translation (translate texts from one language into another)

-

image classification / object recognition (e.g., identify problematic content (like child pornography) or detect street signs and pedestrians in autonomous driving)

-

image captioning (generate text that describes what’s shown in an image, e.g., to improve the online experience for for people with visual impairment)

-

predictive typing (e.g., suggest possible next words when typing on a smartphone)

-

data generation (e.g., generate new photos/images of specific objects or scenes)

-

style transfer (transform a given image into another style, e.g., make photos look like van Gogh paintings)

-

separate individual sources of an audio signal (e.g., unmix a song, i.e., separate vocals and instruments into individual tracks)

-

-

replace classical simulation models with ML models: since exact simulation models are often slow, the estimation for new samples can be speed up by instead predicting the results with an ML model, for example:

-

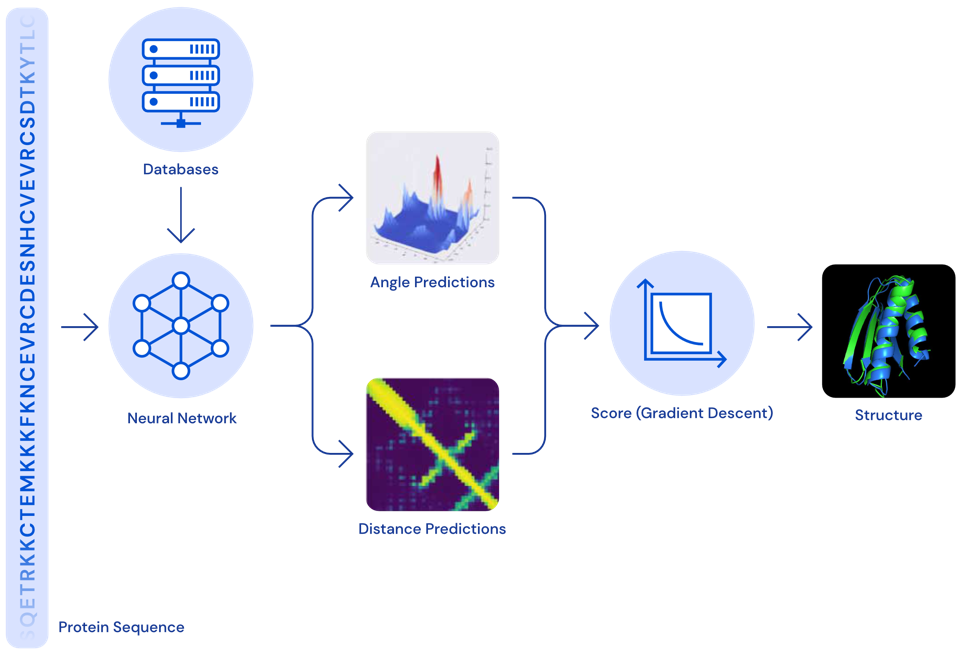

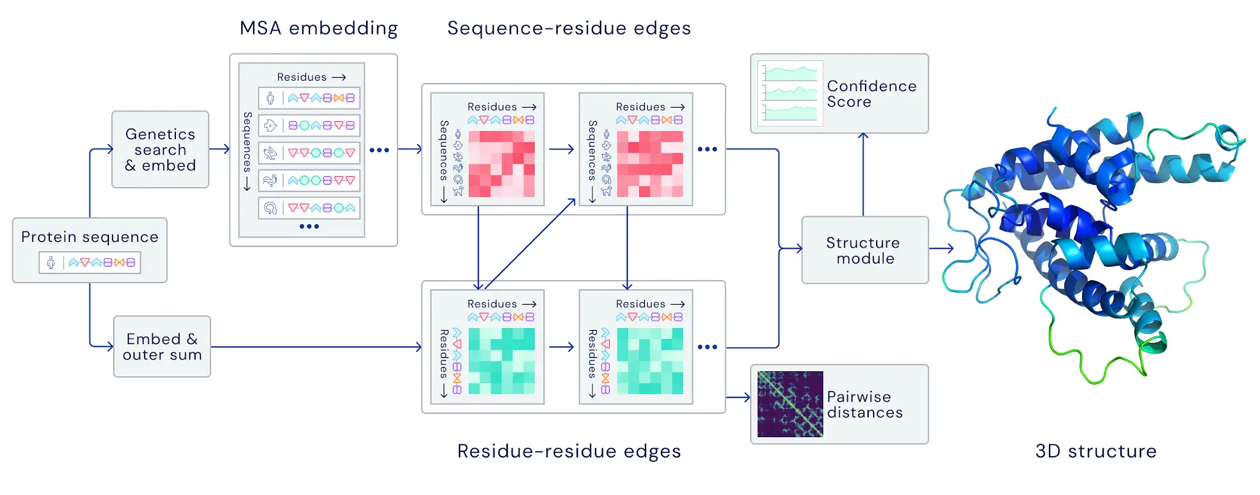

AlphaFold: generate 3D protein structure from amino acid sequence (to facilitate drug development)

-

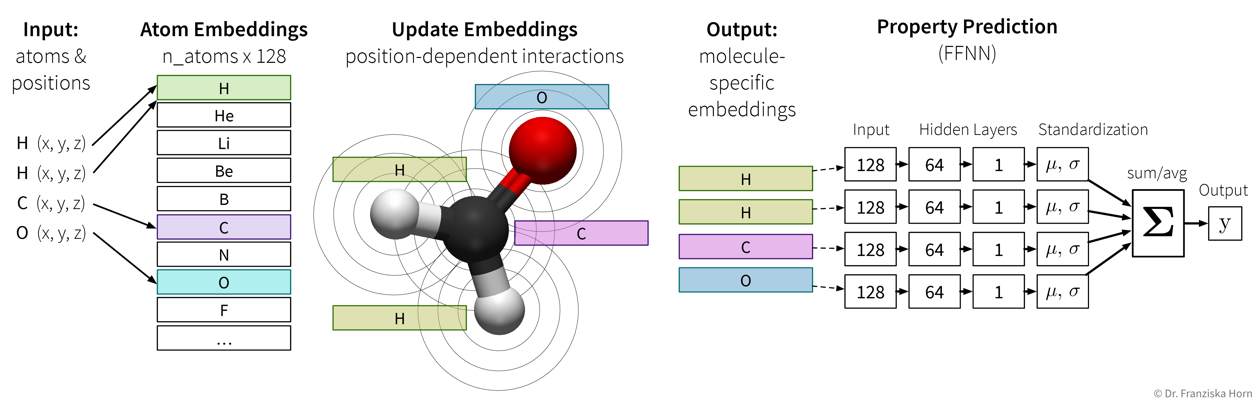

SchNet: predict energy and other properties of molecules given their configuration of atoms (to speed up materials research)

-

-

selecting a suitable neural network architecture & getting it to work properly; especially when replacing traditional simulation models it is often necessary to develop a completely new type of neural network architecture specifically designed for this task and inputs / outputs, which requires a lot of ML & domain knowledge, intuition, and creativity

-

computational resources (don’t train a neural network without a GPU!)

-

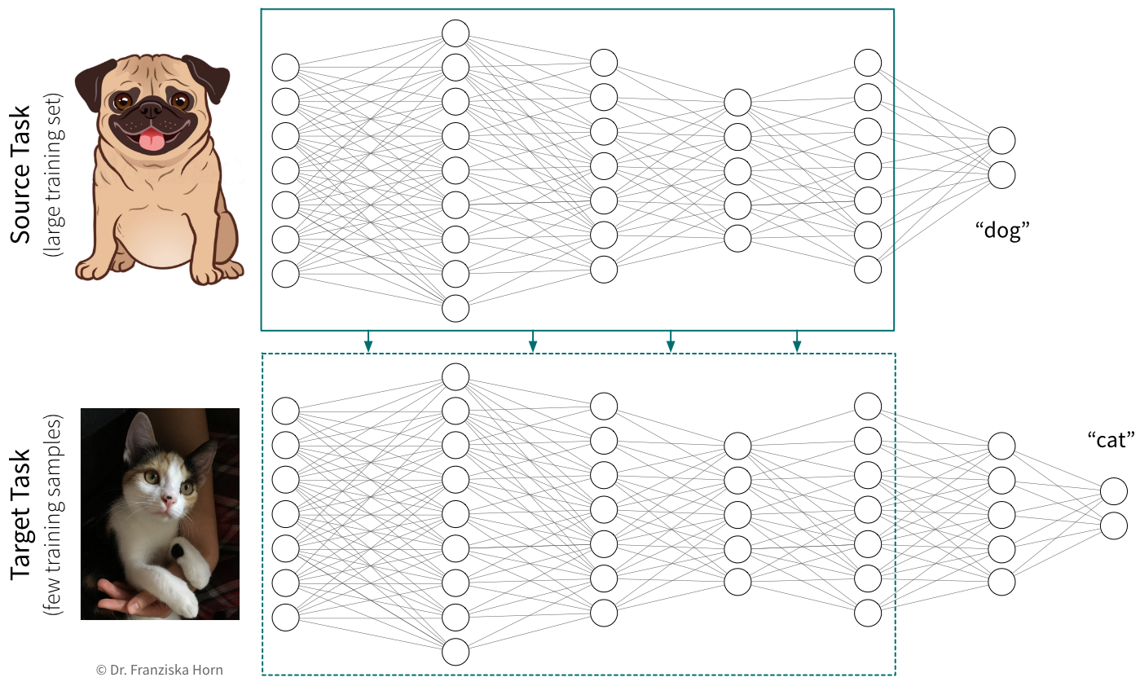

data quality and quantity: need a lot of consistently labeled data, i.e., many training instances labeled by human annotators who have to follow the same guidelines (but can be mitigated in some cases by pre-training the network using self-supervised learning)

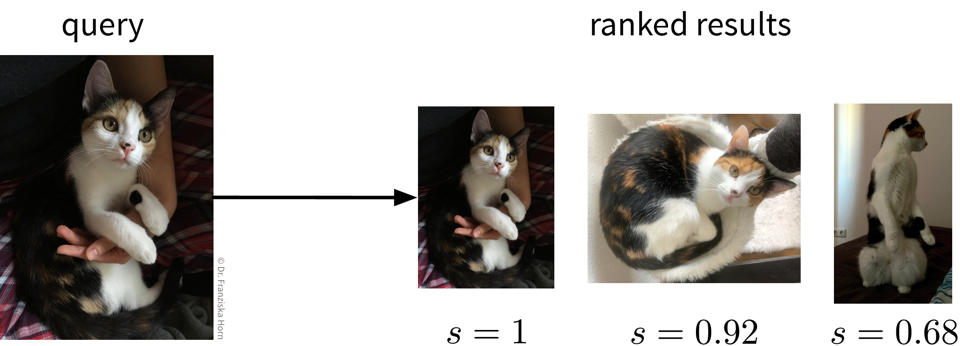

Information Retrieval

- improve search results by identifying similar items: given a query, rank results, for example:

  - return matching documents / websites given a search query

  - show similar movies given the movie a user is currently looking at (e.g., same genre, director, etc.)

- quality of results depends heavily on the chosen similarity metric; identifying semantically related items is currently more difficult for some data types (e.g., images) than others (e.g., text)
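A minimal ranking example, assuming a plain bag-of-words representation and the cosine similarity as the similarity metric (both are simple illustrative choices; the documents and the query are made up):

```python
from collections import Counter
from math import sqrt


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counter objects)."""
    dot = sum(a[word] * b[word] for word in a)  # missing words count as 0
    norm_a = sqrt(sum(count * count for count in a.values()))
    norm_b = sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_documents(query, documents):
    """Return document names sorted from most to least similar to the query."""
    query_vec = Counter(query.lower().split())
    scores = {
        name: cosine_similarity(query_vec, Counter(text.lower().split()))
        for name, text in documents.items()
    }
    return sorted(scores, key=scores.get, reverse=True)


documents = {
    "doc_a": "machine learning for image classification",
    "doc_b": "recipes for baking bread",
    "doc_c": "deep learning for image recognition",
}
ranking = rank_documents("image classification with machine learning", documents)
print(ranking)
```

Note that this metric only counts exact word matches: "recognition" and "classification" are treated as unrelated, which is one reason why the choice of similarity metric has such a strong effect on the quality of the results.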

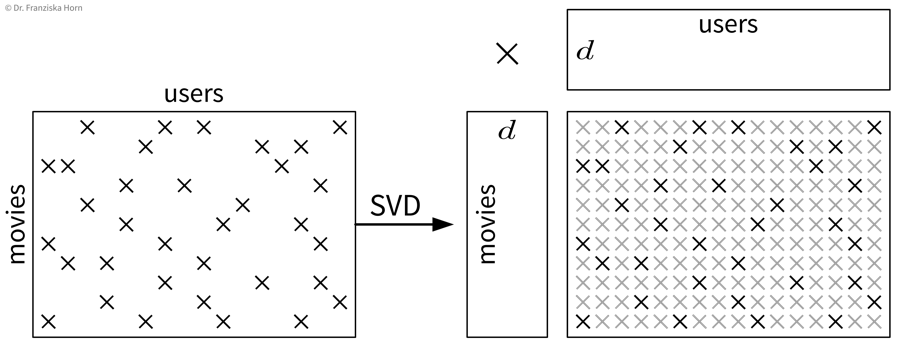

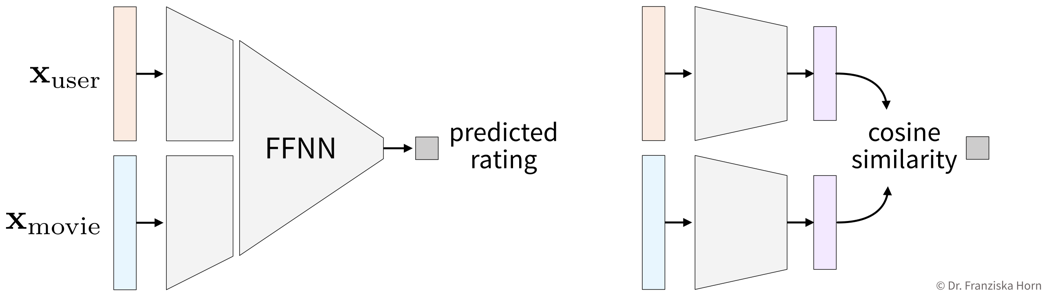

Recommender Systems

-

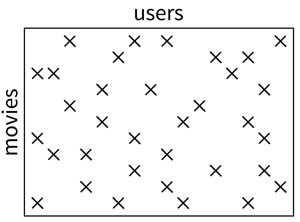

personalized suggestions: given a sample from one type of data (e.g., user, protein structure), identify the most relevant samples from another type of data (e.g., movie, drug composition), for example:

-

show a user movies that other users with a similar taste also liked

-

recommend molecule structures that could fit into a protein structure involved in a certain disease

-

-

little / incomplete data, for example, different users might like the same item for different reasons and it is unclear whether, e.g., a user didn’t watch a movie because he’s not interested in it or because he just didn’t notice it yet
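The idea of showing a user movies that users with a similar taste also liked can be sketched as follows. This is a deliberately tiny, user-based collaborative filtering example in plain Python; the ratings, the cosine similarity over co-rated movies, and the `min_rating` cut-off are all assumptions made for illustration.

```python
from math import sqrt

# Made-up ratings on a scale from 1 (disliked) to 5 (loved).
ratings = {
    "alice": {"Alien": 5, "Blade Runner": 4, "Notting Hill": 1},
    "bob": {"Alien": 5, "Blade Runner": 5, "Notting Hill": 1, "The Matrix": 5},
    "carol": {"Alien": 1, "Blade Runner": 2, "Notting Hill": 5, "Love Actually": 5},
}


def similarity(user_a, user_b):
    """Cosine similarity computed over the movies both users have rated."""
    shared = set(ratings[user_a]) & set(ratings[user_b])
    if not shared:
        return 0.0
    dot = sum(ratings[user_a][m] * ratings[user_b][m] for m in shared)
    norm_a = sqrt(sum(ratings[user_a][m] ** 2 for m in shared))
    norm_b = sqrt(sum(ratings[user_b][m] ** 2 for m in shared))
    return dot / (norm_a * norm_b)


def recommend(user, min_rating=4):
    """Suggest unseen movies that the most similar other user rated highly."""
    others = [other for other in ratings if other != user]
    most_similar = max(others, key=lambda other: similarity(user, other))
    return sorted(
        movie
        for movie, rating in ratings[most_similar].items()
        if rating >= min_rating and movie not in ratings[user]
    )


recommendations = recommend("alice")
print(recommendations)
```

The sketch also shows the incomplete-data problem: a missing rating is simply skipped, although it could mean either "not interested" or "not yet noticed".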

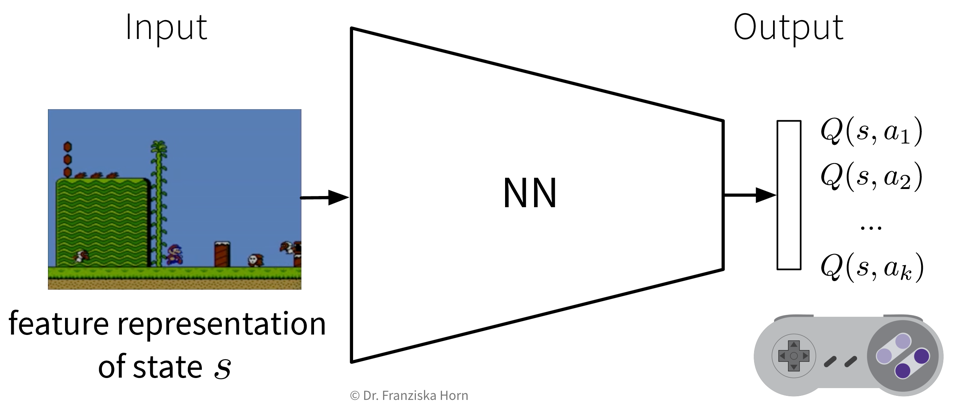

Reinforcement Learning

-

Determine an optimal sequence of actions given changing environmental conditions, for example:

-

virtual agent playing a (video) game

-

robot with complex movement patterns, e.g., picking up differently shaped objects from a box

-

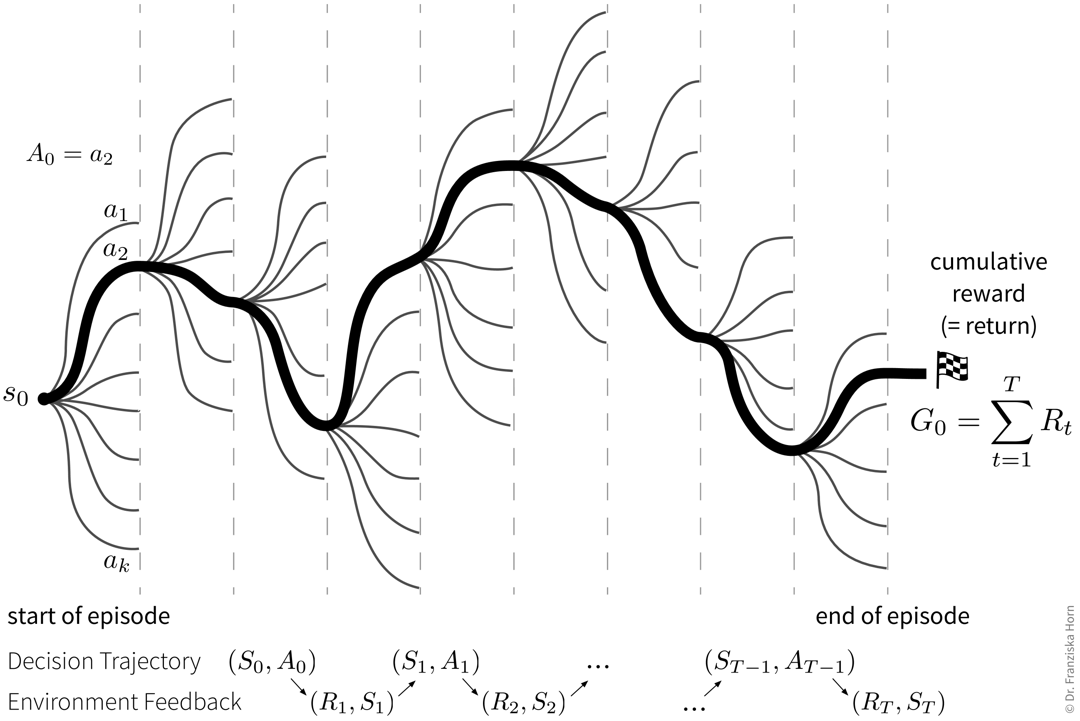

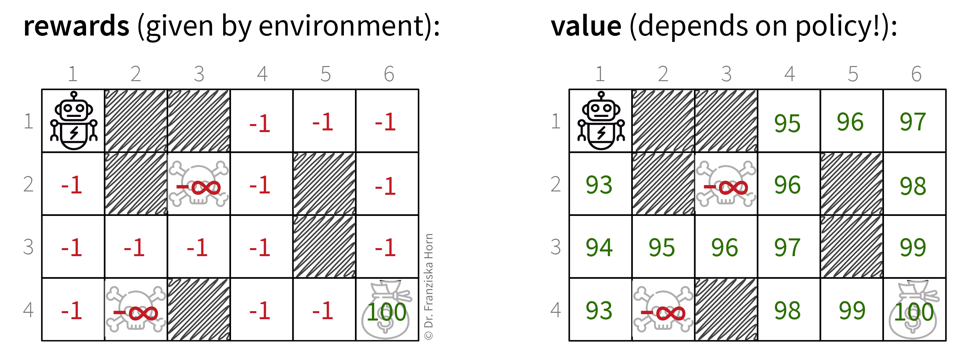

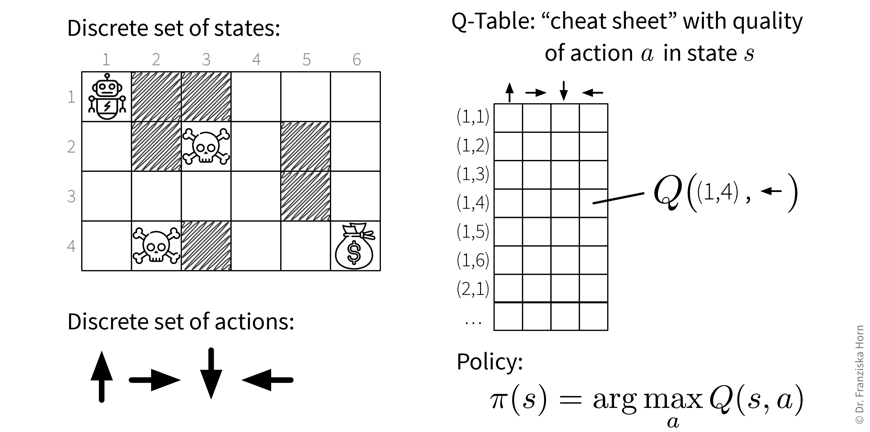

⇒ Unlike in regular optimization, where the optimal inputs given a single specific external condition are determined, here an “agent” (= the RL algorithm) tries to learn an optimal sequence of inputs to maximize the cumulative reward received over multiple time steps, where there can be a significant time delay between the inputs and the rewards that they generate (e.g., in a video game we might need to pick up a key in the beginning of a level, but the door that can be opened with it only comes several frames later).

-

usually requires a simulation environment for the agent to learn in before it starts acting in the real world, but developing an accurate simulation model isn’t easy and the agent will exploit any bugs if that results in higher rewards

-

can be tricky to define a clear reward function that should be optimized (imitation learning is often a better option, where the agent instead tries to mimic the decisions made by a human in some situation)

-

difficult to learn correct associations when there are long delays between critical actions and the received rewards

-

agent generates its own data: if it starts off with a bad policy, it will be tricky to escape from this (e.g., in a video game, if the agent always falls down a gap instead of jumping over it, it never sees the rewards that await on the other side and therefore can’t learn that it would be beneficial to jump over the gap)
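To make the delayed-reward problem more tangible, here is a small sketch using tabular Q-learning, which is just one possible RL algorithm chosen for illustration (the text above does not prescribe a specific method). The corridor environment, the reward of 1 at the last state, and all hyperparameters are assumptions:

```python
import numpy as np

n_states, goal = 6, 5        # corridor with states 0..5; the only reward is at state 5
actions = (-1, +1)           # action 0 = move left, action 1 = move right
Q = np.zeros((n_states, len(actions)))   # estimated cumulative reward per (state, action)
rng = np.random.default_rng(0)
alpha, gamma, epsilon = 0.5, 0.9, 0.2    # learning rate, discount factor, exploration rate

for episode in range(500):
    s = 0
    for step in range(100):
        if rng.random() < epsilon:
            a = int(rng.integers(len(actions)))           # explore: random action
        else:
            best = np.flatnonzero(Q[s] == Q[s].max())     # exploit, breaking ties randomly
            a = int(rng.choice(best))
        s_next = min(max(s + actions[a], 0), n_states - 1)
        r = 1.0 if s_next == goal else 0.0
        # the reward is propagated backwards through the discounted value of the next state
        target = r if s_next == goal else r + gamma * Q[s_next].max()
        Q[s, a] += alpha * (target - Q[s, a])
        s = s_next
        if s == goal:
            break

greedy_policy = Q[:goal].argmax(axis=1)   # best action in each non-terminal state
print(greedy_policy)
```

If ties were not broken randomly, the untrained agent would always pick the first action (move left) and would only rarely reach the reward, which is a small-scale version of the "bad initial policy" problem described in the last point.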

Other

| ML algorithms are categorized by the output they generate for each input. If you want to solve an ‘input → output’ problem with a different output than the ones listed above, you’ll likely have to settle in for a multi-year research project — if the problem can be solved with ML at all! |

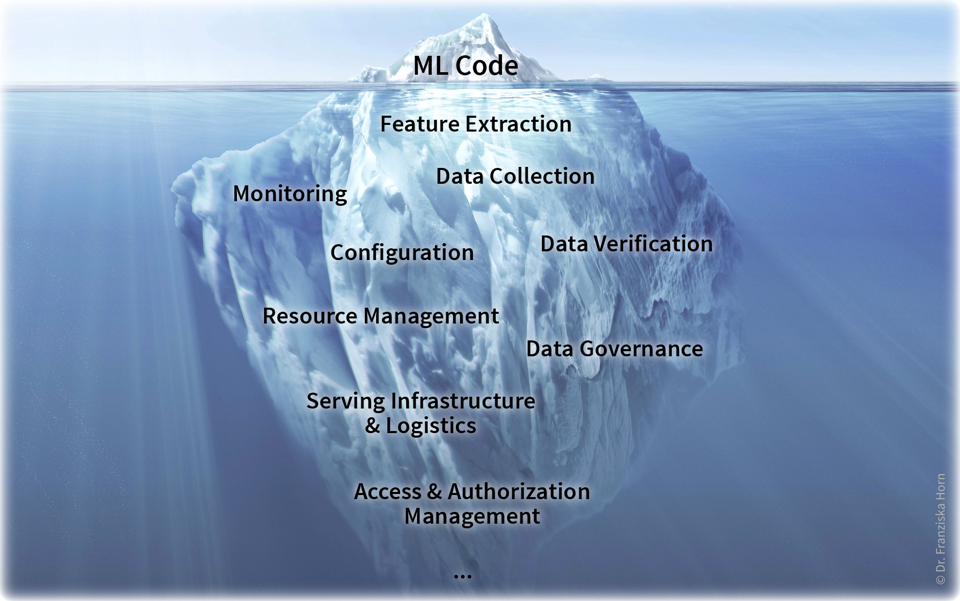

Solving problems with ML

Solving “input → output” problems with ML requires three main steps:

1. Identify a suitable problem

The first (and arguably most important) step is to identify where machine learning can (and should) be used in the first place.

For more details check out this blog article.

2. Devise a working solution

Once a suitable “input → output” problem has been identified, historical data needs to be gathered and the right ML algorithm needs to be selected and applied to obtain a working solution. This is what the next chapters are all about.

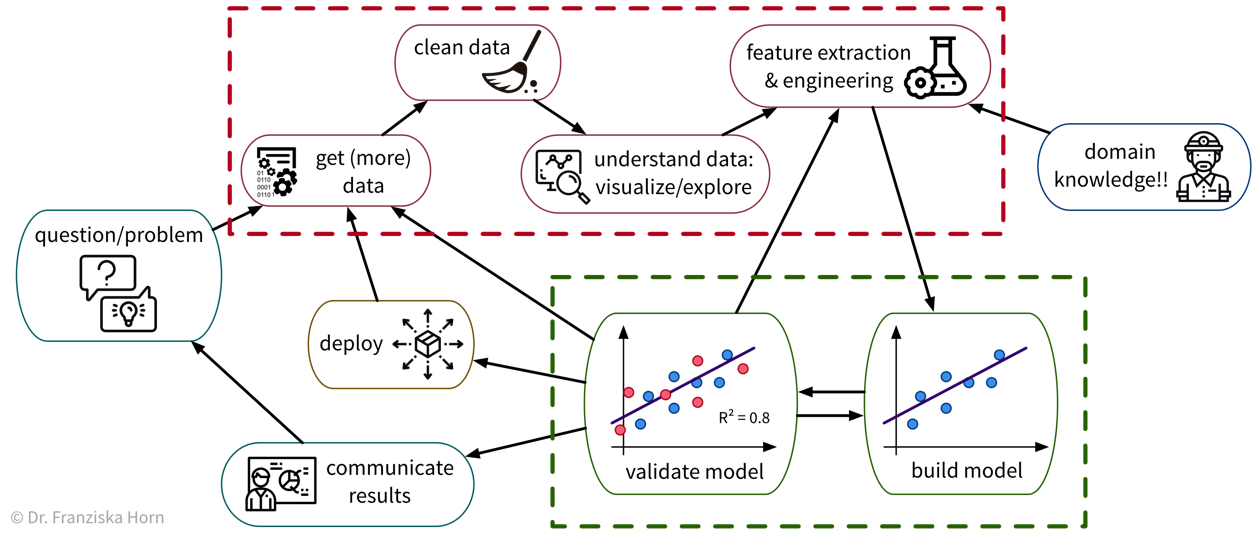

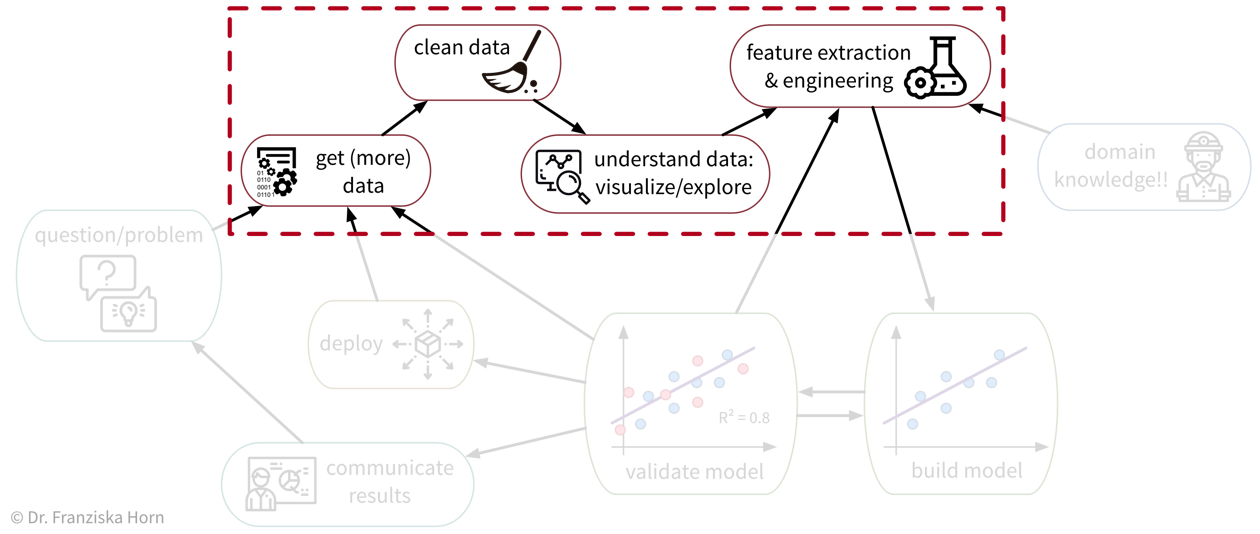

To solve a concrete problem using ML, we follow a workflow like this:

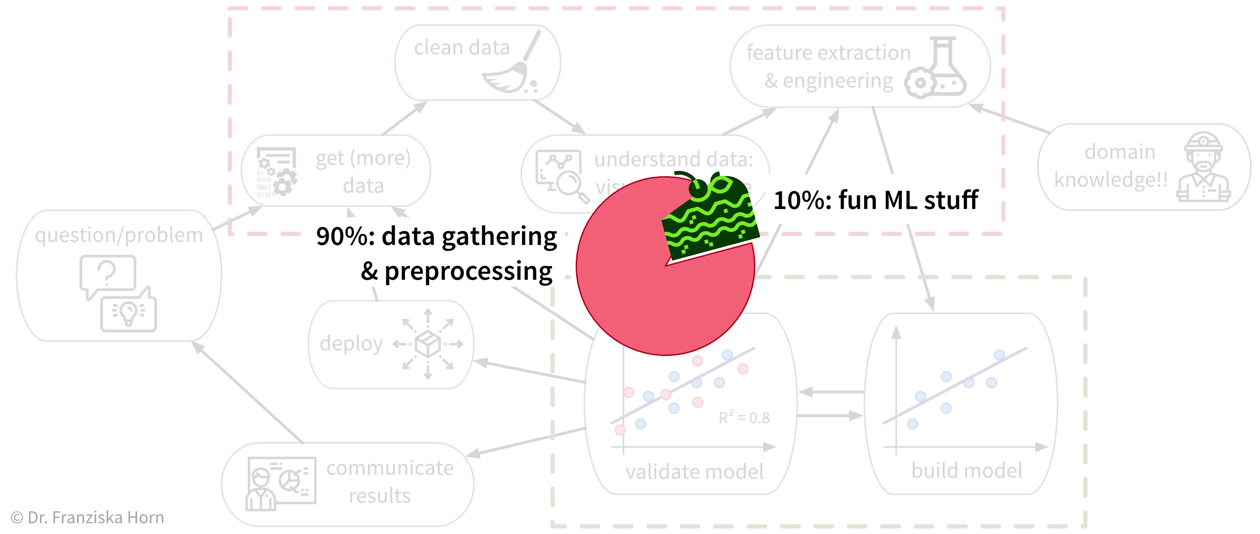

Unfortunately, due to a lack of standardized data infrastructure in many companies, the sad truth is that usually (at least) about 90% of a Data Scientist’s time is spent collecting, cleaning, and otherwise preprocessing the data to get it into a format where the ML algorithms can be applied:

While sometimes frustrating, the time spent cleaning and preprocessing the data is never wasted, as only with a solid data foundation can the ML algorithms achieve decent results.

3. Get it ready for production

When the prototypical solution has been implemented and meets the required performance level, this solution then has to be deployed, i.e., integrated into the general workflow and infrastructure so that it can actually be used to improve the respective process in practice (as a piece of software that continuously makes predictions for new data points). This might also require building some additional software around the ML model such as an API to programmatically query the model or a dedicated user interface to interact with the system. Finally, there are generally two strategies for how to run the finished solution:

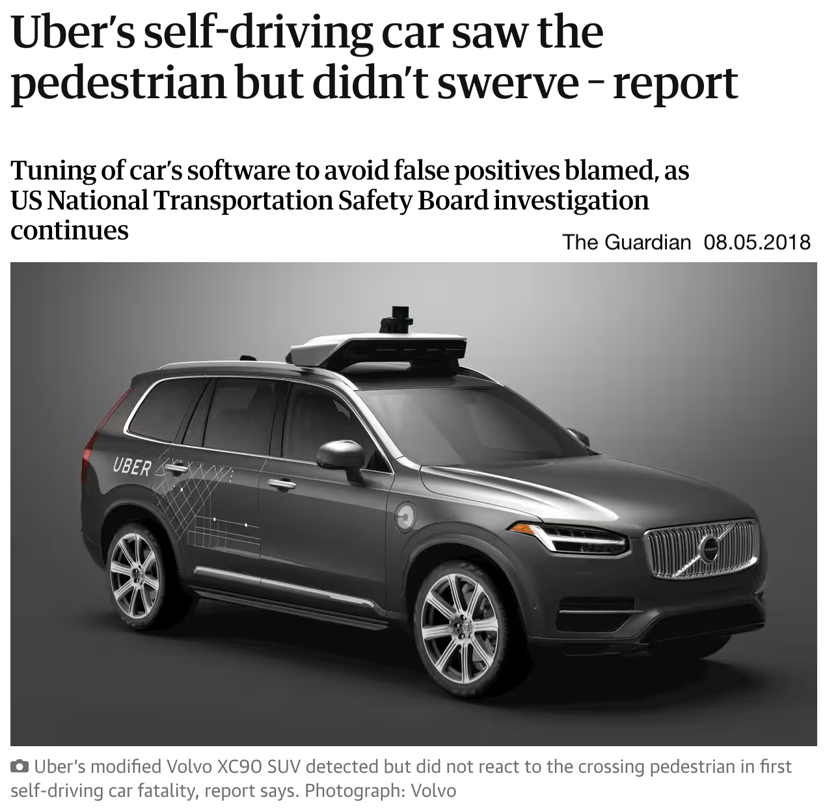

-

The ML model runs on an “edge” device, i.e., on each individual machine (e.g., mobile phone) where the respective data is generated and the output of the model is used in subsequent process steps. This is often the best strategy when results need to be computed in real time and / or a continuous Internet connection cannot be guaranteed, e.g., in self-driving cars. However, the downside of this is that, depending on the type of ML model, comparatively expensive computing equipment needs to be installed in each machine, e.g., GPUs for neural network models.

-

The ML model runs in the “cloud”, i.e., on a central server (either on-premise or provisioned from a cloud provider such as AWS), e.g., in the form of a web application that receives data from individual users, processes it, and sends back the results. This is often the more efficient solution, if a response within a few seconds is sufficient for the use case. However, processing personal information in the cloud also raises privacy concerns. One of the major benefits of this solution is that it is easier to update the ML model, for example, when more historical data becomes available or if the process changes and the model now has to deal with slightly different inputs (we’ll discuss this further in later chapters).

→ As these decisions heavily depend on your specific use case, they go beyond the scope of this book. Search online for “MLOps” or read the book Designing Machine Learning Systems to find out more about these topics and hire a machine learning or data engineer to set up the required infrastructure in your company.

ML with Python

The exercises accompanying this book use the programming language Python.

- Why Python?

-

-

free & open source (unlike, e.g., MATLAB)

-

easy; fast prototyping

-

general purpose language (unlike, e.g., R): easy to incorporate ML into regular applications or web apps

-

fast: many numerical operations are backed with C libraries

-

a lot of open source ML libraries with a very active community!!

-

- How?

-

-

regular scripts (i.e., normal text files ending in .py), especially useful for function definitions that can be reused in different projects

-

IPython shell: interactive console to execute code

-

Jupyter Notebooks (i.e., special files ending in .ipynb): great for experimenting & sharing work with others (also works with other programming languages: Jupyter stands for Julia, Python, and R; you can even mix languages in the same notebook)

-

If you’re unfamiliar with Python, have a look at this Python tutorial specifically written to teach you the basics needed for the examples in this book. This cheat sheet additionally provides a summary of the most important steps when developing a machine learning solution, incl. code snippets using the libraries introduced below.

Overview of Python Libraries for ML

The libraries are always imported with specific abbreviations (e.g., np or pd). It is highly recommended that you stick to these conventions and you will also see this in many code examples online (e.g., on StackOverflow).

- numpy

import numpy as np

- pandas

higher level data manipulation with data stored in a DataFrame table similar to R; very useful for loading data, cleaning, and some exploration with different plots

import pandas as pd

- matplotlib (& seaborn)

create plots (e.g., plt.plot(), plt.scatter(), plt.imshow()).

import matplotlib.pyplot as plt

- plotly

create interactive plots (e.g., px.parallel_coordinates())

import plotly.express as px

- scikit-learn

includes a lot of (non-deep learning) machine learning algorithms, preprocessing tools, and evaluation functions with a unified interface, i.e., all models (depending on their type) have these .fit(), .transform(), and/or .predict() methods, which makes it very easy to switch out models in the code by just changing the line where the model was initialized

```python
# import the model class from the specific submodule
# (xxx and Model are placeholders for the actual submodule and class)
from sklearn.xxx import Model
from sklearn.metrics import accuracy_score

# initialize the model (usually we also set some parameters here)
model = Model()

# preprocessing/unsupervised learning methods:
model.fit(X)  # only pass feature matrix X
X_transformed = model.transform(X)  # e.g., the StandardScaler would return a scaled feature matrix

# supervised learning methods:
model.fit(X, y)  # pass features and labels for training
y_pred = model.predict(X_test)  # generate predictions for new points

# evaluate the model (the internal score function uses the model's preferred evaluation metric)
print("The model is this good:", model.score(X_test, y_test))  # .score() internally calls .predict()
print("Equivalently:", accuracy_score(y_test, y_pred))  # for classification models
```

- torch (& keras)

import torch

(from tensorflow import keras)

- Additional useful libraries to publish and share your work:

- Additional useful Natural Language Processing (NLP) libraries:

-

transformers (Hugging Face: pre-trained neural network models for different tasks)

-

spacy (modern & fast NLP tools)

-

nltk (traditional NLP tools)

-

gensim (topic modeling)

-

beautifulsoup (for parsing websites)
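To make the generic scikit-learn pattern from the library overview more concrete, the following sketch fills in the placeholders with one possible choice: the iris toy dataset, a StandardScaler for preprocessing, and a LogisticRegression classifier. These choices are assumptions made for illustration only; any other dataset or model with the same interface would work the same way.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# load a small example dataset and hold out 30% of the samples for testing
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.3, random_state=0, stratify=y
)

# preprocessing: fit the scaler on the training data only, then transform both sets
scaler = StandardScaler()
scaler.fit(X_train)
X_train_scaled = scaler.transform(X_train)
X_test_scaled = scaler.transform(X_test)

# supervised learning: fit on features and labels, then predict for new points
model = LogisticRegression(max_iter=1000)
model.fit(X_train_scaled, y_train)
y_pred = model.predict(X_test_scaled)

# for classifiers, .score() computes the accuracy
score = model.score(X_test_scaled, y_test)
print("The model is this good:", score)
print("Equivalently:", accuracy_score(y_test, y_pred))
```

Swapping in a different classifier only requires changing the import and the line where `model` is initialized.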

Data Analysis & Preprocessing

As we’ve seen, ML algorithms solve input-output tasks. And to solve an ML problem, we first need to collect data, understand it, and then transform (“preprocess”) it in such a way that ML algorithms can be applied:

Data Analysis

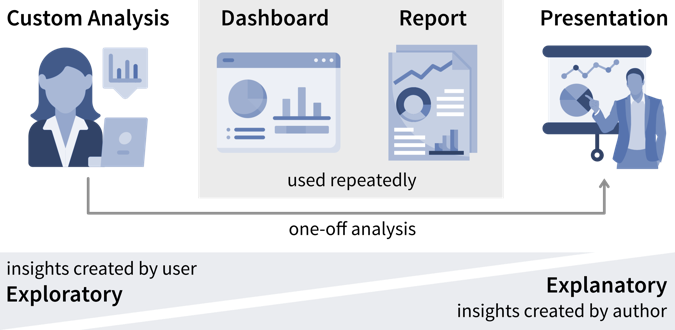

Analyzing data is not only an important step before using this data for a machine learning project, but can also generate valuable insights that result in better (data-driven) decisions. We usually analyze data for one of two reasons:

-

We need some specific information to make a (better) decision (reactive analysis, e.g., when something went wrong and we don’t know why).

-

We’re curious about the data and don’t know yet what the analysis will bring (proactive analysis, e.g., to better understand the data at the beginning of an ML project).

What all forms of data analyses have in common is that we’re after “(actionable) insights”.

Ideally, we should continuously monitor important metrics in dashboards or reports to spot deviations from the norm as quickly as possible, while identifying the root cause often requires a custom analysis.

| As a data analyst you are sometimes approached with more specific questions or requests such as “We’re deciding where to launch a new marketing campaign. Can you show me the number of users for all European countries?”. In these cases it can be helpful to ask “why?” to understand where the person noticed something unexpected that prompted this analysis request. If the answer is “Oh, we just have some marketing budget left over and need to spend the money somewhere” then just give them the results. But if the answer is “Our revenue for this quarter was lower than expected” it might be worth exploring other possible root causes for this, as maybe the problem is not the number of users that visit the website, but that many users drop out before they reach the checkout page and the money might be better invested in a usability study to understand why users don’t complete the sale. |

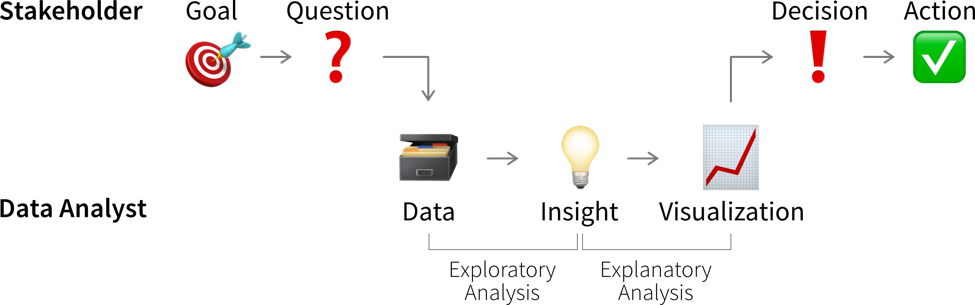

Data-driven Decisions

While learning something about the data and its context is often interesting and can feel rewarding by itself, it is not yet valuable. Insights become valuable when they influence a decision and inspire a different course of action, better than the default that would have been taken without the analysis.

This means we need to understand which decision(s) the insights from our data analysis should influence.

| Not all decisions need to be made in a data-driven way. But decision makers should be transparent and upfront about whether a decision can be influenced by analysis results, i.e., what data would make them change their mind and choose a different course of action. If data is only requested to support a decision that in reality has already been made, save the analysts the time and effort! |

Before we conduct a data analysis we need to be clear on:

-

Who are the relevant stakeholders, i.e., who will consume the data analysis results (= our audience / dashboard users)?

-

What is their goal?

In business contexts, the users' goals are usually in some way related to making a profit for the company, i.e., increasing revenue (e.g., by solving a customer problem more effectively than the competition) or reducing costs.

The progress towards these goals is tracked with so-called Key Performance Indicators (KPIs), i.e., custom metrics that tell us how well things are going. For example, if we’re working on a web application, one KPI we might want to track could be “user happiness”. Unfortunately, true user happiness is difficult to measure, but we can instead check the number of users returning to our site and how long they stay and then somehow combine these and other measurements into a proxy variable that we then call “user happiness”.

| A KPI is only a reliable measure, if it is not simultaneously used to control people’s behavior, as they will otherwise try to game the system (Goodhart’s Law). For example, if our goal is high quality software, counting the number of bugs in our software is not a reliable measure for quality, if we simultaneously reward programmers for every bug they find and fix. |

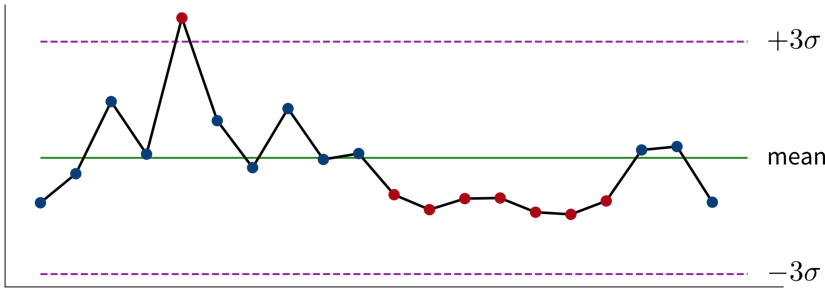

The first step when making a data-driven decision is to realize that we should act by monitoring our KPIs to see whether we’re on track to achieve our goals.

Ideally, this is achieved by combining these metrics with thresholds for alerts to automatically notify us if things go south and a corrective action becomes necessary. For example, we could establish some alert on the health of a system or machine to notify a technician when maintenance is necessary. To avoid alert fatigue, it is important to reduce false alarms, i.e., configure the alert such that the responsible person tells you “when this threshold is reached, I will drop everything else and go fix the problem” (not “at this point we should probably keep an eye on it”).

Depending on how frequently the value of the KPI changes and how quickly corrective actions show effects, we want to check for the alert condition either every few minutes to alert someone in real time or, for example, every morning, every Monday, or once per month if the values change more slowly.

For every alert that is created, i.e., every time it is clear that a corrective action is needed, it is worth considering whether this action can be automated and to directly trigger this automated action together with the alert (e.g., if the performance of an ML model drops below a certain threshold, instead of just notifying the data scientist we could automatically trigger a retraining with the most recent data). If this is not possible, e.g., because it is not clear what exactly happened and therefore which action should be taken, we need a deeper analysis.
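A threshold-based alert of this kind can be sketched with pandas. The KPI values, the threshold of 0.85, and the helper `check_alert` are hypothetical and only meant to show the mechanics of a daily check:

```python
import pandas as pd

# Hypothetical daily KPI values (e.g., the accuracy of a deployed ML model)
kpi = pd.Series(
    [0.93, 0.92, 0.94, 0.91, 0.84, 0.83],
    index=pd.date_range("2024-01-01", periods=6, freq="D"),
    name="model_accuracy",
)
ALERT_THRESHOLD = 0.85  # assumed value; should be agreed on with the responsible person


def check_alert(series, threshold):
    """Return the time stamps at which the KPI fell below the threshold."""
    return series.index[series < threshold]


alert_days = check_alert(kpi, ALERT_THRESHOLD)
if len(alert_days) > 0:
    # this is where a notification or an automated action (e.g., retraining) would be triggered
    print(f"ALERT: {kpi.name} below {ALERT_THRESHOLD} since {alert_days[0].date()}")
```

In practice, such a check would run on a schedule that matches how quickly the KPI changes.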

Digging deeper into the data can help us answer questions such as “Why did we not reach this goal and how can we do better?” (or, in rarer cases, “Why did we exceed this goal and how can we do it again?”) to decide on the specific action to take.

| Don’t just look for data that confirms the story you want to tell and supports the action you wanted to take from the start (i.e., beware of confirmation bias)! Instead be open and actively try to disprove your hypothesis. |

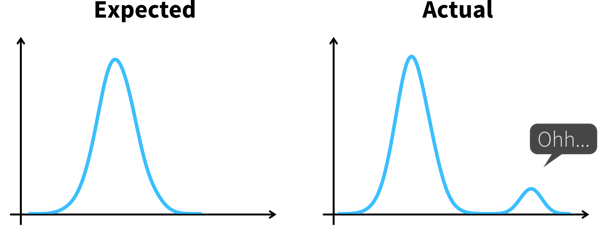

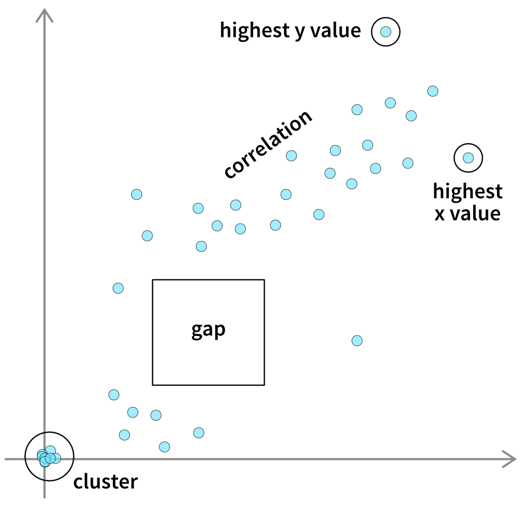

Such an exploratory analysis is often a quick and dirty process where we generate lots of plots to better understand the data and where the difference between what we expected and what we saw in the data is coming from, e.g., by examining other correlated variables. However, arriving at satisfactory answers is often more art than science.

| When using an ML model to predict a KPI, we can interpret this model and its predictions to better understand which variables might influence the KPI. Focusing on the features deemed important by the ML model can be helpful if our dataset contains hundreds of variables and we don’t have time to look at all of them in detail. But use with caution — the model only learned from correlations in the data; these do not necessarily represent true causal relationships between the variables. |

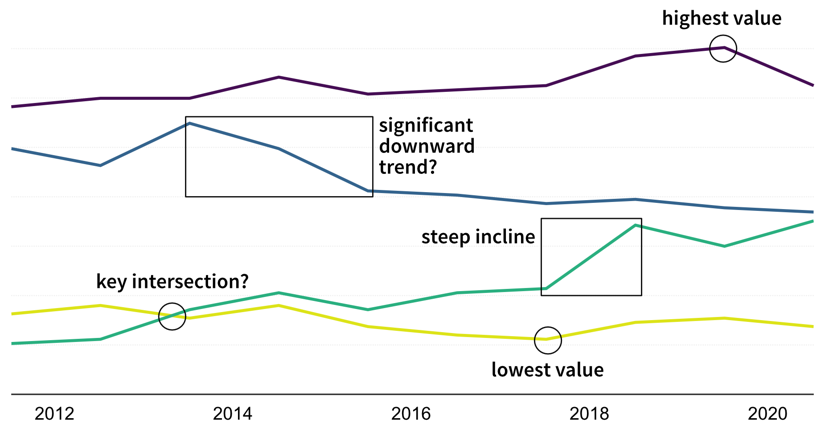

Communicating Insights

The plots that were created during an exploratory analysis should not be the plots we show our audience when we’re trying to communicate our findings. Since our audience is far less familiar with the data than us and probably also not interested / doesn’t have the time to dive deeper into the data, we need to make the results more accessible, a process often called explanatory analysis.

| Don’t “just show all the data” and hope that your audience will make something of it; this is the downfall of many dashboards. It is essential that you understand what goal your audience is trying to achieve and what questions they need answers to. |

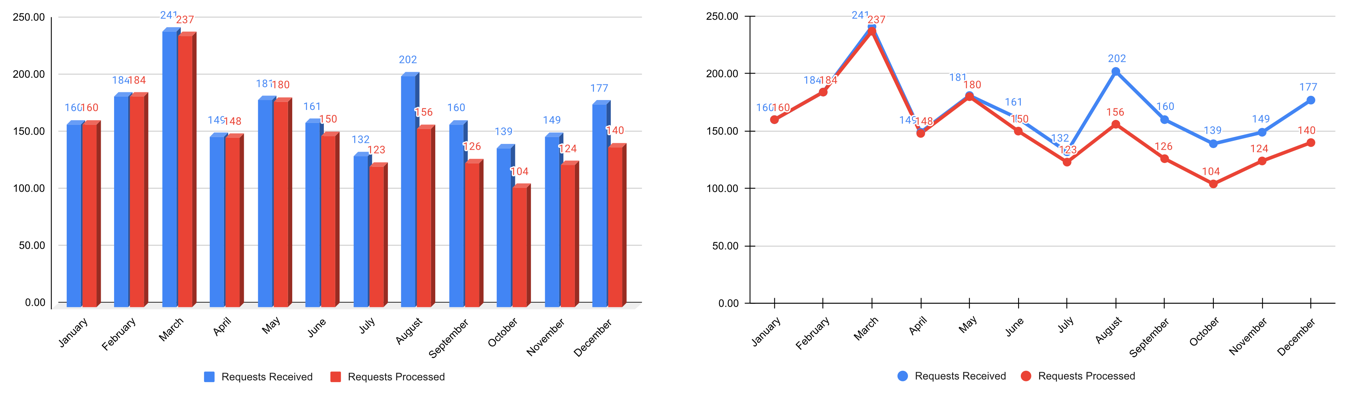

Step 1: Choose the right plot type

-

get inspired by visualization libraries (e.g., here or here), but avoid the urge to create fancy graphics; sticking with common visualizations makes it easier for the audience to correctly decode the presented information

-

don’t use 3D effects!

-

avoid pie or donut charts (angles are hard to interpret)

-

use line plots for time series data

-

use horizontal instead of vertical bar charts for audiences that read left to right

-

start the y-axis at 0 for area & bar charts

-

consider using small multiples or sparklines instead of cramming too much into a single chart

Step 2: Cut clutter / maximize data-to-ink ratio

-

remove border

-

remove gridlines

-

remove data markers

-

clean up axis labels

-

label data directly

Step 3: Focus attention

-

start with gray, i.e., push everything in the background

-

use pre-attentive attributes like color strategically to highlight what’s most important

-

use data labels sparingly

Step 4: Make data accessible

-

add context: Which values are good (goal state), which are bad (alert threshold)? Should the value be compared to another variable (e.g., actual vs. forecast)?

-

leverage consistent colors when information is spread across multiple plots (e.g., data from a certain country is always drawn in the same color)

-

annotate the plot with text explaining the main takeaways (if this is not possible, e.g., in dashboards where the data keeps changing, the title can instead include the question that the plot should answer, e.g., “Does our revenue follow the projections?”)
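As a rough sketch of how these four steps could look in matplotlib, the example below plots made-up monthly revenue numbers for three countries (all values and names are hypothetical). It uses a line plot for the time series, removes clutter, pushes everything except one series into the background, and states the takeaway in the title:

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend so the script also runs without a display
import matplotlib.pyplot as plt
import numpy as np

months = np.arange(1, 13)
# Hypothetical monthly revenue per country (made-up numbers)
revenue = {
    "Germany": 50 + 2.0 * months,
    "France": 45 + 1.0 * months,
    "Spain": 40 + 0.5 * months,
}
highlight = "Germany"

fig, ax = plt.subplots(figsize=(6, 3.5))   # Step 1: simple line plot for time series data
for country, values in revenue.items():
    is_focus = country == highlight
    # Step 3: start with gray and use color only for what is most important
    ax.plot(months, values, color="tab:blue" if is_focus else "lightgray",
            linewidth=2.5 if is_focus else 1.5)
    # Step 2: label the data directly instead of using a legend
    ax.text(months[-1] + 0.2, values[-1], country,
            color="tab:blue" if is_focus else "gray", va="center")

# Step 2: cut clutter (no top/right border, no gridlines, few axis labels)
for side in ("top", "right"):
    ax.spines[side].set_visible(False)
ax.grid(False)
ax.set_xticks([1, 4, 7, 10])
ax.set_xticklabels(["Jan", "Apr", "Jul", "Oct"])
ax.set_ylabel("Revenue (k€)")
# Step 4: the title states the main takeaway
ax.set_title("Revenue in Germany grows fastest", loc="left")
fig.tight_layout()
fig.savefig("revenue_plot.png", dpi=150)
```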

Garbage in, garbage out!

Remember: data is our raw material when producing something valuable with ML. If the quality or quantity of the data is insufficient, we are facing a “garbage in, garbage out” scenario and no matter what kind of fancy ML algorithm we try, we won’t get a satisfactory result. In fact, the fancier the algorithm (e.g., deep learning), the more data we need.

Below you find a summary of some common risks associated with data that can make it complicated or even impossible to apply ML:

| If you can, observe how the data is collected. As in: actually physically stand there and watch how someone enters the values in some program or how the machine operates as the sensors measure something. You will probably notice some things that can be optimized in the data collection process directly, which will save you lots of preprocessing work in the future. |

- Best Practice: Data Catalog

-

To make datasets more accessible, especially in larger organizations, they should be documented. For example, in structured datasets, there should be information available on each variable like:

-

Name of the variable

-

Description

-

Units

-

Data type (e.g., numerical or categorical values)

-

Date of first measurement (e.g., in case a sensor was installed later than the others)

-

Normal/expected range of values (→ “If this variable is below this threshold, then the machine is off and the data points can be ignored.”)

-

How missing values are recorded, i.e., whether they are recorded as missing values or substituted with some unrealistic value instead, which can happen since some sensors are not able to send a signal for “Not a Number” (NaN) directly or the database does not allow for the field to be empty.

-

Notes on anything else you should be aware of, e.g., a sensor malfunctioning during a certain period of time or some other glitch that resulted in incorrect data. This can otherwise be difficult to spot, for example, if someone instead manually entered or copy & pasted values from somewhere, which look normal at first glance.

-

You can find further recommendations on what is particularly important when documenting datasets for machine learning applications in the Data Cards Playbook.
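The point about missing values that were substituted with an unrealistic value can be illustrated with a small pandas sketch. The sensor values and the placeholder code -999 are assumptions; in a real project, this code would come from the data catalog:

```python
import numpy as np
import pandas as pd

# Hypothetical sensor log where -999 was written whenever the sensor sent no signal
df = pd.DataFrame({"temperature": [201.5, -999.0, 203.1, 202.4, -999.0]})
MISSING_CODE = -999.0  # assumption: documented in the data catalog entry for this variable

# without knowing about the placeholder, summary statistics are badly distorted
naive_mean = df["temperature"].mean()

# replace the placeholder with a proper missing value (NaN)
df["temperature"] = df["temperature"].replace(MISSING_CODE, np.nan)
clean_mean = df["temperature"].mean()  # pandas skips NaNs by default

print(f"mean with placeholder values: {naive_mean:.1f}")
print(f"mean with proper NaNs:        {clean_mean:.1f}")
```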

| In addition to documenting datasets as a whole, it is also helpful to store metadata for individual samples. For example, for image data, this could include the time stamp of when the image was taken, the geolocation (or if the camera is built into a manufacturing machine then the ID of this machine), information about the camera settings, etc. This can greatly help when analyzing model prediction errors, as it might turn out that, for example, images taken with a particular camera setting are especially difficult to classify, which in turn gives us some hints on how to improve the data collection process. |

Data Preprocessing

Now that we better understand our data and have verified that it is (hopefully) of good quality, we can get it ready for our machine learning algorithms.

What constitutes one data point?

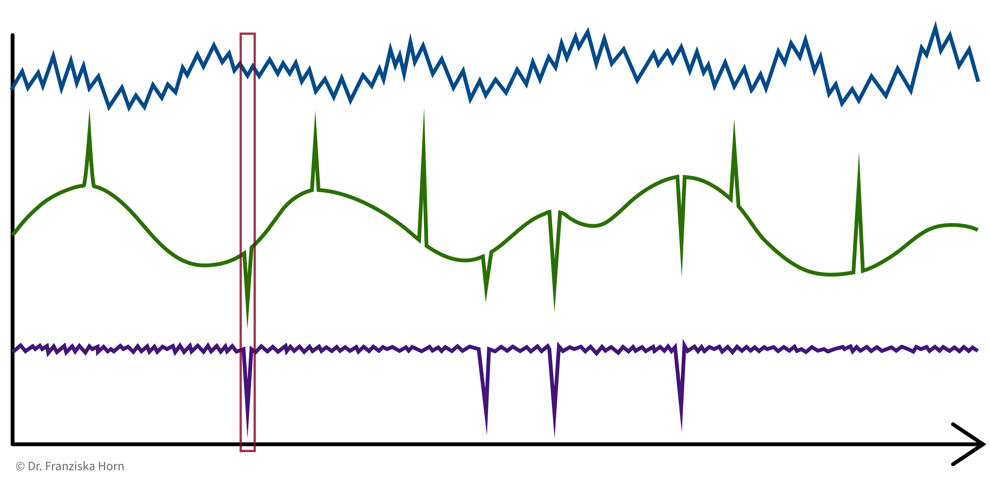

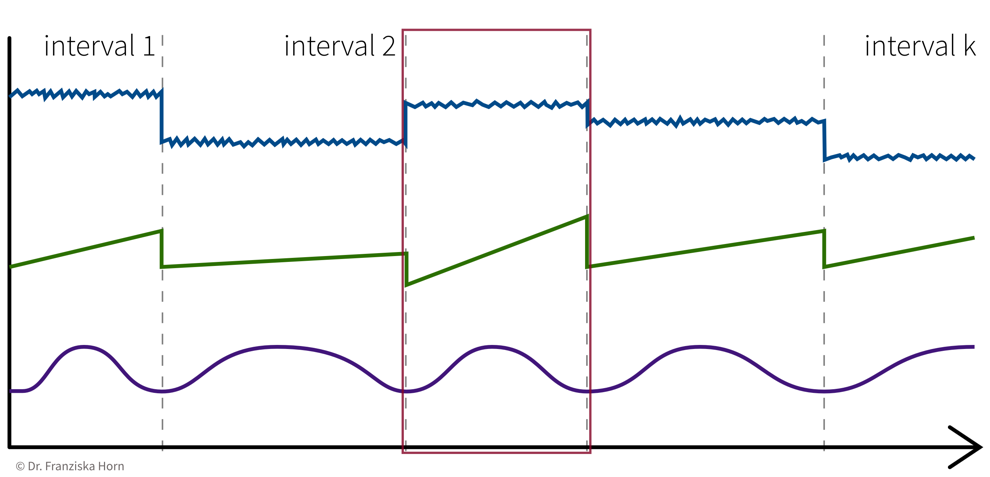

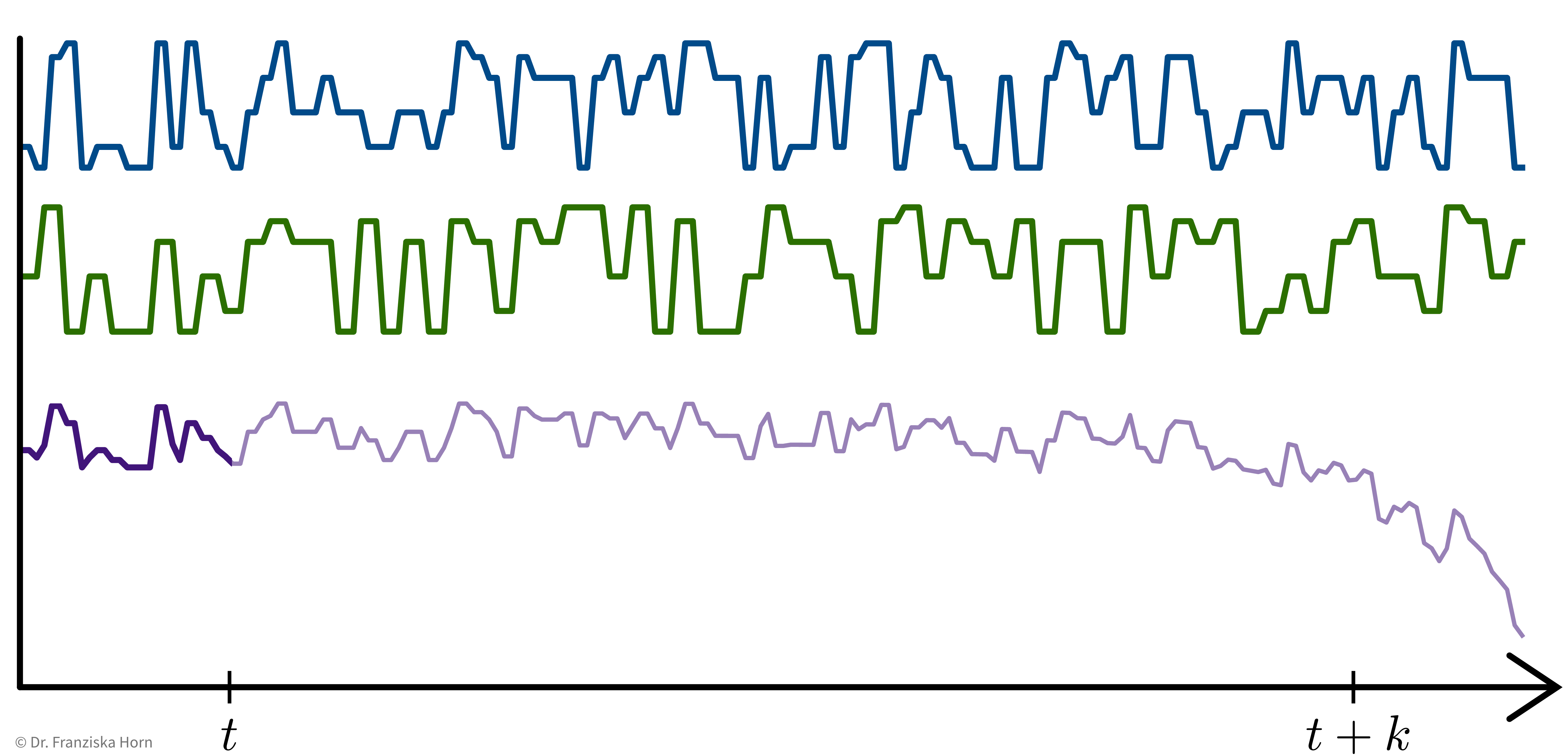

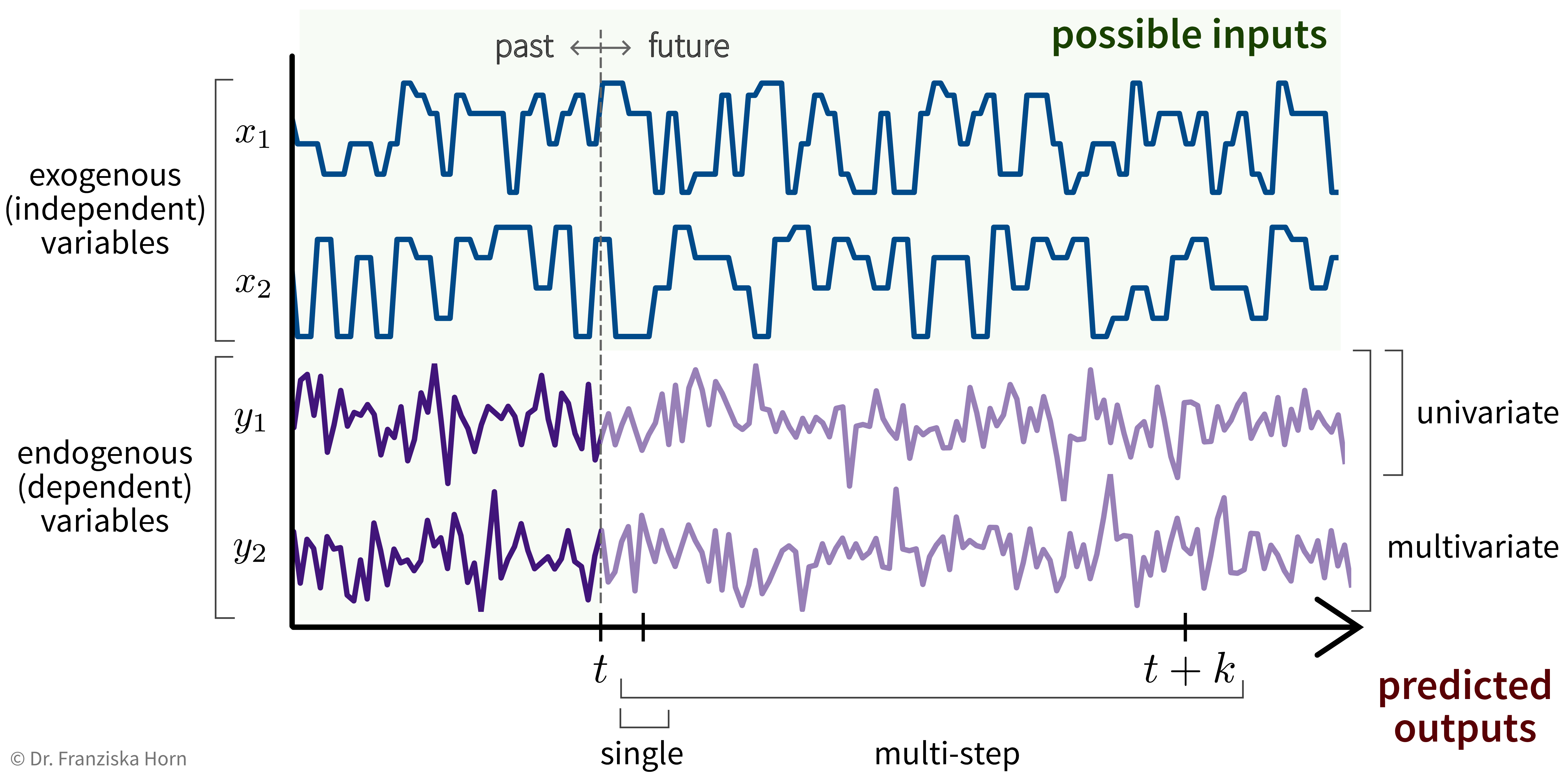

Even given the same raw data, depending on what problem we want to solve, the definition of ‘one data point’ can be quite different. For example, when dealing with time series data, the raw data is in the form ‘n time points with measurements from d sensors’, but depending on the type of question we are trying to answer, the actual feature matrix can look quite different:

- 1 Data Point = 1 Time Point

-

e.g., anomaly detection ⇒ determine for each time point if everything is normal or if there is something strange going on:

→ \(X\): n time points \(\times\) d sensors, i.e., n data points represented as d-dimensional feature vectors

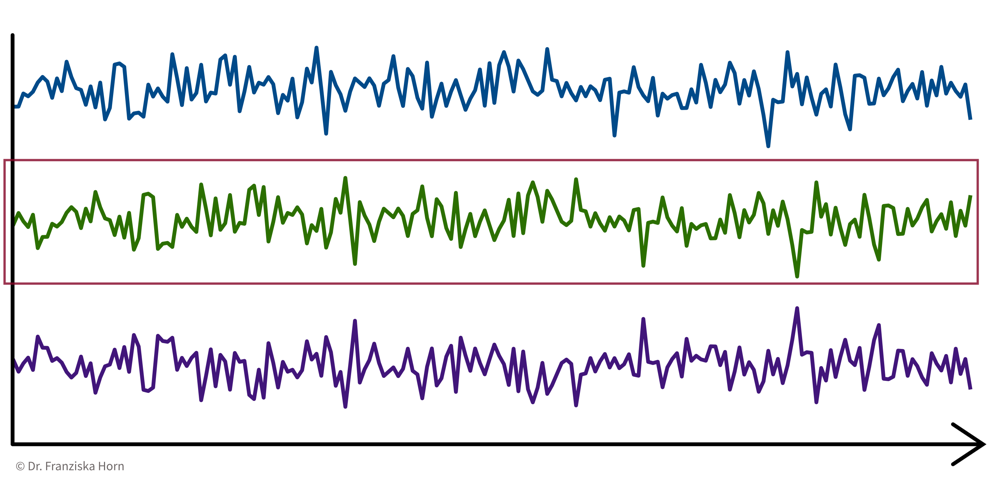

- 1 Data Point = 1 Time Series

-

e.g., cluster sensors ⇒ see if some sensors measure related things:

→ \(X\): d sensors \(\times\) n time points, i.e., d data points represented as n-dimensional feature vectors

- 1 Data Point = 1 Time Interval

-

e.g., classify time segments ⇒ products are being produced one after another, some take longer to produce than others, and the task is to predict whether a product produced during one time window at the end meets the quality standards, i.e., we’re not interested in the process over time per se, but instead regard each produced product (and therefore each interval) as an independent data point:

→ Data points always need to be represented as fixed-length feature vectors, where each dimension stands for a specific input variable. Since the intervals here have different lengths, we can’t just represent one product as the concatenation of all the sensor measurements collected during its production time interval, since these vectors would not be comparable for the different products. Instead, we compute features for each time segment by aggregating the sensor measurements over the interval (e.g., min, max, mean, slope, …).

→ \(X\): k intervals \(\times\) q features (derived from the d sensors), i.e., k data points represented as q-dimensional feature vectors
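The three definitions of "one data point" can be sketched with the same raw data. The random sensor values, the sensor names, and the assignment of time points to products (`product_id`) are made up for illustration:

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
n, d = 12, 3  # n time points, d sensors
raw = pd.DataFrame(rng.normal(size=(n, d)), columns=["sensor_a", "sensor_b", "sensor_c"])

# 1 data point = 1 time point: n data points with d features each
X_time_points = raw.to_numpy()

# 1 data point = 1 time series: d data points with n features each
X_sensors = raw.to_numpy().T

# 1 data point = 1 time interval: intervals have different lengths (3, 5, and 4 time points),
# so we aggregate the measurements per interval into a fixed number of features
product_id = np.array([0] * 3 + [1] * 5 + [2] * 4)  # hypothetical: product made at each time point
X_intervals = raw.groupby(product_id).agg(["min", "max", "mean"])

print(X_time_points.shape, X_sensors.shape, X_intervals.shape)
```

Here k = 3 intervals are each described by q = 9 features (3 aggregations for each of the 3 sensors).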

Feature Extraction

Machine learning algorithms only work with numbers. But some data does not consist of numerical values (e.g., text documents) or these numerical values should not be interpreted as such (e.g., sports players have numbers on their jerseys, but these numbers don’t mean anything in a numeric sense, i.e., higher numbers don’t mean the person scored more goals, they are merely IDs).

For the second case, statisticians distinguish between nominal, ordinal, interval, and ratio data, but for simplicity we lump the first two together as categorical data, while the other two are considered meaningful numerical values.

For both text and categorical data we need to extract meaningful numerical features from the original data. We’ll start with categorical data and deal with text data at the end of the section.

Categorical features can be transformed with a one-hot encoding, i.e., by creating dummy variables that enable the model to introduce a different offset for each category.

For example, our dataset could include samples from four product categories circle, triangle, square, and pentagon, where each data point (representing a product) falls into exactly one of these categories. Then we create four features, is_circle, is_triangle, is_square, and is_pentagon, and indicate a data point’s product category using a binary flag, i.e., a value of 1 at the index of the true category and 0 everywhere else:

e.g., product category: triangle ⇒ [0, 1, 0, 0]
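A minimal sketch of this encoding with scikit-learn could look as follows. The category order is passed explicitly so that the columns match the order used in the text; the three example products are made up:

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder

categories = ["circle", "triangle", "square", "pentagon"]
# one column with the product category of three hypothetical products
products = np.array([["triangle"], ["circle"], ["pentagon"]])

# fix the column order instead of letting the encoder sort the categories alphabetically
encoder = OneHotEncoder(categories=[categories])
one_hot = encoder.fit_transform(products).toarray()  # convert the sparse result to a dense array
print(one_hot)
```

Each row contains exactly one 1, at the index of the product's category.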

from sklearn.preprocessing import OneHotEncoder

Feature Engineering & Transformations

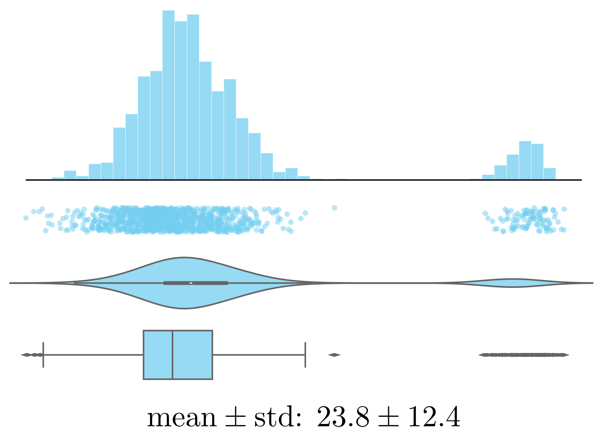

Often it is very helpful to not just use the original features as is, but to compute new, more informative features from them, a common practice called feature engineering. Additionally, one should also check the distributions of the individual variables (e.g., by plotting a histogram) to see if the features are approximately normally distributed (which many ML models implicitly assume or at least benefit from).

- Generate additional features (i.e., feature engineering)

-

-

General purpose library: missing data imputation, categorical encoders, numerical transformations, and much more.

→ feature-engine library

-

Feature combinations: e.g., product/ratio of two variables. For example, compute a new feature as the ratio of the temperature inside a machine to the temperature outside in the room.

→ autofeat library (Disclaimer: written by yours truly.)

-

Relational data: e.g., aggregations across tables. For example, in a database one table contains all the customers and another table contains all transactions and we compute a feature that shows the total volume of sales for each customer, i.e., the sum of the transactions grouped by customers.

→ featuretools library

-

Time series data: e.g., min/max/average over time intervals.

→ tsfresh library

⇒ Domain knowledge is invaluable here — instead of blindly computing hundreds of additional features, ask a subject matter expert which derived values she thinks might be the most helpful for the problem you’re trying to solve!
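Two of the feature types listed above (a ratio of two variables and an aggregation across tables) can also be computed by hand with plain pandas. This sketch does not use the libraries mentioned above; all table and column names as well as the values are hypothetical:

```python
import pandas as pd

# Feature combination: ratio of the temperature inside a machine to the room temperature
machines = pd.DataFrame({
    "temp_inside": [310.0, 295.0, 330.0],
    "temp_outside": [20.0, 25.0, 22.0],
})
machines["temp_ratio"] = machines["temp_inside"] / machines["temp_outside"]

# Relational data: total volume of sales per customer, aggregated from a transactions table
customers = pd.DataFrame({"customer_id": [1, 2, 3]})
transactions = pd.DataFrame({
    "customer_id": [1, 1, 2, 1],
    "amount": [10.0, 25.0, 40.0, 5.0],
})
total_sales = (
    transactions.groupby("customer_id", as_index=False)["amount"]
    .sum()
    .rename(columns={"amount": "total_sales"})
)
# left join keeps customers without transactions; their total is set to 0
customers = customers.merge(total_sales, on="customer_id", how="left")
customers["total_sales"] = customers["total_sales"].fillna(0.0)
print(customers)
```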

-

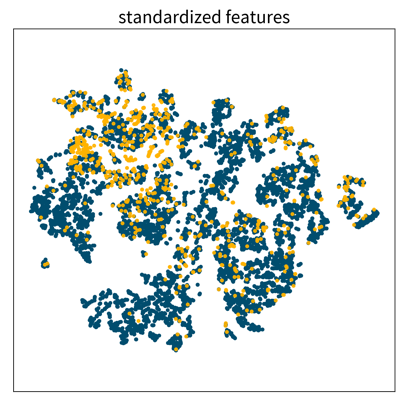

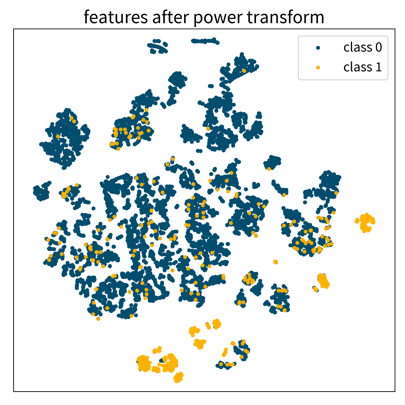

- Aim for normally/uniformly distributed features

-

This is especially important for heterogeneous data:

For example, given a dataset with different kinds of sensors with different scales, like a temperature that varies between 100 and 500 degrees and a pressure sensor that measures values between 1.1 and 1.8 bar:

→ the ML model only sees the values and does not know about the different units

⇒ a difference of 0.1 for pressure might be more significant than a difference of 10 for the temperature.

-

for each feature: subtract mean & divide by standard deviation (i.e., transform an arbitrary Gaussian distribution into a standard normal distribution)

from sklearn.preprocessing import StandardScaler

-

for each feature: scale between 0 and 1

from sklearn.preprocessing import MinMaxScaler

-

map to Gaussian distribution (e.g., take log/sqrt if the feature shows a skewed distribution with a few extremely large values)

from sklearn.preprocessing import PowerTransformer
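The three transformers can be tried out on synthetic data. In this sketch, the temperature and pressure ranges follow the example above, while the sample size and the log-normally distributed third feature are assumptions chosen to show a skewed distribution:

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler, PowerTransformer, StandardScaler

rng = np.random.default_rng(0)
temperature = rng.uniform(100, 500, size=200)  # degrees
pressure = rng.uniform(1.1, 1.8, size=200)     # bar
X = np.column_stack([temperature, pressure])

# subtract the mean & divide by the standard deviation (per feature)
X_std = StandardScaler().fit_transform(X)
print("mean:", X_std.mean(axis=0).round(3), "std:", X_std.std(axis=0).round(3))

# scale each feature to the range [0, 1]
X_minmax = MinMaxScaler().fit_transform(X)
print("min:", X_minmax.min(axis=0), "max:", X_minmax.max(axis=0))

# map a skewed feature with a few very large values to a more Gaussian-like shape
skewed = rng.lognormal(mean=0.0, sigma=1.0, size=(200, 1))
skewed_gauss = PowerTransformer().fit_transform(skewed)
print("mean after power transform:", skewed_gauss.mean().round(3))
```

After scaling, both sensors live on a comparable scale, so the model no longer implicitly treats the temperature as more important just because its values are larger.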

-

Computing Similarities

Many ML algorithms rely on similarities or distances between data points, computed with measures such as:

-

Cosine similarity (e.g., when working with text data)

-

Similarity coefficients (e.g., Jaccard index)

-

… and many more.

from sklearn.metrics.pairwise import ...

| Which feature should have how much influence on the similarity between points? → Domain knowledge! |

-

⇒ Scale / normalize heterogeneous data: For example, the pressure difference between 1.1 and 1.3 bar might be more dramatic in the process than the temperature difference between 200 and 220 degrees, but if the distance between data points is computed with the unscaled values, then the difference in temperature completely overshadows the difference in pressure.

-

⇒ Exclude redundant / strongly correlated features, as they otherwise count twice towards the distance.
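The effect of scaling on distances can be checked with a few numbers. The three data points below are made up; the value ranges used for scaling (100 to 500 degrees, 1.1 to 1.8 bar) are taken from the sensor example earlier in this section:

```python
import numpy as np

# columns: temperature (degrees), pressure (bar)
a = np.array([200.0, 1.1])
b = np.array([220.0, 1.1])  # differs from a only in temperature
c = np.array([200.0, 1.3])  # differs from a only in pressure


def euclidean(u, v):
    """Euclidean distance between two feature vectors."""
    return np.linalg.norm(u - v)


# with unscaled values, the temperature difference dominates the distance
d_ab_raw, d_ac_raw = euclidean(a, b), euclidean(a, c)

# min-max scaling with the assumed value ranges of the two sensors
low = np.array([100.0, 1.1])
high = np.array([500.0, 1.8])


def scale(x):
    return (x - low) / (high - low)


d_ab_scaled = euclidean(scale(a), scale(b))
d_ac_scaled = euclidean(scale(a), scale(c))

print(f"unscaled: d(a,b) = {d_ab_raw:.2f}, d(a,c) = {d_ac_raw:.2f}")
print(f"scaled:   d(a,b) = {d_ab_scaled:.3f}, d(a,c) = {d_ac_scaled:.3f}")
```

With the raw values, point b looks much farther away from a than c does; after scaling, the pressure difference is the larger one.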

Working with Text Data

As mentioned before, machine learning algorithms cannot work with text data directly, but we first need to extract meaningful numerical features from it.

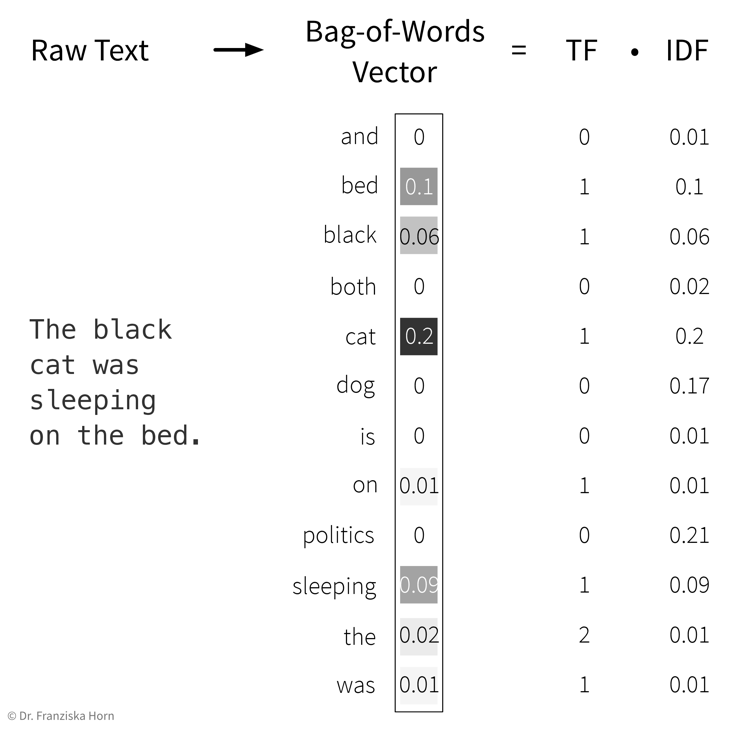

- Feature extraction: Bag-of-Words (BOW) TF-IDF features:

-

Represent a document as the weighted counts of the words occurring in the text:

-

Term Frequency (TF): how often does this word occur in the current text document.

-

Inverse Document Frequency (IDF): a weight to capture how significant this word is. This is computed by comparing the total number of documents in the dataset to the number of documents in which the word occurs. The IDF weight thereby reduces the overall influence of words that occur in almost all documents (e.g., so-called stopwords like ‘and’, ‘the’, ‘a’, …).

Please note that here the feature vector is shown as a column vector, but since each document is one data point, it is actually one row in the feature matrix \(X\), while the TF-IDF values for the individual words are the features in the columns.

→ First, the whole corpus (= a dataset consisting of text documents) is processed once to determine the overall vocabulary (i.e., the unique words occurring in all documents that then make up the dimensionality of the BOW feature vector) and to compute the IDF weights for all words. Then each individual document is processed to compute the final TF-IDF vector by counting the words occurring in the document and multiplying these counts with the respective IDF weights.

-

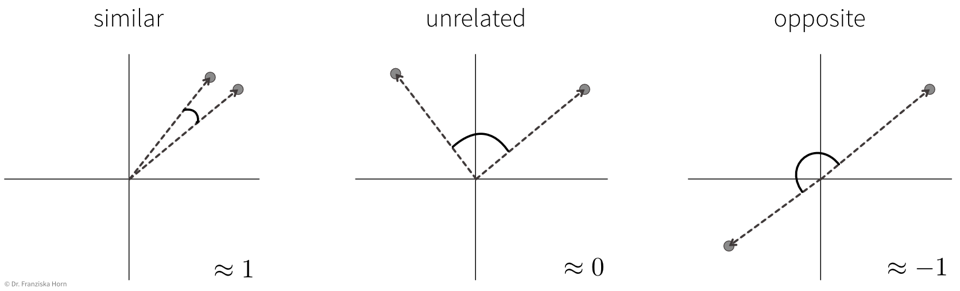

from sklearn.feature_extraction.text import TfidfVectorizer

- Computing similarities between texts (represented as TF-IDF vectors) with the cosine similarity:

-

Scalar product (→ linear_kernel) of length-normalized TF-IDF vectors:

\[sim(\mathbf{x}_i, \mathbf{x}_j) = \frac{\mathbf{x}_i^\top \mathbf{x}_j}{\|\mathbf{x}_i\| \|\mathbf{x}_j\|} \quad \in [-1, 1]\]

i.e., the cosine of the angle between the two vectors.

→ The similarity score is in [0, 1] for TF-IDF vectors, since all entries in the vectors are non-negative.
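Both steps, computing TF-IDF vectors and their cosine similarity, can be sketched with scikit-learn on a tiny made-up corpus. By default, TfidfVectorizer already normalizes each vector to unit length, so the linear kernel directly yields the cosine similarity. Note that scikit-learn uses a smoothed variant of the IDF weight, so the exact values can differ from a textbook formula:

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import linear_kernel

# tiny made-up corpus: two related documents and one unrelated document
corpus = [
    "the cat sat on the mat",
    "the cat lay on the rug",
    "stock markets fell sharply today",
]

# fit: determine the vocabulary and IDF weights; transform: compute one TF-IDF row per document
vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(corpus)
print(X.shape)  # (number of documents, size of the vocabulary)

# scalar product of the length-normalized vectors = cosine similarity
similarities = linear_kernel(X)
print(similarities.round(2))
```

The first two documents share several words and therefore get a similarity above 0, while the third document has no words in common with the others and gets a similarity of exactly 0.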

- Disadvantages of TF-IDF vectors:

-

-

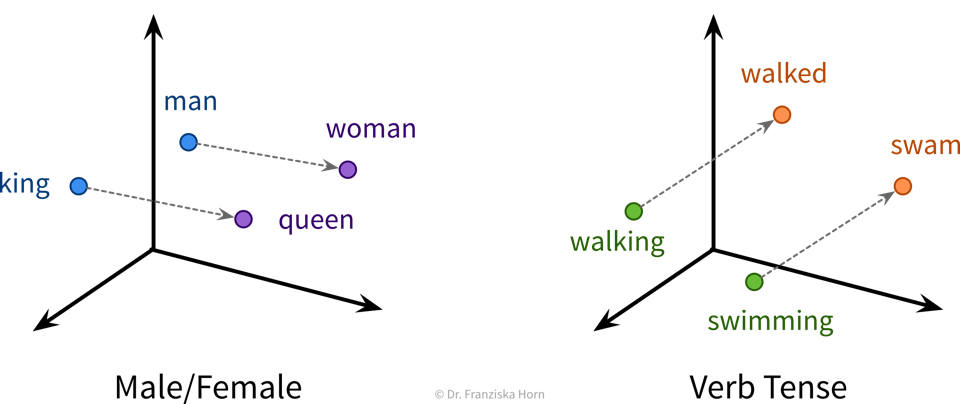

Similarity between individual words (e.g., synonyms) is not captured, since each word has its own distinct dimension in the feature vector and is therefore equally far away from all other words.

-

Word order is ignored → this is also where the name “bag of words” comes from, i.e., imagine all the words from a document are thrown into a bag and shaken, and then we just check how often each word occurred in the text.
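Both disadvantages can be reproduced with a few made-up example sentences. This is only a small sketch using the default settings of TfidfVectorizer:

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer

docs = [
    "dog bites man",
    "man bites dog",    # same words as the first document, different meaning
    "great film",
    "excellent movie",  # same meaning as the third document, different words
]
X = TfidfVectorizer().fit_transform(docs).toarray()

# word order is ignored: both documents get the same feature vector
same_vector = np.allclose(X[0], X[1])

# synonyms are not captured: no shared dimension, so the cosine similarity is 0
synonym_similarity = X[2] @ X[3]

print("identical vectors despite different meaning:", same_vector)
print("similarity of the two synonymous documents:", synonym_similarity)
```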

-

Unsupervised Learning

The first algorithms we look at in more detail are from the area of unsupervised learning:

While the subfields of unsupervised learning all include lots of different algorithms that can be used for the respective purpose, we’ll always just examine a few example algorithms with different underlying ideas in more detail. But feel free to, e.g., have a look at the sklearn user guide for more information about other methods.

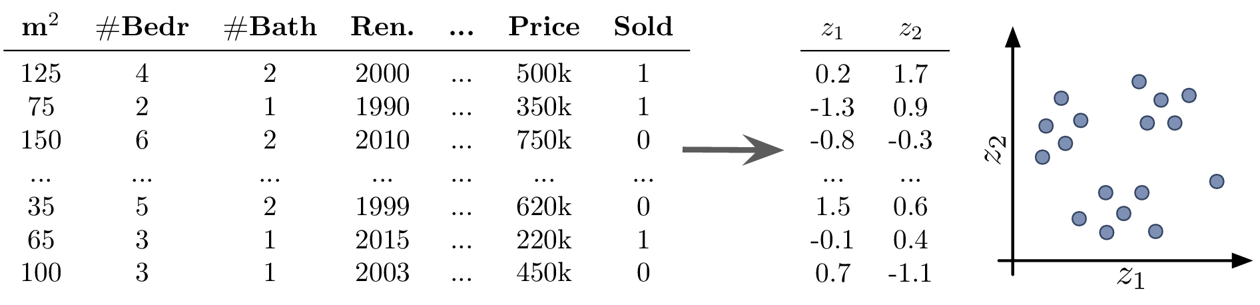

Dimensionality Reduction

The first subfield of unsupervised learning that we look at is dimensionality reduction:

- Goal

-

Reduce the number of features without losing relevant information.

- Advantages

-

-

Reduced data needs less memory (usually not that important anymore today)

-

Noise reduction (by focusing on the most relevant signals)

-

Create a visualization of the dataset (what we are mostly using these algorithms for)

-

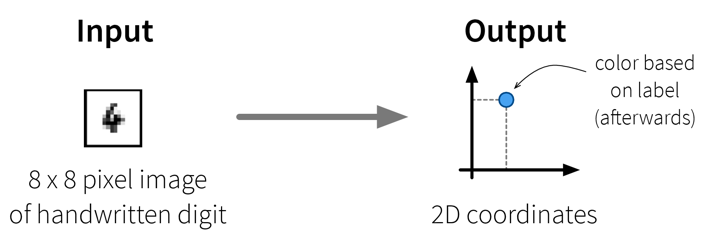

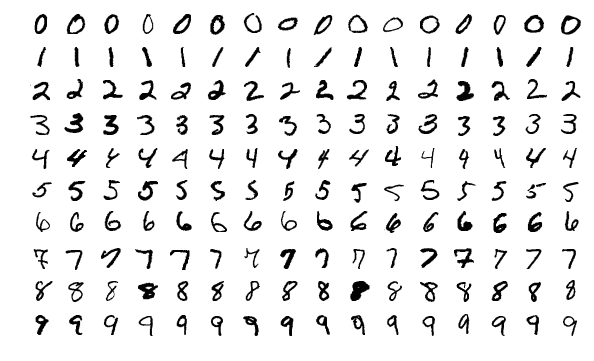

- Example: Embed images of handwritten digits in 2 dimensions

-

The dataset in this small example consists of a set of 8 x 8 pixel images of handwritten digits, i.e., each data point can be represented as a 64-dimensional input feature vector containing the gray-scale pixel values. Usually, this dataset is used for classification, i.e., where a model should predict which number is shown on the image. We instead only want to get an overview of the dataset, i.e., our goal is to reduce the dimensionality of this dataset to two coordinates, which we can then use to visualize all samples in a 2D scatter plot. Please note that the algorithms only use the original image pixel values as input to compute the 2D coordinates, but afterwards we additionally use the labels of the images (i.e., the digit shown in the image) to give the dots in the plot some color to better interpret the results.


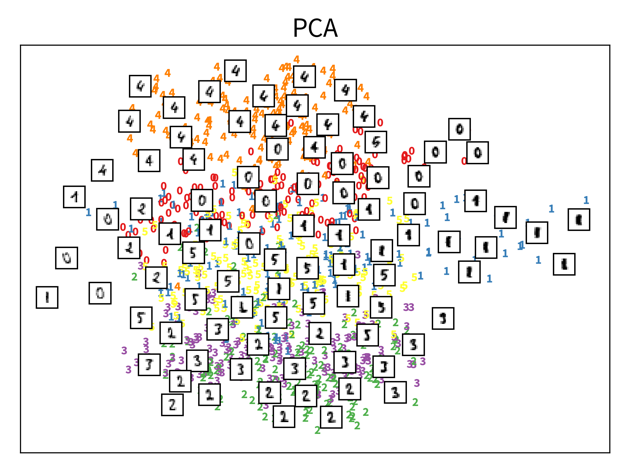

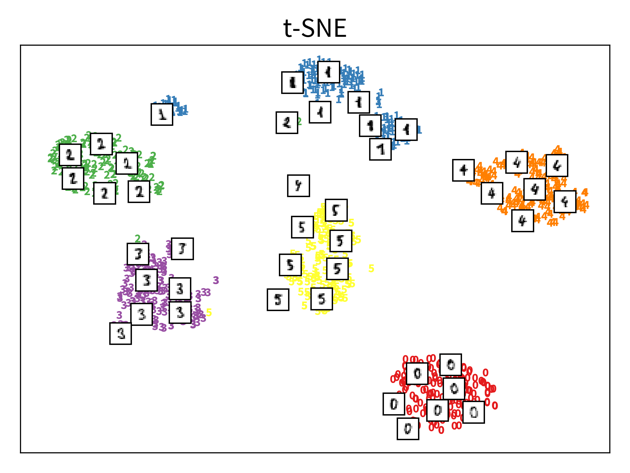

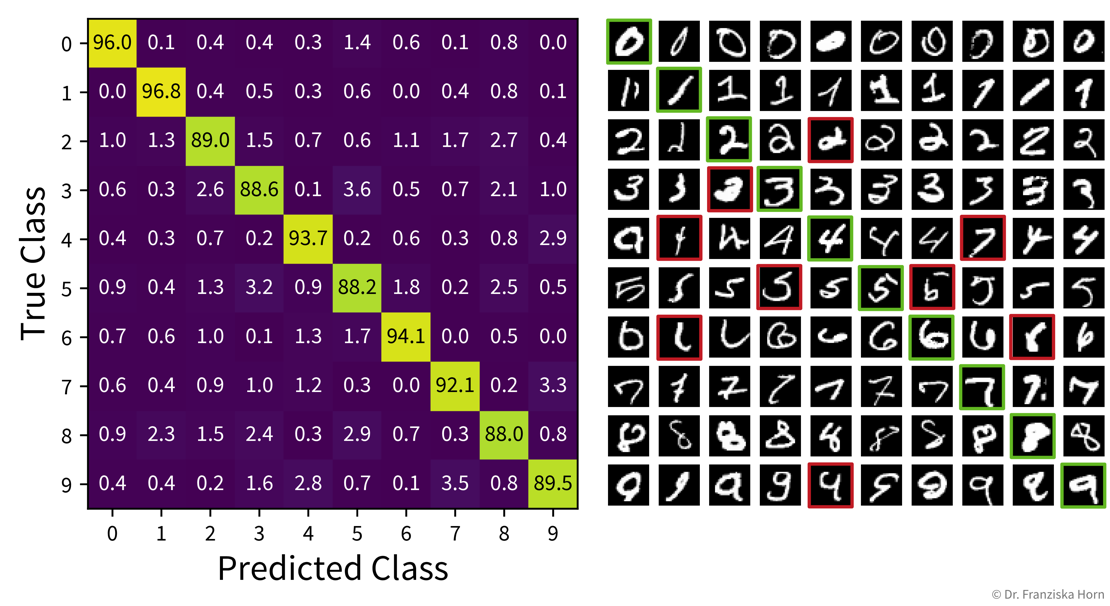

The two plots show the results, i.e., the 2-dimensional representation of the dataset created with two different dimensionality reduction algorithms, PCA and t-SNE. Each point or thumbnail in the plot represents one data point (i.e., one image) and the colors, numbers, and example images were added after reducing the dimensionality so the plot is easier to interpret.

There is no right or wrong way to represent the data in 2D — it’s an unsupervised learning problem, which by definition has no ground truth answer. The algorithms arrive at two very different solutions, since they follow different strategies and have a different definition of what it means to preserve the relevant information. While PCA created a plot that preserves the global relationship between the samples, t-SNE arranged the samples in localized clusters.

The remarkable thing here is that these methods did not know about the fact that the images displayed different, distinct digits (i.e., they did not use the label information), yet t-SNE grouped images showing the same number closer together. From such a plot we can already see that if we were to solve the corresponding classification problem (i.e., predict which digit is shown in an image), this task should be fairly easy, since even an unsupervised learning algorithm that did not use the label information showed that images displaying the same number are very similar to each other and can easily be distinguished from images showing different numbers. Or conversely, if our classification model performed poorly on this task, we would know that we have a bug somewhere, since apparently the relevant information is present in the features to solve this task.

| Please note that even though t-SNE seems to create clusters here, it is not a clustering algorithm. As a dimensionality reduction algorithm, t-SNE produces a set of new 2D coordinates for our samples, and when the samples are plotted at these coordinates, they happen to be arranged in clusters. A clustering algorithm, in contrast, outputs cluster indices that state which samples were assigned to the same group (which could then be used to color the points in the 2D coordinate plot). |
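The digits example above can be sketched in a few lines with sklearn. This is only a minimal sketch, not the exact code behind the plots: the subsample of 500 images, the perplexity value, and the random seed are assumptions chosen to keep the example fast and reproducible.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.manifold import TSNE

# 8 x 8 pixel images of handwritten digits, flattened to 64 features each
X, y = load_digits(return_X_y=True)
X, y = X[:500], y[:500]  # subsample to keep the sketch fast (assumption)

# both algorithms only see the pixel values X, never the labels y
X_pca = PCA(n_components=2).fit_transform(X)
X_tsne = TSNE(n_components=2, perplexity=30, init="pca", random_state=0).fit_transform(X)

print(X.shape, X_pca.shape, X_tsne.shape)
# the labels y are only used afterwards to color the dots, e.g.:
# plt.scatter(X_tsne[:, 0], X_tsne[:, 1], c=y)
```

Note that both results are arrays of 2D coordinates, one row per image, and not cluster indices.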

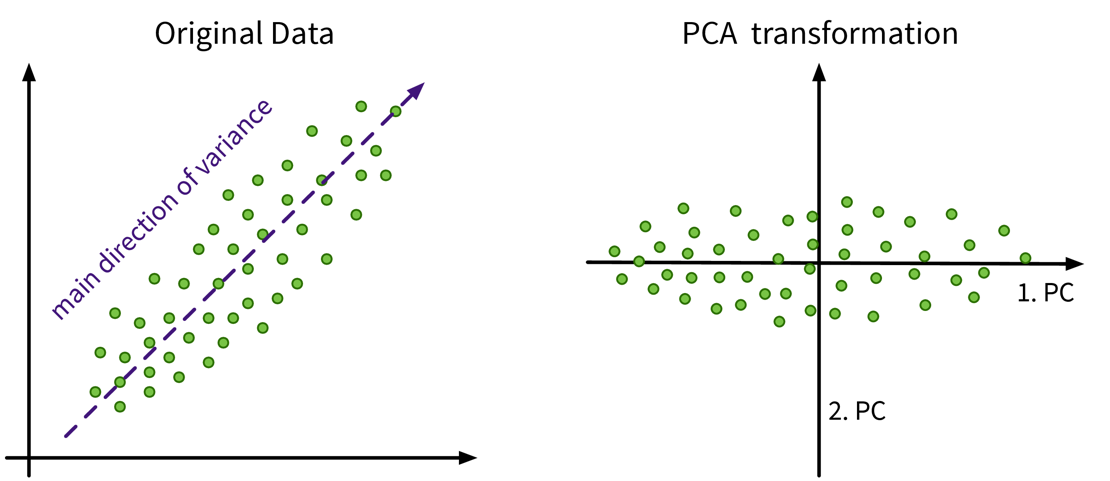

Principal Component Analysis (PCA)

- Useful for: General dimensionality reduction & noise reduction.

  ⇒ The transformed data is sometimes used as input for other algorithms instead of the original features.

- Main idea:

Compute the eigendecomposition of the dataset’s covariance matrix, a symmetric matrix of size \(d \times d\) (with d = number of original input features), which states how strongly any two features co-vary, i.e., how related they are, similar to the linear correlation between two features.

By computing the eigenvalues and eigenvectors of this matrix, the main directions of variance in the data are identified. These are the principal components and can be expressed as linear combinations of the original features. We then reorient the data along these new axes.

Have a look at this video for a more detailed explanation.

In this example the original data only has two features anyway, so a dimensionality reduction does not make much sense, but it nicely illustrates how the PCA algorithm works: The main direction of variance is selected as the first new dimension, while the direction with the next strongest variation (orthogonal to the first) is the second new dimension. These new dimensions are the principal components. The data is then rotated accordingly, such that the amount of variance in the data, i.e., the information content and therefore also the eigenvalue associated with the principal components, decreases from the first to the last of these new dimensions.
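The steps described above can be sketched directly with NumPy. The toy data and all variable names are made up for illustration; sklearn's PCA is only used at the end as a cross-check.

```python
import numpy as np
from sklearn.decomposition import PCA

rng = np.random.default_rng(0)
# toy data: 200 samples with 2 correlated features (hypothetical example)
X = rng.normal(size=(200, 2)) @ np.array([[2.0, 0.0], [1.5, 0.5]])

# center the data and compute the d x d covariance matrix
X_centered = X - X.mean(axis=0)
C = np.cov(X_centered, rowvar=False)

# eigendecomposition of the symmetric matrix (eigh returns ascending eigenvalues)
eigvals, eigvecs = np.linalg.eigh(C)
order = np.argsort(eigvals)[::-1]  # largest variance first
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# reorient the data along the principal components
X_rotated = X_centered @ eigvecs

# the variance along each new axis equals the corresponding eigenvalue
print(np.var(X_rotated, axis=0, ddof=1), eigvals)

# cross-check against sklearn
pca = PCA(n_components=2).fit(X)
print(pca.explained_variance_)
```

The columns of `eigvecs` are the principal components, i.e., each new axis is a linear combination of the two original features.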

from sklearn.decomposition import PCA

Important Parameters:

→ n_components: New dimensionality of the data; this can be at most the number of original features (or the rank of the feature matrix).

- Pros:

  - Linear algebra based: the solution is a global optimum, i.e., when we compute PCA multiple times on the same dataset, we’ll always get the same results.

  - We know how much information is retained in the low dimensional representation; this is stored in the attributes explained_variance_ratio_ or explained_variance_ (= eigenvalues of the respective PCs; singular_values_ holds their square roots up to a constant factor, and KernelPCA exposes its eigenvalues as eigenvalues_):

    The principal components are always ordered by the magnitude of their corresponding eigenvalues (largest first);

    When using the first k components with eigenvalues \(\lambda_i\), the amount of variance that is preserved is: \(\frac{\sum_{i=1}^k \lambda_i}{\sum_{i=1}^d \lambda_i}\).

    Note: If the intrinsic dimensionality of the dataset is lower than the number of original features, e.g., because some features were strongly correlated, then the last few eigenvalues will be (close to) zero. You can also plot the eigenvalue spectrum, i.e., the eigenvalues ordered by their magnitude, to see how many dimensions you might want to keep, i.e., where this curve starts to flatten out.
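As a small sketch of the formula above, the preserved variance can be computed for every possible k from the eigenvalues of a fitted PCA model. The digits dataset and the 95% cutoff are arbitrary choices for illustration, not a recommendation.

```python
import numpy as np
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA

X, _ = load_digits(return_X_y=True)
pca = PCA().fit(X)  # keep all components

eigvals = pca.explained_variance_  # eigenvalues, ordered largest first
# fraction of variance preserved by the first k components, for k = 1, ..., d
retained = np.cumsum(eigvals) / np.sum(eigvals)

# smallest k that preserves at least 95% of the variance (cutoff is an assumption)
k = int(np.argmax(retained >= 0.95)) + 1
print(k, retained[k - 1])

# eigenvalue spectrum, e.g.: plt.plot(eigvals)
```

The same numbers are available as `np.cumsum(pca.explained_variance_ratio_)`, so the manual computation is mainly useful to see where they come from.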

- Careful:

  - Computationally expensive for many (> 10k) features.

    Tip: If you have fewer data points than features, consider using Kernel PCA instead: This variant of the PCA algorithm computes the eigendecomposition of a similarity matrix, which is \(n \times n\) (with n = number of data points), i.e., when n < d this matrix will be smaller than the covariance matrix and therefore computing its eigendecomposition will be faster.

  - Outliers can heavily skew the results, because a few points far away from the center can introduce a large variance in that direction.
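To illustrate the tip about Kernel PCA: with a linear kernel, the similarity matrix contains the dot products between the (centered) data points, and the resulting embedding agrees with regular PCA up to the sign of each component. The random data below is purely hypothetical, and this is a sketch of the equivalence rather than a benchmark.

```python
import numpy as np
from sklearn.decomposition import PCA, KernelPCA

rng = np.random.default_rng(0)
X = rng.normal(size=(20, 200))  # n = 20 data points, d = 200 features

# regular PCA: conceptually based on the d x d covariance matrix
X_pca = PCA(n_components=2).fit_transform(X)
# kernel PCA with a linear kernel: based on the n x n similarity matrix
X_kpca = KernelPCA(n_components=2, kernel="linear").fit_transform(X)

# both embeddings agree up to the sign of each component
print(np.allclose(np.abs(X_pca), np.abs(X_kpca), atol=1e-6))
```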

t-SNE

- Useful for: Visualizing data in 2D (but please do not use the transformed data as input for other algorithms).

- Main idea:

Randomly initialize the points in 2D and move them around until their distances in 2D match the original distances in the high dimensional input space, i.e., until the points that were similar to each other in the original high dimensional space are located close together in the new 2D map of the dataset.

→ Have a look at the animations in this great blog article to see t-SNE in action!

from sklearn.manifold import TSNE

Important Parameters:

→ perplexity: Roughly: how many nearest neighbors a point is expected to have. Have a look at the corresponding section in the blog article linked above for an example. However, what an appropriate value for this parameter is depends on the size and diversity of the dataset, e.g., if a dataset consists of 1000 images with 10 classes, then a perplexity of 5 might be a reasonable choice, while for a dataset with 1 million samples, 500 could be a better value.

  The original paper says values up to 50 work well, but in 2003 “big data” also wasn’t a buzzword yet ;-)

→ metric: How to compute the distances in the original high dimensional input space, which tells the model which points should be close together in the 2D map.

- Pros:

  - Very nice visualizations.

- Careful:

  - Algorithm can get stuck in a local optimum, e.g., with some points trapped between clusters.

  - Selection of distance metric for heterogeneous data ⇒ normalize!
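A minimal sketch of how the two parameters and the normalization advice fit together. The wine dataset is used here only because its features are measured on very different scales; the perplexity value and the choice of StandardScaler are assumptions for illustration.

```python
from sklearn.datasets import load_wine
from sklearn.manifold import TSNE
from sklearn.preprocessing import StandardScaler

# 178 samples with 13 features measured on very different scales
X, y = load_wine(return_X_y=True)

# normalize so that no single feature dominates the distance computation
X_scaled = StandardScaler().fit_transform(X)

# small dataset -> rather small perplexity; distances are Euclidean
tsne = TSNE(n_components=2, perplexity=20, metric="euclidean", random_state=0)
X_2d = tsne.fit_transform(X_scaled)
print(X_2d.shape)
```

Since the optimization can end in different local optima, fixing `random_state` only makes a single run reproducible; it does not make the map unique.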

Outlier / Anomaly Detection

Next up on our list of ML tools is anomaly or outlier detection:

- Useful for:

  - Detecting anomalies for monitoring purposes, e.g., machine failures or fraudulent credit card transactions.

  - Removing outliers from the dataset to improve the performance of other algorithms.

Things to consider when trying to remove outliers or detect anomalies:

- Does the dataset consist of independent data points or time series data with dependencies?

- Are you trying to detect

  - outliers in individual dimensions? For example, in time series data we might not see a failure in all sensors simultaneously, but only one sensor acts up spontaneously, i.e., shows a value very different from the previous time point, which would be enough to consider this time point an anomaly irrespective of the values of the other sensors.

  - multidimensional outlier patterns? Individual feature values of independent data points might not seem anomalous by themselves, only when considered in combination with the data point’s other feature values. For example, a 35 \(m^2\) apartment could be a nice studio, but if this apartment supposedly has 5 bedrooms, then something is off.

- Are you expecting a few individual outliers or clusters of outliers? The latter is especially common in time series data, where, e.g., a machine might experience an issue for several minutes or even hours before the signals look normal again. Clusters of outliers are trickier to detect, especially if the data also contains other ‘legitimate’ clusters of normal points. Subject matter expertise is key here!

- Do you have any labels available? While we might not know what the different kinds of (future) anomalies look like, maybe we do know what normal data points look like, which is important information that we can use to check how far new points deviate from these normal points, also called novelty detection.
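The first case (a single sensor suddenly deviating from its previous value) can be sketched as follows. The simulated sensor data and the threshold rule (10 times the median absolute change per sensor) are assumptions made up for this example; in practice the threshold should come from subject matter expertise.

```python
import numpy as np

rng = np.random.default_rng(0)
# hypothetical data: 500 time points x 3 sensors that drift slowly over time
X = rng.normal(scale=0.1, size=(500, 3)).cumsum(axis=0)
X[250, 1] += 5.0  # sensor 1 acts up at a single time point

# absolute change of every sensor compared to the previous time point
jumps = np.abs(np.diff(X, axis=0))         # shape (499, 3)
threshold = 10 * np.median(jumps, axis=0)  # per-sensor threshold (assumption)

# a time point is flagged if any single sensor jumps by more than its threshold
is_anomaly = np.zeros(len(X), dtype=bool)
is_anomaly[1:] = (jumps > threshold).any(axis=1)
print(np.flatnonzero(is_anomaly))
```

Both the spike itself and the following time point (where the signal jumps back) are flagged. A multidimensional pattern like the 5-bedroom studio would not be caught by such a per-feature check.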

| Know your data — are missing values marked as NaNs (“Not a Number”) or set to some ‘unreasonable’ high or low value? |

| Removing outlier samples from a dataset is often a necessary cleaning step, e.g., to obtain better prediction models. However, we should always be able to explain why we removed these points, as they could also be interesting edge cases. Try to remove as many outliers as possible with manually crafted rules (e.g., “when this sensor is 0, the machine is off and the points can be disregarded”), especially when the dataset contains clusters of outliers, which are harder to detect with data-driven methods. |

| Please note that some of the data points a prediction model encounters in production might be outliers as well. Therefore, new data also needs to be screened for outliers, as otherwise these points would force the model to extrapolate beyond the training domain. |
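Manually crafted cleaning rules like the ones mentioned in the notes above could look as follows. The column layout, the sentinel value -999, and the rules themselves are hypothetical; they only illustrate the pattern of encoding domain knowledge explicitly so that every removed point can be explained.

```python
import numpy as np

# hypothetical sensor log: columns = [machine_speed, temperature]
X = np.array([
    [120.0, 71.5],
    [0.0, 20.1],      # machine is off
    [118.0, -999.0],  # missing temperature encoded as -999
    [121.0, 72.3],
])

# rule 1: mark the sentinel value as missing instead of treating it as a measurement
X[X == -999.0] = np.nan

# rule 2: when the speed sensor is 0, the machine is off and the point is disregarded
machine_on = X[:, 0] != 0
X_clean = X[machine_on]

# rule 3: drop rows that still contain missing values
X_clean = X_clean[~np.isnan(X_clean).any(axis=1)]
print(X_clean)
```

The same rules would need to be applied to new data in production before it is passed to a prediction model.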

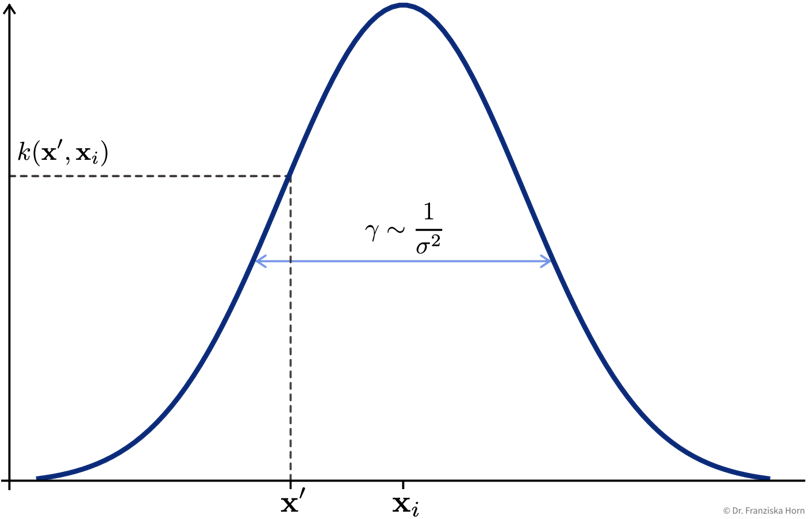

\(\gamma\)-index

Harmeling, Stefan, et al. “From outliers to prototypes: ordering data.” Neurocomputing 69.13-15 (2006): 1608-1618.

- Main idea: Compute the average distance of a point to its k nearest neighbors:

  → Points with a large average distance are more likely to be outliers.

  ⇒ Set a threshold for the average distance when a point is considered an outlier.

```python
import numpy as np
from sklearn.metrics import pairwise_distances
from sklearn.neighbors import NearestNeighbors


def gammaidx(X, k):
    """
    Inputs:
        - X [np.array]: n samples x d features input matrix
        - k [int]: number of nearest neighbors to consider
    Returns:
        - gamma_index [np.array]: vector of length n with gamma index for each sample
    """
    # compute n x n Euclidean distance matrix
    D = pairwise_distances(X, metric='euclidean')
    # sort the entries of each row, such that the 1st column is 0 (distance of point to itself),
    # the following columns are distances to the closest nearest neighbors (i.e., smallest values first)
    Ds = np.sort(D, axis=1)
    # compute mean distance to the k nearest neighbors of every point
    gamma_index = np.mean(Ds[:, 1:(k+1)], axis=1)
    return gamma_index


# or more efficiently with the NearestNeighbors class
def gammaidx_fast(X, k):
    """
    Inputs:
        - X [np.array]: n samples x d features input matrix
        - k [int]: number of nearest neighbors to consider
    Returns:
        - gamma_index [np.array]: vector of length n with gamma index for each sample
    """
    # initialize and fit nearest neighbors search
    nn = NearestNeighbors(n_neighbors=k).fit(X)
    # compute mean distance to the k nearest neighbors of every point (ignoring the point itself)
    # (nn.kneighbors returns a tuple of distances and indices of nearest neighbors)
    gamma_index = np.mean(nn.kneighbors()[0], axis=1)  # for new points: nn.kneighbors(X_test)
    return gamma_index
```

- Pros:

  - Conceptually very simple and easy to interpret.

- Careful:

  - Computationally expensive for large datasets (distance matrix: \(\mathcal{O}(n^2)\)) → compute distances to a random subsample of the dataset or to a smaller set of known non-anomalous points instead.

  - Normalize heterogeneous datasets before computing distances!

  - Know your data: does the dataset contain larger clusters of outliers? → k needs to be large enough such that a tight cluster of outliers is not mistaken for prototypical data points.
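The following sketch combines two of the points above: the \(\gamma\)-index of new points is computed only with respect to a reference set of known non-anomalous points, and a threshold is then applied to the scores. The simulated data and the choice of the 99th percentile as the threshold are assumptions for illustration.

```python
import numpy as np
from sklearn.neighbors import NearestNeighbors

rng = np.random.default_rng(0)
X_normal = rng.normal(size=(1000, 2))          # known non-anomalous points
X_new = np.vstack([rng.normal(size=(50, 2)),    # new points that look normal
                   [[8.0, 8.0], [-9.0, 7.0]]])  # two obvious anomalies

k = 5
nn = NearestNeighbors(n_neighbors=k).fit(X_normal)

# gamma index of the reference points themselves (each point itself is ignored)
gamma_ref = nn.kneighbors()[0].mean(axis=1)
# threshold: 99th percentile of the reference scores (an assumption)
threshold = np.quantile(gamma_ref, 0.99)

# gamma index of the new points: mean distance to their k nearest reference points
gamma_new = nn.kneighbors(X_new)[0].mean(axis=1)
is_outlier = gamma_new > threshold
print(np.flatnonzero(is_outlier))
```

With a percentile-based threshold, a small fraction of normal points will be flagged as well, so the cutoff is a trade-off between missed anomalies and false alarms.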

| Extension for time series data: don’t identify the k nearest neighbors of a sample based on the distance of the data points in the feature space, but take the neighboring time points instead. |
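One possible reading of this extension is sketched below: for every time point, the k neighbors are simply the k/2 time points before and after it. The function name `gammaidx_ts`, the symmetric window, and the simulated data are assumptions for this illustration, not part of the cited paper.

```python
import numpy as np


def gammaidx_ts(X, k):
    """Mean distance of every time point to its (up to) k neighboring time points."""
    n = len(X)
    half = k // 2
    gamma_index = np.zeros(n)
    for t in range(n):
        # indices of the neighboring time points (window is clipped at the borders)
        idx = [i for i in range(t - half, t + half + 1) if 0 <= i < n and i != t]
        gamma_index[t] = np.linalg.norm(X[idx] - X[t], axis=1).mean()
    return gamma_index


rng = np.random.default_rng(0)
X = rng.normal(scale=0.1, size=(300, 3))  # hypothetical time series with 3 sensors
X[100] += 3.0                             # a single anomalous time point
gamma = gammaidx_ts(X, k=4)
print(int(np.argmax(gamma)))
```

The direct neighbors of the anomalous time point also receive elevated scores, since the anomaly is part of their window.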

Clustering

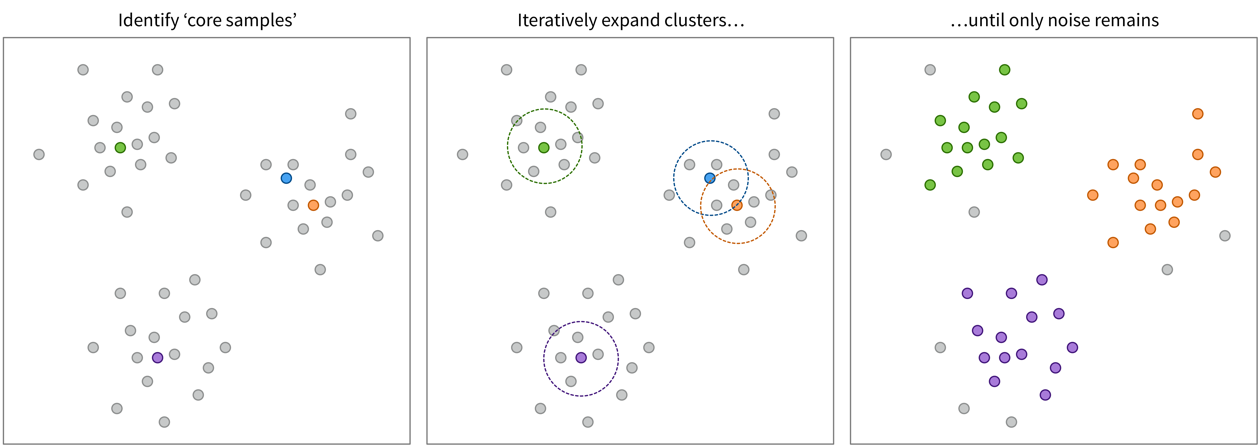

The last category of unsupervised learning algorithms is clustering:

- Useful for: Identifying naturally occurring groups in the data (e.g., for customer segmentation).

There exist quite a lot of different clustering algorithms and we’ll only present two with different ideas here.

When you look at the linked sklearn examples, please note that even though other clustering algorithms might seem to perform very well on fancy toy datasets, data in reality is seldom arranged in two concentric circles, and on real-world datasets the k-means clustering algorithm is often a robust choice.

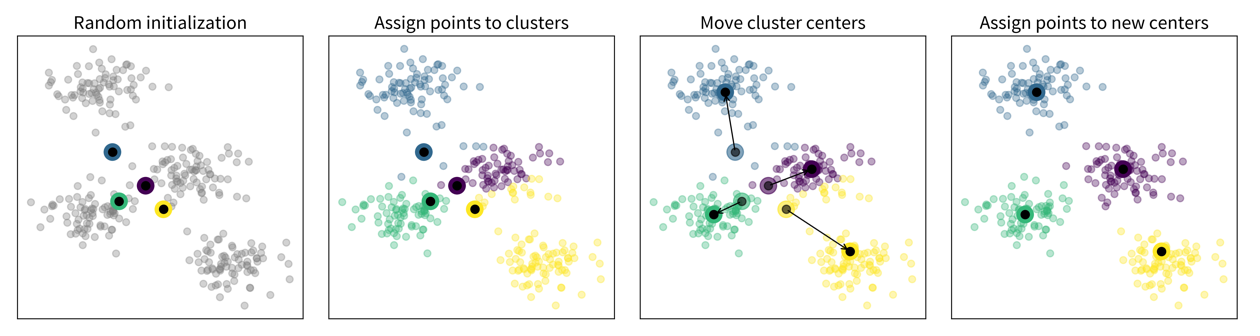

k-means clustering

- Main idea:

  1. Randomly place k cluster centers (where k is a hyperparameter set by the user);

  2. Assign each data point to its closest cluster center;

  3. Update cluster centers as the mean of the assigned data points;

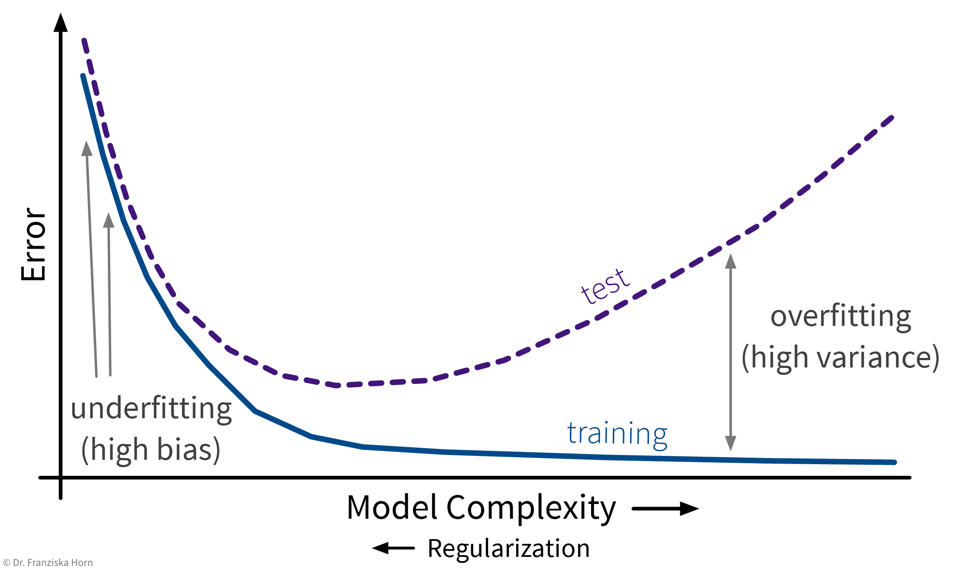

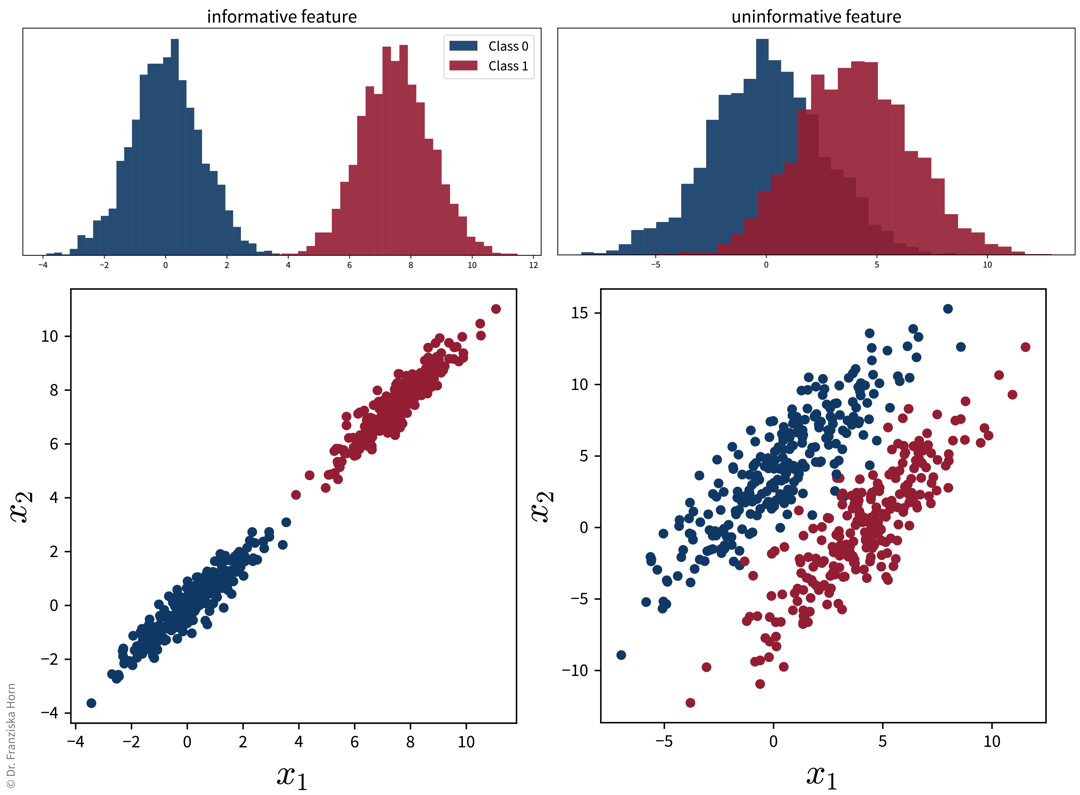

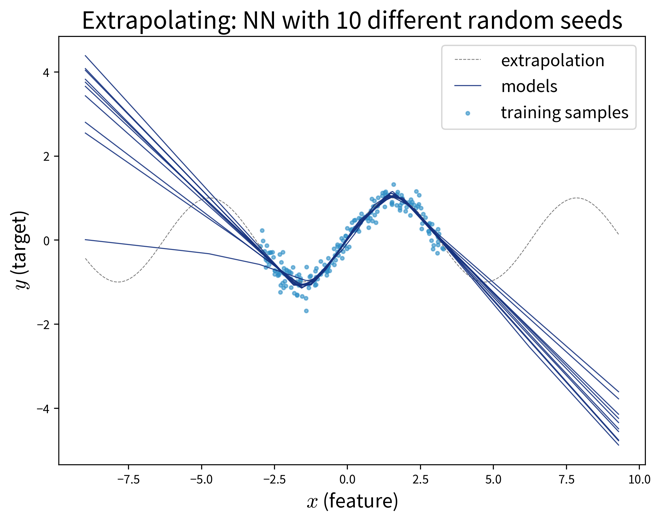

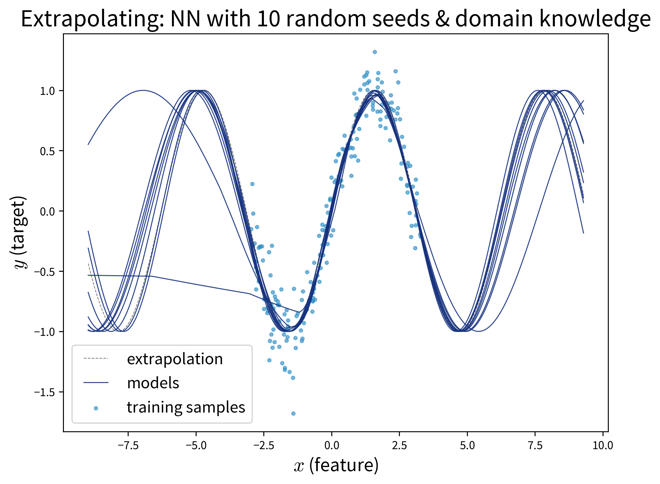

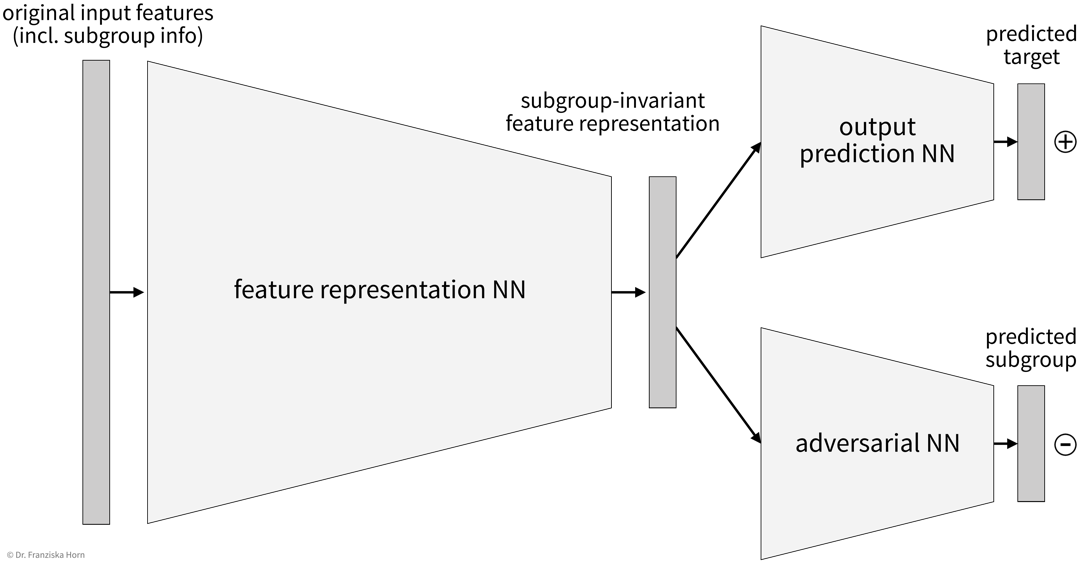

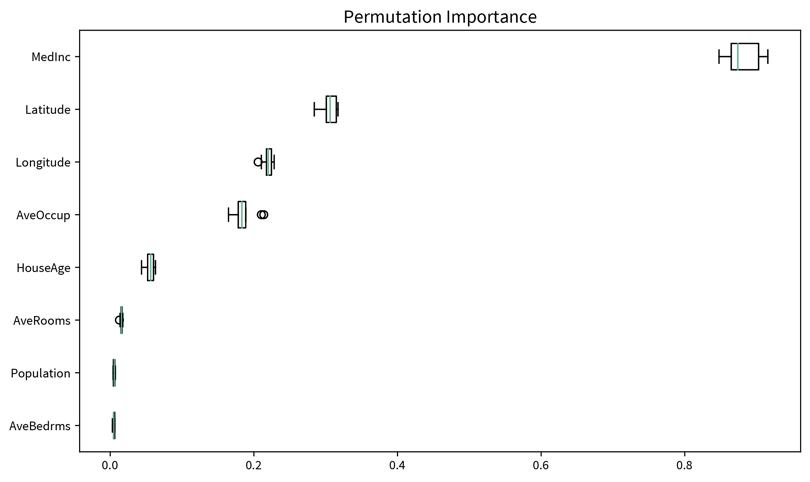

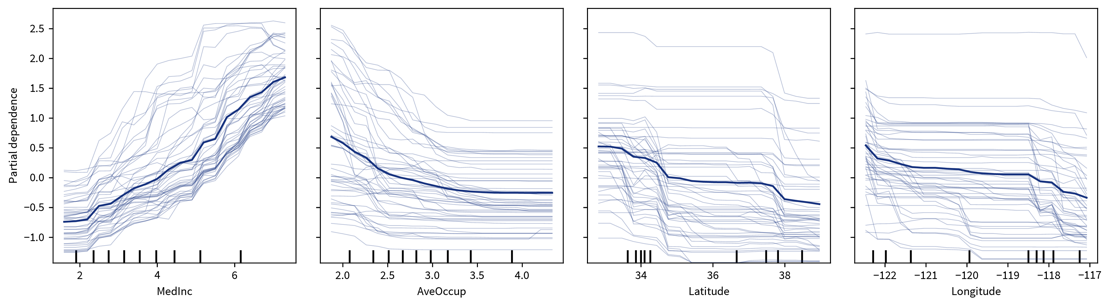

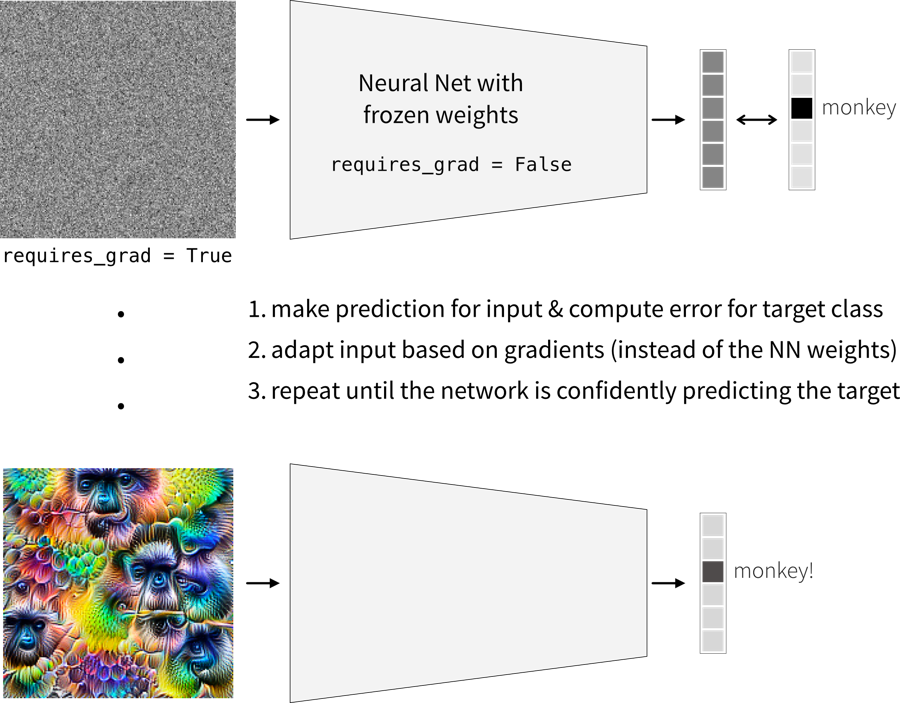

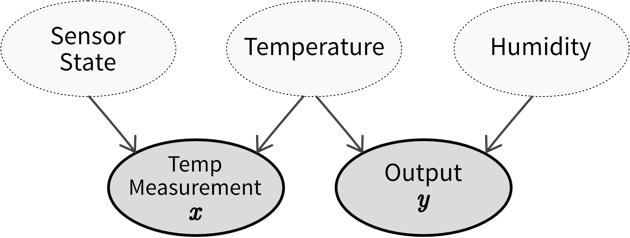

Repeat steps 2-3 until convergence.
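The steps above can be sketched in plain NumPy. This is a minimal illustration, not the sklearn implementation: placing the initial centers at k randomly chosen data points, the iteration limit, and the toy data are assumptions.

```python
import numpy as np


def kmeans(X, k, n_iter=100, seed=0):
    rng = np.random.default_rng(seed)
    # step 1: initialize the centers at k randomly chosen data points (assumption)
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # step 2: assign each data point to its closest cluster center
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # step 3: update each center as the mean of its assigned points
        # (a center without any assigned points stays where it is)
        new_centers = np.array([
            X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        # stop once the centers no longer move (convergence)
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers


# toy data: three blobs of 50 points each
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(loc=c, scale=0.3, size=(50, 2)) for c in ([0, 0], [5, 5], [0, 5])])
labels, centers = kmeans(X, k=3)
print(centers.round(1))
```

Unlike PCA, the result depends on the random initialization, so in practice the algorithm is usually run several times with different starting points, and the best solution is kept.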