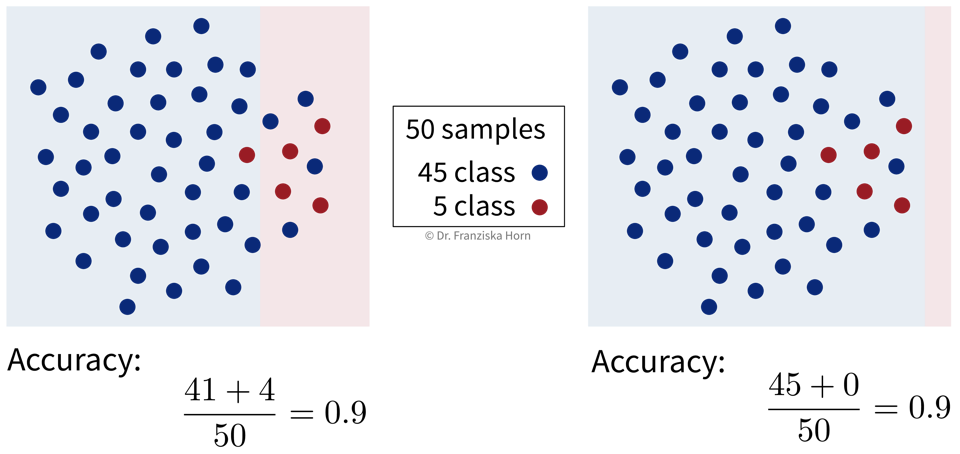

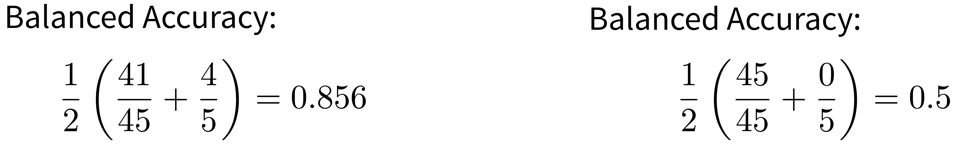

[Pitfall #1] Deceptive model evaluation

Predictive models need to be evaluated: their performance has to be quantified with an appropriate evaluation metric to get a realistic estimate of how useful a model will be in practice and how many mistakes we should expect from it.

Since in supervised learning problems we know the ground truth, we can objectively evaluate different models and benchmark them against each other.

However, it is easy to paint an overly optimistic picture here, so we always need to be critical and, for example, compare the performance of a model to that of a baseline. The simplest comparison is to a “stupid” model that always predicts the mean of the target (→ regression) or the most frequent class (→ classification). A model that does not clearly beat this baseline has learned little of use, no matter how good its score looks in isolation.
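To make the baseline comparison concrete, here is a minimal sketch in plain Python. The toy labels and the helper names (`majority_class_baseline`, `accuracy`, `mean_absolute_error`) are made up for illustration and are not taken from the text. The data is chosen to show how a trivial baseline can already score well when the classes are imbalanced.

```python
from collections import Counter
from statistics import mean


def majority_class_baseline(y_train):
    """Return the most frequent class in the training labels."""
    return Counter(y_train).most_common(1)[0][0]


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the ground truth."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def mean_absolute_error(y_true, y_pred):
    """Average absolute difference between truth and prediction."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


# Classification: hypothetical, imbalanced toy labels (90% belong to class 0).
y_train_cls = [0] * 90 + [1] * 10
y_test_cls = [0] * 18 + [1] * 2

baseline_class = majority_class_baseline(y_train_cls)
baseline_acc = accuracy(y_test_cls, [baseline_class] * len(y_test_cls))
print(f"baseline accuracy: {baseline_acc:.2f}")

# Regression: hypothetical toy targets; the baseline always predicts the training mean.
y_train_reg = [1.0, 2.0, 3.0, 4.0]
y_test_reg = [2.0, 3.0]

baseline_value = mean(y_train_reg)
baseline_mae = mean_absolute_error(y_test_reg, [baseline_value] * len(y_test_reg))
print(f"baseline MAE: {baseline_mae:.2f}")
```

On this toy data, always predicting the majority class already reaches 90% accuracy, so a real model reporting, say, 91% would be far less impressive than the number alone suggests. If scikit-learn is available, its `DummyClassifier` and `DummyRegressor` (in `sklearn.dummy`) provide comparable baselines with the usual `fit`/`predict` interface.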