[Pitfall #3] Model abuses spurious correlations

Even when a model is capable of generating correct predictions for new data points from the same distribution as the training set, this does not mean that the model actually picked up on the true causal relationship between the inputs and outputs!

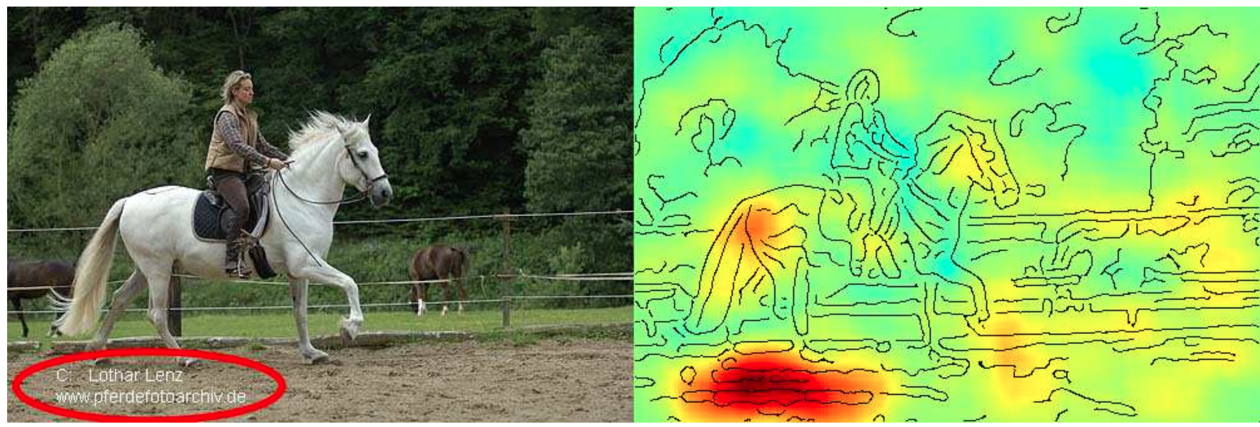

| ML models love to cheat & take shortcuts! They will often pick up on spurious correlations instead of learning the true causal relationships. This makes them vulnerable to adversarial attacks and data/domain shifts, which force the model to extrapolate instead of interpolate. |

This is far from the only example where a model has “cheated” by exploiting spurious correlations in the training set. Another popular example is a dataset with images of dogs and wolves, where all wolves were photographed on snowy backgrounds and the dogs on grass or other non-white backgrounds. A model trained on such a dataset can show good predictive performance without having learned the true causal relationship between the features and labels, because detecting snow in the background is already enough to tell the two classes apart.
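The dog/wolf effect can be reproduced on toy data. The following is a minimal sketch, not taken from any of the papers mentioned: the features `shape` (a noisy but genuinely informative signal) and `snow` (the background), the helper names, and all parameter values are assumptions made for illustration. A small logistic regression is trained on data where `snow` always matches the label and is then evaluated once on data from the same distribution and once on shifted data where the background is unrelated to the label.

```python
import math
import random


def make_data(n, snow_agreement, rng):
    """Toy 'dog vs. wolf' data (label 1 = wolf); all values are illustrative.

    shape: weakly informative causal feature (label plus Gaussian noise).
    snow:  background feature that equals the label with probability
           `snow_agreement` (1.0 = perfect shortcut, 0.5 = pure noise).
    """
    X, y = [], []
    for _ in range(n):
        label = rng.randint(0, 1)
        shape = label + rng.gauss(0.0, 1.0)
        snow = label if rng.random() < snow_agreement else 1 - label
        X.append((shape, float(snow)))
        y.append(label)
    return X, y


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logreg(X, y, epochs=300, lr=1.0):
    """Plain full-batch gradient descent on the logistic loss."""
    w0 = w1 = b = 0.0
    n = len(X)
    for _ in range(epochs):
        g0 = g1 = gb = 0.0
        for (shape, snow), label in zip(X, y):
            err = sigmoid(w0 * shape + w1 * snow + b) - label
            g0 += err * shape
            g1 += err * snow
            gb += err
        w0 -= lr * g0 / n
        w1 -= lr * g1 / n
        b -= lr * gb / n
    return w0, w1, b


def accuracy(params, X, y):
    w0, w1, b = params
    hits = sum((w0 * s + w1 * t + b > 0) == (label == 1)
               for (s, t), label in zip(X, y))
    return hits / len(y)


rng = random.Random(0)
X_train, y_train = make_data(500, 1.0, rng)   # all wolves on snow
X_iid, y_iid = make_data(2000, 1.0, rng)      # same distribution as training
X_shift, y_shift = make_data(2000, 0.5, rng)  # background no longer informative

params = train_logreg(X_train, y_train)
acc_iid = accuracy(params, X_iid, y_iid)
acc_shift = accuracy(params, X_shift, y_shift)
print(f"weights (shape, snow, bias): {params}")
print(f"accuracy, same distribution: {acc_iid:.3f}")
print(f"accuracy, shifted data:      {acc_shift:.3f}")
```

In this setup the test set drawn from the training distribution cannot reveal the problem, since the shortcut works there just as well. Only the shifted data shows that the model relied on the background.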

To catch these kinds of mishaps, it is important to

- a) critically examine the test set and hopefully notice any problematic patterns that could result in an overly optimistic performance estimate, and
- b) interpret the model and explain its predictions to see if it has focused on the features you (or a subject matter expert) would have expected (as they did in the paper above).
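Both checks can be sketched in a few lines. The example below is an illustration under stated assumptions, not a prescribed procedure: the toy features `shape` and `snow`, the fixed weights inside `predict` (a hypothetical stand-in for an already trained model), and the choice of Pearson correlation and permutation importance as the concrete tools are all made up for this sketch. Step a) looks for features in the evaluation data that track the label suspiciously well. Step b) shuffles one feature at a time and measures how much the accuracy drops, which indicates what the model actually relies on.

```python
import random

FEATURES = ["shape", "snow"]  # hypothetical feature names


def pearson(a, b):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (v - mean_b) for x, v in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / (var_a * var_b) ** 0.5


def predict(row):
    # Stand-in for a trained model; the weights are assumed, not learned here.
    shape, snow = row
    return int(0.5 * shape + 4.0 * snow - 2.2 > 0)


def accuracy(X, y):
    return sum(predict(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(X, y, col, rng, repeats=10):
    """Mean accuracy drop when the values of column `col` are shuffled."""
    base = accuracy(X, y)
    drops = []
    for _ in range(repeats):
        shuffled = [row[col] for row in X]
        rng.shuffle(shuffled)
        X_perm = [row[:col] + (v,) + row[col + 1:]
                  for row, v in zip(X, shuffled)]
        drops.append(base - accuracy(X_perm, y))
    return sum(drops) / repeats


# Toy test set with the same flaw as the training data:
# the background ("snow") always matches the label.
rng = random.Random(1)
y_test = [rng.randint(0, 1) for _ in range(1000)]
X_test = [(label + rng.gauss(0.0, 1.0), float(label)) for label in y_test]

# a) Examine the data: which features track the label suspiciously well?
corr = {name: pearson([row[i] for row in X_test], y_test)
        for i, name in enumerate(FEATURES)}

# b) Interpret the model: which features does it depend on?
importance = {name: permutation_importance(X_test, y_test, i, rng)
              for i, name in enumerate(FEATURES)}

for name in FEATURES:
    print(f"{name:>5}: correlation with label = {corr[name]:.2f}, "
          f"permutation importance = {importance[name]:.2f}")
```

A background feature that correlates almost perfectly with the label, and whose shuffling destroys most of the accuracy, is exactly the kind of pattern a subject matter expert should be asked about.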

Finding robust causal models that capture the true ‘input → output’ relationship in the data is still an active research area and a lot harder than learning a model that “only” generalizes well to the test set.